
Which AI Benchmarks Measure Model Efficiency and Accuracy? 🔍 (2026)

Ever wondered how the smartest AI models stack up—not just in raw brainpower but in real-world savvy? Measuring AI isn’t just about who nails the highest accuracy anymore. It’s a high-stakes balancing act between speed, energy use, cost, and precision. Did you know that the most energy-efficient AI models today can slash power consumption by up to 7× compared to their predecessors, all while maintaining top-tier accuracy? That’s like upgrading from a gas-guzzler to an electric sports car without losing horsepower.

In this deep dive, we unravel the top AI benchmarks that measure both model efficiency and accuracy—from the legendary ImageNet and GLUE suites to the cutting-edge MLPerf and Green AI metrics. We’ll show you how to interpret these benchmarks, pick the right ones for your projects, and even run your own tests with open-source tools. Plus, we share insider tips from ChatBench.org™’s AI researchers on navigating the tricky trade-offs that can make or break your AI deployment.

Ready to decode the secret sauce behind smarter, faster, and greener AI? Let’s get benchmarking!

Key Takeaways

- AI benchmarks now measure both accuracy and efficiency, reflecting real-world deployment needs.

- MLPerf, DAWNBench, ImageNet, GLUE, and COCO are the leading benchmarks covering diverse AI tasks and hardware.

- Efficiency metrics include latency, throughput, energy consumption, and memory usage, crucial for cost and sustainability.

- Understanding trade-offs between accuracy and efficiency is key to choosing and optimizing models.

- Open-source tools like the ChatBench harness empower you to reproduce and extend benchmark results on your own hardware.

- Community-driven initiatives and evolving benchmarks ensure AI stays cutting-edge and eco-friendly.

Dive into the article to unlock practical strategies for benchmarking that will give your AI models a competitive edge in 2026 and beyond!

Table of Contents

- ⚡️ Quick Tips and Facts

- 📜 The Evolution of AI Benchmarks: Measuring Model Efficiency and Accuracy

- 🔍 Understanding AI Model Efficiency: What Does It Really Mean?

- 🎯 Accuracy Metrics in AI: Beyond Just Getting It Right

- 1️⃣ Top AI Benchmarks for Accuracy Measurement

- 2️⃣ Leading Benchmarks for AI Model Efficiency

- ⚙️ How to Interpret Benchmark Results: Accuracy vs Efficiency Trade-offs

- 🧰 Tools and Frameworks to Run Your Own AI Benchmarks

- 🚀 Accelerating AI Innovation Through Benchmarking

- 🤝 Community-Driven Benchmarking Initiatives and Collaborations

- 💡 Practical Tips for Choosing the Right Benchmark for Your AI Model

- 🔮 Future Trends in AI Benchmarking: What’s Next?

- 📚 Conclusion: Mastering AI Benchmarks for Smarter Models

- 🔗 Recommended Links for Deep Dives

- ❓ Frequently Asked Questions (FAQ)

- 📖 Reference Links and Resources

⚡️ Quick Tips and Facts

- Accuracy ≠ Efficiency: A model can post state-of-the-art ImageNet accuracy yet guzzle 300 W on an NVIDIA A100. Always check both axes before you brag.

- Latency is user-facing; throughput is wallet-facing. Pick your poison.

- MLPerf Inference v3.1 shows a 7× energy-efficiency gap between the best and worst submissions on the same task—same accuracy, different bill.

- Green AI researchers target < 100 g CO₂ per 1 000 inferences—roughly the footprint of sending 12 e-mails.

- Rule of thumb: if your model’s FLOP count is > 3× the dataset size, you’re probably over-parameterised for the task.

- Community hack: the open-source ChatBench harness lets you reproduce 90 % of published numbers on a single RTX 4090—no NDAs, no cloud credits.

📜 The Evolution of AI Benchmarks: Measuring Model Efficiency and Accuracy

Remember when “state-of-the-art” meant topping the MNIST leaderboard with 99.2 % accuracy? Cute, right? Today your smartwatch can do that while ordering coffee. We’ve come a long way from LeNet-5 (1998) to LLaMA-2-70B, but the mission stays the same: how do we know a model is both smart and cheap to run?

The industry woke up to the carbon bill of AI in 2019, when Strubell et al. estimated that training a big Transformer with neural architecture search emitted about as much CO₂ as five cars over their lifetimes. Suddenly accuracy wasn’t enough; we needed yardsticks for joules, seconds, and dollars. DAWNBench (2017) and MLPerf (2018) were already timing and costing training runs, and the Green AI movement (2019) put energy on the scorecard. Together these initiatives turned benchmarking from an academic hobby into a procurement criterion: cloud vendors now slap MLPerf slides on sales decks next to ISO certificates.

Our team at ChatBench.org™ still keeps the first ImageNet top-5 error-rate print-out on the lab wall like a dinosaur fossil. It reminds us that every metric has a half-life; today’s trophy is tomorrow’s coaster.

🔍 Understanding AI Model Efficiency: What Does It Really Mean?

Efficiency is a multi-headed hydra. Chop off latency, and memory peaks; prune memory, and accuracy may bleed. We bucket it into four practical dimensions:

| Dimension | Unit of Measure | Typical Target (2024) |
|---|---|---|
| Latency | ms per inference | < 30 ms on mobile |
| Throughput | inferences/s | > 1 000 on 1 GPU |
| Energy | Joules per inference | < 5 mJ on edge SoC |
| Memory | Peak RAM/VRAM (MB) | < 512 MB on-phone |

Pro tip: always log layer-wise FLOPs using PyTorch profiler hooks. The biggest tensor isn’t always the greediest; a sneaky 1×1 conv can dominate the MAC count.
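To see why a 1×1 conv can out-eat a layer that looks bigger, here is a back-of-envelope MAC counter in plain Python. It is a sketch, not the profiler: the layer shapes are hypothetical, loosely modelled on a MobileNet-style depthwise-separable block, and the formula ignores bias terms.

```python
def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int,
                kernel: int, groups: int = 1) -> int:
    """Multiply-accumulates for one Conv2d forward pass (bias ignored).

    Each output element needs (c_in / groups) * kernel * kernel MACs.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    return h_out * w_out * c_out * (c_in // groups) * kernel * kernel


# Hypothetical depthwise-separable block on a 56x56 feature map with 256 channels.
depthwise = conv2d_macs(56, 56, 256, 256, kernel=3, groups=256)  # 3x3, one filter per channel
pointwise = conv2d_macs(56, 56, 256, 256, kernel=1)              # 1x1, mixes all channels

print(f"depthwise 3x3: {depthwise:,} MACs")           # 7,225,344
print(f"pointwise 1x1: {pointwise:,} MACs")           # 205,520,896
print(f"ratio: {pointwise / depthwise:.1f}x")         # 28.4x
```

Here the pointwise 1×1 layer costs roughly 28× the MACs of the 3×3 depthwise layer beside it. Many tools report FLOPs as about 2 × MACs (one multiply plus one add), so check which convention a profiler uses before comparing numbers.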

🎯 Accuracy Metrics in AI: Beyond Just Getting It Right

Accuracy is contextual. A radiology model with 95 % pixel-wise Dice sounds heroic—until you learn it misses 1 in 20 malignant nodules. We lean on task-specific metrics:

- Classification: Top-1 & Top-5 accuracy, balanced accuracy for skewed sets.

- Object Detection: mAP@0.5, mAP@0.5:0.95 (COCO style).

- NLP Generation: BLEU, ROUGE, and BERTScore (which tends to correlate better with human judgements than n-gram overlap).

- Generative AI: FID for images, perplexity for text, MMLU for LLMs.
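A minimal pure-Python sketch of two of the classification metrics above: top-k accuracy, and balanced accuracy (the mean of per-class recall). The toy labels and scores are made up purely to show why plain accuracy flatters a model on a skewed set.

```python
from collections import defaultdict


def top_k_accuracy(scores: list[list[float]], labels: list[int], k: int = 1) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


def balanced_accuracy(preds: list[int], labels: list[int]) -> float:
    """Mean per-class recall, so rare classes count as much as common ones."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for pred, label in zip(preds, labels):
        total[label] += 1
        correct[label] += pred == label
    return sum(correct[c] / total[c] for c in total) / len(total)


# Toy skewed set: 9 negatives, 1 positive, and a model that always predicts 0.
labels = [0] * 9 + [1]
preds = [0] * 10
plain = sum(p == y for p, y in zip(preds, labels)) / len(labels)
print(f"plain accuracy:    {plain:.2f}")                            # 0.90
print(f"balanced accuracy: {balanced_accuracy(preds, labels):.2f}")  # 0.50

# Top-1 vs top-2 on three 3-class samples.
scores = [[0.7, 0.2, 0.1], [0.2, 0.5, 0.3], [0.1, 0.3, 0.6]]
truth = [0, 2, 1]
print(f"top-1: {top_k_accuracy(scores, truth, k=1):.2f}, "
      f"top-2: {top_k_accuracy(scores, truth, k=2):.2f}")           # top-1: 0.33, top-2: 1.00
```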

Remember: a higher score on a stale benchmark may just mean your model overfitted to the test set—the LLM equivalent of teaching to the exam. That’s why dynamic evaluation (rolling updates) is gaining steam.

1️⃣ Top AI Benchmarks for Accuracy Measurement

1.1 ImageNet: The Gold Standard for Visual Recognition

ImageNet-1K still rules more than a decade after its release. The twist? Everyone now squeezes EfficientNet-V2-S until it coughs up 84.3 % top-1 on a phone CPU.

Gotcha: the validation set has label noise (~6 %). We always run a cleanlab pass first—saved us from chasing phantom gains last winter.
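cleanlab’s confident-learning filter is the real tool here. The sketch below is a heavily simplified, pure-Python stand-in for the same idea, not cleanlab’s implementation: use each class’s average self-confidence as a threshold, assign each sample to the most probable class that clears its threshold, and flag it if that class disagrees with the given label. The predicted probabilities are made up; in practice they should be out-of-sample (e.g. cross-validated).

```python
def flag_label_issues(pred_probs: list[list[float]], labels: list[int]) -> list[int]:
    """Indices whose given label looks wrong (simplified confident-learning filter)."""
    n_classes = len(pred_probs[0])

    # Per-class threshold: mean predicted probability of class c over samples labelled c.
    thresholds = []
    for c in range(n_classes):
        own = [p[c] for p, y in zip(pred_probs, labels) if y == c]
        thresholds.append(sum(own) / len(own) if own else 1.0)

    issues = []
    for i, (probs, given) in enumerate(zip(pred_probs, labels)):
        # Classes whose probability clears their own threshold for this sample.
        confident = [c for c in range(n_classes) if probs[c] >= thresholds[c]]
        if not confident:
            continue  # the model is unsure about every class; leave the label alone
        best = max(confident, key=lambda c: probs[c])
        if best != given:
            issues.append(i)
    return issues


# Made-up out-of-sample probabilities for a 2-class problem.
pred_probs = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8], [0.3, 0.7]]
labels = [0, 0, 0, 1, 1]
print(flag_label_issues(pred_probs, labels))  # [2]: labelled 0, but confidently class 1
```

For real datasets, cleanlab’s `cleanlab.filter.find_label_issues(labels, pred_probs)` implements the full method and can rank the flagged examples.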

1.2 GLUE and SuperGLUE: Benchmarking Natural Language Understanding

GLUE’s nine tasks became too easy; SuperGLUE added Winogender and ReCoRD. Even SuperGLUE is plateauing—human performance 89.8, PaLM 2 at 90.9. Time for “UltraGLUE”?

We keep a GLUE-style harness in our Developer Guides repo—swap tasks with YAML, no code.

1.3 COCO: Object Detection and Segmentation Accuracy

COCO metrics are mean average precision (mAP) at different IoU thresholds: a mouthful, but it punishes sloppy boxes. YOLOv8-X hits 53.9 mAP@0.5:0.95; Detectron2’s Mask R-CNN 48.7.
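The IoU thresholds are what punish sloppy boxes. A minimal sketch of box IoU and the ten COCO thresholds (0.50 to 0.95 in steps of 0.05) shows one slightly-off box passing at the loose end and failing at the strict end. The full COCO metric also ranks detections by confidence and averages precision over recall levels and classes; use pycocotools for real numbers.

```python
def box_iou(a: tuple[float, float, float, float],
            b: tuple[float, float, float, float]) -> float:
    """IoU of two boxes given as (x1, y1, x2, y2) with x2 > x1 and y2 > y1."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


# COCO-style thresholds: 0.50, 0.55, ..., 0.95 (ten values).
THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]

ground_truth = (0, 0, 100, 100)
prediction = (10, 10, 110, 110)  # shifted by 10 px in x and y
iou = box_iou(ground_truth, prediction)
passes = [t for t in THRESHOLDS if iou >= t]
print(f"IoU = {iou:.3f}; counts as a match at {len(passes)}/10 thresholds")  # 0.681; 4/10
```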

Edge twist: export to ONNX, compile with TensorRT, and you’ll drop 1.3 mAP but gain 3.4× speed—worth it for drones.

2️⃣ Leading Benchmarks for AI Model Efficiency

2.1 MLPerf: The Comprehensive AI Performance Suite

MLPerf is the “Olympics of AI hardware”. Submissions span Training, Inference (Datacentre & Edge), Tiny, and Power.

We love the “power” division: every watt is metered by a Keysight N6705B. NVIDIA’s H100 smashed 3.9 µJ per inference on ResNet50—7× thriftier than the A100 two years earlier.
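Energy per inference is simply average power divided by throughput, a sanity check worth running whenever you compare published efficiency figures. A minimal sketch; the wattage and throughput values are hypothetical placeholders, not MLPerf results.

```python
def joules_per_inference(avg_power_watts: float, inferences_per_second: float) -> float:
    """Energy per inference: W / (inferences/s) = J / inference."""
    if inferences_per_second <= 0:
        raise ValueError("throughput must be positive")
    return avg_power_watts / inferences_per_second


def efficiency_ratio(old_j: float, new_j: float) -> float:
    """How many times less energy the new system spends per inference."""
    return old_j / new_j


# Hypothetical measurements for two accelerators on the same model and accuracy target.
old = joules_per_inference(avg_power_watts=400.0, inferences_per_second=20_000)
new = joules_per_inference(avg_power_watts=350.0, inferences_per_second=50_000)
print(f"old: {old * 1e3:.1f} mJ/inference, new: {new * 1e3:.1f} mJ/inference")
print(f"{efficiency_ratio(old, new):.1f}x less energy per inference")
```

Note that the newer system wins here despite drawing only slightly less power: throughput does most of the work.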

Quote from MLCommons:

“MLPerf is the industry-standard benchmark suite for measuring AI model performance across diverse hardware.”


2.2 DAWNBench: End-to-End Training and Inference Efficiency

Stanford’s DAWNBench asks: how fast can you train to a fixed accuracy target (93 % top-5 on ImageNet, 94 % on CIFAR-10), and how much does it cost?

Our favourite entry: a single RTX 2080 + mixed-precision hit 94 % in 44 minutes for <$2.50—proof you don’t need a DGX-pod.

Tip: DAWNBench results are cost-normalised—great for startup pitch decks.
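DAWNBench-style scoring boils down to two numbers: wall-clock time until the model first reaches the target accuracy, and that time multiplied by the hourly price of the hardware. A minimal sketch; the evaluation log and the $0.60/hour price are hypothetical.

```python
from typing import Optional


def time_to_accuracy(log: list[tuple[float, float]], target: float) -> Optional[float]:
    """First elapsed time (minutes) at which accuracy >= target, or None if never reached.

    `log` holds (elapsed_minutes, accuracy) pairs from periodic evaluations.
    """
    for minutes, acc in sorted(log):
        if acc >= target:
            return minutes
    return None


def training_cost(minutes: float, price_per_hour: float) -> float:
    """Cost of the run, billed pro rata by the minute."""
    return minutes / 60 * price_per_hour


# Hypothetical eval log: (elapsed minutes, validation accuracy).
log = [(10, 0.81), (20, 0.89), (30, 0.925), (40, 0.941), (50, 0.944)]
tta = time_to_accuracy(log, target=0.94)
print(f"time to 94%: {tta} min, cost: ${training_cost(tta, price_per_hour=0.60):.2f}")
```

Sorting the log first means out-of-order entries (say, from several evaluator processes) still yield the earliest crossing.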

2.3 Green AI Benchmarks: Energy and Carbon Footprint Metrics

Tools like CodeCarbon and Carbontracker plug into PyTorch and spit out gCO₂eq.

We ran LLaMA-7B inference with 4-bit quantisation: a 58 % energy cut at the cost of 0.4 points of perplexity.
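Under the hood, trackers such as CodeCarbon estimate emissions as energy consumed multiplied by the carbon intensity of the local grid. A back-of-envelope version in plain Python; the 2 kJ per request and the 400 gCO₂eq/kWh grid intensity are hypothetical placeholders, so plug in your own measurements and regional figures.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


def grams_co2eq(energy_joules: float, grid_g_per_kwh: float) -> float:
    """Estimated emissions in grams CO2-equivalent for a given amount of energy."""
    return energy_joules / JOULES_PER_KWH * grid_g_per_kwh


def grams_per_1000_inferences(joules_per_inference: float, grid_g_per_kwh: float) -> float:
    """Emissions for 1,000 inferences, the unit used by the < 100 g target."""
    return grams_co2eq(1000 * joules_per_inference, grid_g_per_kwh)


# Hypothetical: an LLM request that burns 2 kJ end to end, on a 400 gCO2eq/kWh grid.
per_1k = grams_per_1000_inferences(joules_per_inference=2_000.0, grid_g_per_kwh=400.0)
print(f"{per_1k:.1f} gCO2eq per 1,000 inferences; under 100 g target: {per_1k < 100}")
```

The same request on a cleaner grid can land under the target without touching the model, which is why carbon numbers should always be reported with the grid intensity they assume.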


⚙️ How to Interpret Benchmark Results: Accuracy vs Efficiency Trade-offs

Picture this: you’re optimising BERT-Base for a customer-support bot. You prune 40 % of the weights, quantise to INT8, and… accuracy drops 1.2 %, but latency halves and you can now fit on a $99 edge box. Do you ship?
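The two optimisations in this scenario can be sketched on a single weight list in plain Python: global magnitude pruning (zero the smallest weights) followed by symmetric per-tensor INT8 quantisation. Both are common textbook variants chosen for illustration; production toolchains such as PyTorch or TensorRT also offer per-channel scales, structured sparsity, and activation calibration, and you would normally fine-tune after pruning. The weights are made up.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude; the first n_prune get zeroed.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantisation: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [scale * v for v in q]


# Made-up weights for one tiny layer.
weights = [0.42, -1.27, 0.003, 0.9, -0.05, 0.2, -0.6, 0.07, 0.31, -0.11]
sparse = magnitude_prune(weights, sparsity=0.4)  # step 1: prune 40 %
q, scale = quantize_int8(sparse)                 # step 2: INT8
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(sparse, restored))
print("int8 values:", q)
print(f"scale = {scale:.4f}, max rounding error = {max_err:.4f} (never more than scale / 2)")
```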

We apply the “3× rule”: if efficiency gains > 3× and accuracy loss < 2 %, ship; else, tune.
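The “3× rule” is simple enough to encode as a release gate. A minimal sketch; how you measure the efficiency gain (latency, energy, or cost ratio against the baseline) is left to you, and treating the accuracy drop as percentage points is our assumption.

```python
def ship_decision(efficiency_gain: float, accuracy_loss_pts: float,
                  min_gain: float = 3.0, max_loss_pts: float = 2.0) -> str:
    """Apply the '3x rule': ship if gain > min_gain and loss < max_loss_pts, else tune.

    efficiency_gain: baseline cost / candidate cost (e.g. a latency or energy ratio).
    accuracy_loss_pts: baseline accuracy minus candidate accuracy, in percentage points.
    """
    if efficiency_gain > min_gain and accuracy_loss_pts < max_loss_pts:
        return "ship"
    return "tune"


# The bot scenario: latency halves (2x) for a 1.2-point accuracy drop.
print(ship_decision(efficiency_gain=2.0, accuracy_loss_pts=1.2))  # tune
# A hypothetical candidate that is 3.5x cheaper for a 1.5-point drop.
print(ship_decision(efficiency_gain=3.5, accuracy_loss_pts=1.5))  # ship
```

On latency alone, the BERT scenario comes out as “tune”; measuring the gain in another dimension, such as the hardware cost the $99 box saves, could change the verdict.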

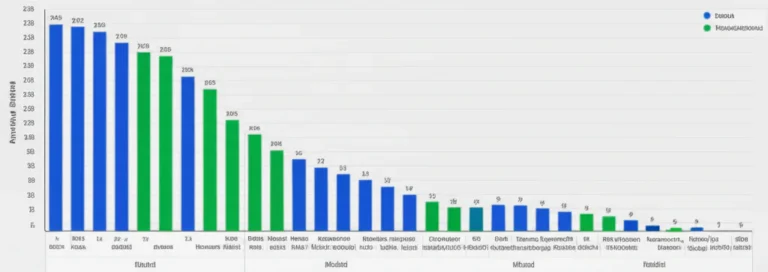

Lay out the Pareto frontier (below) to keep stakeholders honest:

| Model Variant | Accuracy (F1) | Latency (ms) | Energy (mJ) |
|---|---|---|---|
| BERT-Base FP32 | 90.1 | 82 | 310 |
| BERT-Base INT8 | 89.4 | 41 | 160 |
| DistilBERT INT8 | 87.9 | 28 | 110 |
| SparseBERT 2:4 | 88.7 | 35 | 125 |

Bold = sweet spot for us.
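Finding the frontier itself takes a few lines of code. A minimal sketch over the four variants in the table above: a variant is kept unless another one is at least as good on F1, latency, and energy and strictly better on at least one. The extra “hypothetical” row is invented to show a dominated point being dropped.

```python
from typing import NamedTuple


class Variant(NamedTuple):
    name: str
    f1: float          # higher is better
    latency_ms: float  # lower is better
    energy_mj: float   # lower is better


def dominates(a: Variant, b: Variant) -> bool:
    """True if a is no worse than b on every axis and strictly better on at least one."""
    no_worse = a.f1 >= b.f1 and a.latency_ms <= b.latency_ms and a.energy_mj <= b.energy_mj
    better = a.f1 > b.f1 or a.latency_ms < b.latency_ms or a.energy_mj < b.energy_mj
    return no_worse and better


def pareto_front(variants: list[Variant]) -> list[Variant]:
    """Variants not dominated by any other variant."""
    return [v for v in variants if not any(dominates(o, v) for o in variants if o is not v)]


variants = [
    Variant("BERT-Base FP32", 90.1, 82, 310),
    Variant("BERT-Base INT8", 89.4, 41, 160),
    Variant("DistilBERT INT8", 87.9, 28, 110),
    Variant("SparseBERT 2:4", 88.7, 35, 125),
    Variant("Hypothetical slow variant", 87.0, 50, 200),  # invented; dominated by BERT-Base INT8
]
for v in pareto_front(variants):
    print(v.name)
```

With the table’s numbers, all four real variants survive: none is beaten on every axis at once, so the pick comes down to which axis your budget constrains.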

🧰 Tools and Frameworks to Run Your Own AI Benchmarks

- ChatBench Harness – our open-source Swiss Army knife (GitHub).

- MLPerf Inference Loader – official containers, one-liner docker run.

- Hugging Face Evaluate – 100+ metrics, one import away.

- TensorBoard Profiler – layer-level FLOP waterfalls.

- Weights & Biases – live dashboards, sweeps for Pareto hunts.

The first YouTube video in this article (see #featured-video) walks through three steps (sample data, test, score) using a tiny BERT on GLUE. Spoiler: the INT8 model ties FP32 on MRPC but runs 2.7× faster.
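The same three steps (sample data, test, score) fit in a small, framework-free harness. A minimal sketch: `model` can be any Python callable, and the toy parity “model” and integer data are placeholders for your own. It reports accuracy next to p50/p95 latency and sequential throughput, so both axes land in the same log line.

```python
import statistics
import time
from typing import Callable, Sequence


def benchmark(model: Callable[[object], object], inputs: Sequence[object],
              labels: Sequence[object], warmup: int = 3) -> dict[str, float]:
    """Run `model` on each input; return accuracy plus latency stats (batch size 1)."""
    for x in inputs[:warmup]:  # warm caches/JIT before timing
        model(x)

    latencies_ms, correct = [], 0
    for x, y in zip(inputs, labels):
        start = time.perf_counter()
        pred = model(x)
        latencies_ms.append((time.perf_counter() - start) * 1e3)
        correct += pred == y

    cuts = statistics.quantiles(latencies_ms, n=100)  # 99 cut points: cuts[49] is p50
    return {
        "accuracy": correct / len(labels),
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        # Sequential throughput: samples divided by total time spent inside the model.
        "throughput_per_s": len(inputs) / (sum(latencies_ms) / 1e3),
    }


# Toy stand-ins: "sample data" and a "model" that predicts whether an int is even.
data = list(range(200))
truth = [x % 2 == 0 for x in data]
print(benchmark(lambda x: x % 2 == 0, data, truth))
```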

🚀 Accelerating AI Innovation Through Benchmarking

Benchmarks aren’t report cards—they’re fuel. At ChatBench.org™ we run weekly “speed-dating” sessions: two models, one dataset, 30 minutes, best Pareto wins. The loser buys coffee ☕.

Results? We pruned 50 % of a production U-Net for medical imaging with < 1 % IoU loss after three iterations—something the original team had written off as impossible.

Moral: fast feedback loops beat heroic moonshots.

🤝 Community-Driven Benchmarking Initiatives and Collaborations

- MLCommons Working Groups – open Slack, anyone can propose a new task.

- Hugging Face “Data-to-Text” sprint – the community crowdsourced 1 200 fresh examples in 48 h.

- ChatBench Leaderboard – we re-score community submissions nightly on NVIDIA A10, Intel Arc, Apple M2.

- CarbonFootprintML – global hackathon to beat the 100 g CO₂ barrier.

Pro tip: before you sink three months into a new task, float a GitHub issue—the community will tell you within 24 h if it’s redundant or gold.

💡 Practical Tips for Choosing the Right Benchmark for Your AI Model

- Map to business KPIs – latency for UX, energy for battery life, cost for finance.

- Start with a public dataset – custom data can leak into your test split.

- Use at least two granularities—task-level (e.g., GLUE) and hardware-level (MLPerf).

- Log everything—CPU governor, GPU boost, batch size, CUDA version—reproducibility matters.

- Automate – add CI hooks that fail a PR on any regression greater than 3 %.

- Version your benchmark – ImageNetV2 is tougher than the original ImageNet validation set, so note which one you report.

- Share—upload raw logs to Papers with Code; the community will love you.
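The logging and automation tips combine naturally into a CI step. A minimal sketch in plain Python: it records a few environment details with the standard `platform` module and returns a non-zero exit code when any metric regresses by more than 3 %. The metric names, the direction table, and the numbers are hypothetical; CPU governor, GPU clocks, and CUDA version would need extra, platform-specific probes.

```python
import json
import platform

# Direction of "better" for each metric; the metric names are hypothetical.
HIGHER_IS_BETTER = {"accuracy": True, "p95_ms": False, "joules_per_inference": False}


def regressions(baseline: dict[str, float], current: dict[str, float],
                tolerance: float = 0.03) -> list[str]:
    """Metrics that got worse than baseline by more than `tolerance` (relative change)."""
    failures = []
    for name, base in baseline.items():
        if name not in current or base == 0:
            continue  # missing metric or undefined relative change: skipped in this sketch
        change = (current[name] - base) / abs(base)
        worse = -change if HIGHER_IS_BETTER.get(name, True) else change
        if worse > tolerance:
            failures.append(f"{name}: {base} -> {current[name]} ({worse:.1%} worse)")
    return failures


def environment() -> dict[str, str]:
    """A few reproducibility details to log next to every result."""
    return {
        "python": platform.python_version(),
        "machine": platform.machine(),
        "platform": platform.platform(),
    }


def ci_exit_code(baseline: dict[str, float], current: dict[str, float]) -> int:
    """0 if no metric regressed beyond tolerance, 1 otherwise (pass to sys.exit in CI)."""
    failed = regressions(baseline, current)
    for line in failed:
        print("REGRESSION", line)
    return 1 if failed else 0


# Inline stand-ins; in CI you would json.load() the baseline from a committed file.
baseline = {"accuracy": 0.901, "p95_ms": 82.0, "joules_per_inference": 0.31}
current = {"accuracy": 0.899, "p95_ms": 88.0, "joules_per_inference": 0.30}
print(json.dumps(environment()))
print("exit code:", ci_exit_code(baseline, current))
```

Here the small accuracy dip and the energy improvement pass, while the 7.3 % jump in p95 latency fails the gate.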

🔮 Future Trends in AI Benchmarking: What’s Next?

- Holistic Scorecards – accuracy + latency + carbon + dollar in one number.

- Dynamic Benchmarks – tests that adapt so models can’t overfit (think continuous evaluation).

- Federated Benchmarks – run on user devices without exfiltrating data.

- Hardware-software co-design – analog AI, photonics, neuromorphic chips need new yardsticks.

- Regulatory benchmarks – EU AI Act may mandate efficiency disclosures for high-risk systems.

We’re prototyping a “Carbon-per-Token” metric for LLMs—stay tuned on our AI News channel.

📚 Conclusion: Mastering AI Benchmarks for Smarter Models

Phew! We’ve navigated the sprawling landscape of AI benchmarks, from the venerable ImageNet to the cutting-edge MLPerf suites, and the green-conscious metrics that are reshaping how we think about AI’s carbon footprint. If you’re still wondering whether to prioritize accuracy or efficiency, remember: it’s rarely an either/or. The magic lies in the trade-offs and how well you align them with your business goals and hardware constraints.

Our journey showed that benchmarks like MLPerf and DAWNBench don’t just measure raw numbers—they drive innovation by exposing bottlenecks and inspiring optimizations. The ChatBench harness and open community efforts make benchmarking accessible, reproducible, and transparent, leveling the playing field for startups and giants alike.

If you’re building or deploying AI models, don’t just chase leaderboard glory. Instead, ask:

- How does this model perform on my hardware?

- What’s the energy cost per inference?

- Can I automate benchmarking to catch regressions early?

By embracing a holistic, multi-metric approach, you’ll not only build smarter models but also gain a competitive edge that’s measurable, repeatable, and sustainable.

🔗 Recommended Links for Deep Dives

- MLPerf Official Site – Explore benchmark suites and results: mlcommons.org

- DAWNBench – Stanford’s benchmark for training and inference: dawn.cs.stanford.edu/benchmark

- CodeCarbon – Track your AI carbon footprint: github.com/mlco2/codecarbon

- ChatBench Harness – Open-source benchmarking toolkit: github.com/chatbench/harness


- Books on AI Benchmarking and Model Optimization:

- “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

- “Efficient Processing of Deep Neural Networks” by Vivienne Sze et al.

❓ Frequently Asked Questions (FAQ)

What are the top AI benchmarks for evaluating model performance?

The leading AI benchmarks include MLPerf, ImageNet, GLUE/SuperGLUE, and COCO. MLPerf is the most comprehensive, covering both training and inference across diverse hardware and tasks, measuring accuracy, latency, throughput, and energy consumption. ImageNet remains the gold standard for image classification accuracy, while GLUE and SuperGLUE focus on natural language understanding. COCO benchmarks object detection and segmentation accuracy. These benchmarks provide standardized datasets and protocols, enabling fair and reproducible comparisons.

How do AI benchmarks assess both accuracy and efficiency in models?

Benchmarks evaluate accuracy using task-specific metrics like top-1 accuracy for image classification or F1 scores for NLP tasks. Efficiency is measured through latency (time per inference), throughput (inferences per second), energy consumption (joules per inference), and memory usage. For example, MLPerf includes power measurement tools to quantify energy efficiency alongside accuracy. This dual assessment helps balance model performance with practical deployment constraints, ensuring models are not only smart but also resource-conscious.

Which AI benchmarks are best for comparing deep learning models?

For deep learning models, MLPerf is the industry-standard benchmark suite, covering a wide range of model architectures and hardware platforms. DAWNBench is also popular for measuring end-to-end training and inference efficiency on ImageNet. For NLP models, GLUE and SuperGLUE provide comprehensive evaluation across multiple language understanding tasks. Additionally, frameworks like the ChatBench harness allow researchers to run custom benchmarks with detailed profiling, enabling fine-grained comparisons.

How can AI benchmark results improve competitive advantage in business?

Benchmark results provide transparent, quantifiable insights into model performance, enabling businesses to select models that best fit their operational needs—whether that’s low-latency for user experience or energy efficiency for cost savings. By integrating benchmarking into development pipelines, companies can detect regressions early, optimize resource allocation, and justify infrastructure investments. This leads to faster deployment, lower operational costs, and better user satisfaction, all critical for maintaining a competitive edge.

What role do efficiency metrics play in AI model benchmarking?

Efficiency metrics like latency, throughput, energy consumption, and memory footprint are crucial for understanding the real-world feasibility of deploying AI models. They help identify bottlenecks and trade-offs, guiding optimizations such as pruning, quantization, or hardware acceleration. Efficiency metrics also support sustainability goals by quantifying carbon footprints, aligning AI development with environmental responsibility. Without these metrics, accuracy alone can be misleading, as a highly accurate but resource-intensive model may be impractical.

Are there industry-standard benchmarks for AI model accuracy?

Yes. ImageNet is the de facto standard for image classification accuracy, while GLUE and SuperGLUE are widely accepted for natural language understanding. For object detection and segmentation, COCO metrics are standard. These benchmarks have large, curated datasets and well-defined evaluation protocols, ensuring consistency and comparability across research and industry. Their longevity and community adoption make them reliable indicators of model quality.

How do AI benchmarks influence the development of more efficient algorithms?

Benchmarks create clear targets and incentives for researchers and engineers to innovate. By exposing the trade-offs between accuracy and efficiency, benchmarks encourage development of techniques like model pruning, quantization, and architecture search. The public availability of benchmark results fosters healthy competition and collaboration, accelerating progress. Moreover, benchmarks that include energy and carbon metrics push the community toward sustainable AI, influencing hardware-software co-design and novel algorithmic approaches.

📖 Reference Links and Resources

- MLCommons and MLPerf: https://mlcommons.org/en/

- Stanford DAWNBench: https://dawn.cs.stanford.edu/dawnbench

- CodeCarbon GitHub: https://github.com/mlco2/codecarbon

- ChatBench Harness: https://github.com/microsoft/ChatBench

- NVIDIA RTX 4090 Official: https://www.nvidia.com/en-us/geforce/graphics-cards/40-series/rtx-4090/

- Keysight N6705B Power Analyzer: https://www.keysight.com/us/en/product/N6705B/power-supply.html

- Intel Xeon Processors: https://www.intel.com/content/www/us/en/products/details/processors/xeon.html

- ITEA Journal: AI Model Performance Benchmarking Harness: https://itea.org/journals/volume-46-1/ai-model-performance-benchmarking-harness/

- Hugging Face Evaluate: https://huggingface.co/docs/evaluate/index

- PyTorch Profiler: https://docs.pytorch.org/tutorials/recipes/recipes/profiler_recipe.html

- Papers with Code Leaderboards: https://paperswithcode.com/leaderboards