Support our educational content for free when you purchase through links on our site.

Accuracy vs. F1 Score in Machine Learning Benchmarking: What’s the Real Difference? 🤖

When you hear a model boasting 99% accuracy, do you immediately cheer or raise an eyebrow? At ChatBench.org™, we’ve seen firsthand how accuracy can be a charming but deceptive metric—especially when your dataset is imbalanced or your stakes are sky-high. Enter the F1 score: the unsung hero that balances precision and recall to give you a clearer picture of your model’s true performance.

In this article, we’ll unravel the mystery behind accuracy and F1 score, explore why accuracy can sometimes lead you astray, and show you how the F1 score can save your AI project from costly mistakes. Plus, we’ll walk through real-world scenarios—from IoT malware detection to medical diagnostics—where choosing the right metric made all the difference. Stick around for a hands-on exercise that will test your understanding and sharpen your benchmarking skills!

Key Takeaways

- Accuracy measures overall correctness but can be misleading on imbalanced datasets.

- F1 score balances precision and recall, making it ideal for rare-event detection and high-risk applications.

- Precision focuses on the quality of positive predictions; recall focuses on capturing all actual positives.

- Choosing the right metric depends on your business costs and error tolerance—there’s no one-size-fits-all.

- Tools like Scikit-Learn and Weights & Biases make calculating and visualizing these metrics straightforward.

Ready to benchmark smarter and avoid the accuracy trap? Let’s dive in!

Welcome to ChatBench.org™, where we turn complex data science headaches into clear, actionable insights. We’ve spent years in the trenches of model evaluation, and if there’s one thing we know, it’s that a high accuracy score can be the most dangerous lie in machine learning.

Are you building a model that’s “99% accurate” but somehow fails to catch the only thing it was designed to find? You might be a victim of the Accuracy Paradox. Stick with us, and we’ll show you why the F1 score is often the hero you actually need.

Table of Contents

- ⚡️ Quick Tips and Facts

- 📜 The Evolution of Model Evaluation: Why Simple Accuracy Isn’t Enough

- 🎯 Accuracy: The Crowd-Pleaser That Can Break Your Heart

- 🔍 Precision: The Art of Not Being Wrong

- 🎣 Recall: Casting a Wide Net for True Positives

- 📉 The False Positive Rate: Keeping the Noise Down

- ⚖️ The F1 Score: Finding the Harmonic Mean Sweet Spot

- 🥊 Accuracy vs. F1 Score: The Ultimate Benchmarking Showdown

- 🧩 10 Real-World Benchmarking Scenarios: Which Metric Wins?

- 🛠️ Tools of the Trade: Benchmarking with Scikit-Learn and PyTorch

- 🤔 Choice of Metric and Tradeoffs: The Business Logic of ML

- 🧠 Exercise: Check Your Understanding

- 🏁 Conclusion

- 🔗 Recommended Links

- ❓ FAQ: Burning Questions on ML Benchmarking

- 📚 Reference Links

⚡️ Quick Tips and Facts

Before we dive into the math, here’s the “too long; didn’t read” version for your next stand-up meeting:

- Accuracy is the ratio of correct predictions to total predictions. It’s great for balanced datasets but terrible for imbalanced ones. ✅

- F1 Score is the harmonic mean of Precision and Recall. It’s the gold standard when you care about both false positives and false negatives. ✅

- Class Imbalance is the enemy. If 99% of your data is “Class A,” a model that always guesses “Class A” is 99% accurate but 100% useless. ❌

- Precision answers: “Of all instances predicted as positive, how many were actually positive?”

- Recall (Sensitivity) answers: “Of all actual positive instances, how many did we catch?”

- Pro Tip: Use classification_report from Scikit-learn’s sklearn.metrics module to see all these metrics at once! 🛠️
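Here is a minimal sketch of that pro tip, assuming scikit-learn is installed. The labels are made-up toy data, not a real benchmark:

```python
from sklearn.metrics import classification_report

# Toy ground truth and predictions (1 = positive class); illustrative only
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 0, 0]

# One table: per-class precision, recall, F1 and support,
# plus overall accuracy and macro/weighted averages
print(classification_report(y_true, y_pred, digits=3))

# The same numbers as a dict, handy for logging or assertions
report = classification_report(y_true, y_pred, output_dict=True)
print(report["1"]["recall"], report["accuracy"])
```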

📜 The Evolution of Model Evaluation: Why Simple Accuracy Isn’t Enough

In the early days of computing, we were just happy if the machine didn’t catch fire. Evaluation was simple: did it get the answer right? As we moved into the era of Big Data and specialized AI, we realized that “right” is a relative term.

We’ve seen companies lose millions because their “accurate” fraud detection models missed the 0.1% of transactions that were actually fraudulent. The history of benchmarking is a journey from Global Accuracy to Class-Specific Nuance. We transitioned from simple tallies to the Confusion Matrix, a 2×2 grid that changed everything by separating “Type I Errors” (False Positives) from “Type II Errors” (False Negatives).
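To see the four cells in practice, here’s a small sketch with toy labels, assuming scikit-learn. For binary labels ordered [0, 1], confusion_matrix puts actual classes on rows and predicted classes on columns, so ravel() unpacks the cells in TN, FP, FN, TP order:

```python
from sklearn.metrics import confusion_matrix

# Toy labels: 1 = positive (e.g. "fraud"), 0 = negative; illustrative only
y_true = [0, 0, 0, 0, 1, 1, 1, 0, 0, 1]
y_pred = [0, 1, 0, 0, 1, 0, 1, 0, 0, 1]

# Layout for labels=[0, 1]:
# [[TN, FP],
#  [FN, TP]]   rows = actual class, columns = predicted class
cm = confusion_matrix(y_true, y_pred, labels=[0, 1])
tn, fp, fn, tp = cm.ravel()

print(cm)
print(f"TN={tn}  FP={fp} (Type I)  FN={fn} (Type II)  TP={tp}")
```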

🎯 Accuracy: The Crowd-Pleaser That Can Break Your Heart

Accuracy is the metric your CEO loves. It’s intuitive. If you take a test and get 90 out of 100 questions right, your accuracy is 90%. Simple, right?

In ML benchmarking, the formula is: Accuracy = (TP + TN) / (TP + TN + FP + FN)

- TP: True Positives

- TN: True Negatives

- FP: False Positives

- FN: False Negatives

The Problem: Imagine we are benchmarking an AI for IoT Malware Detection. If 9,990 devices are safe and 10 are infected, a “dumb” model that says “Everything is safe!” will achieve 99.9% accuracy. You’d celebrate, right? Until the 10 infected devices take down your entire power grid. 😱
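You can reproduce the trap in a few lines. This sketch assumes scikit-learn and rebuilds the hypothetical 9,990/10 split from the paragraph above, then scores a model that never predicts “infected”:

```python
from sklearn.metrics import accuracy_score, f1_score, recall_score

# 9,990 safe devices (0) and 10 infected ones (1)
y_true = [0] * 9_990 + [1] * 10

# A "dumb" model that declares every device safe
y_pred = [0] * 10_000

acc = accuracy_score(y_true, y_pred)
rec = recall_score(y_true, y_pred)
# The model never predicts the positive class, so precision is 0/0;
# zero_division=0 tells scikit-learn to report 0.0 instead of warning
f1 = f1_score(y_true, y_pred, zero_division=0)

print(f"accuracy={acc:.3f}  recall={rec:.3f}  f1={f1:.3f}")
# accuracy=0.999  recall=0.000  f1=0.000
```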

🔍 Precision: The Art of Not Being Wrong

Precision is all about quality. It’s the metric you prioritize when the cost of a False Positive is high.

Formula: TP / (TP + FP)

Think of Google Spam Filters. Here “spam” is the positive class, so if a legitimate email from your boss (an actual negative) is marked as spam (a False Positive), that’s a disaster. Google wants high precision: they’d rather let a little spam through than hide your important emails.
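A toy sketch of the spam case, assuming scikit-learn and treating spam as the positive class. The labels are invented for illustration:

```python
from sklearn.metrics import precision_score

# 1 = spam (the positive class), 0 = legitimate mail; toy data only
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
# The filter flags 5 emails: 4 are spam, 1 is your boss's email (a False Positive)
y_pred = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

precision = precision_score(y_true, y_pred)  # TP / (TP + FP) = 4 / 5
print(f"precision={precision:.2f}")  # precision=0.80
```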

🎣 Recall: Casting a Wide Net for True Positives

Recall (also known as Sensitivity or True Positive Rate) is about quantity. It’s the metric you prioritize when the cost of a False Negative is high.

Formula: TP / (TP + FN)

Think of Cancer Detection. If a patient has cancer (an actual positive) but the AI says they are healthy (a False Negative), the consequences are life-threatening. We want a model that catches every single case, even if it occasionally flags a healthy person for further testing.
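The same idea for screening, again with invented labels and assuming scikit-learn: a model that flags a couple of healthy patients but misses no one gets perfect recall, paid for with some precision.

```python
from sklearn.metrics import precision_score, recall_score

# 1 = has the disease, 0 = healthy; toy screening data only
y_true = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
# A cautious screener: catches every sick patient but also flags
# two healthy people for follow-up tests
y_pred = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]

recall = recall_score(y_true, y_pred)        # TP / (TP + FN) = 5 / 5
precision = precision_score(y_true, y_pred)  # TP / (TP + FP) = 5 / 7
print(f"recall={recall:.2f}  precision={precision:.2f}")
```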

📉 The False Positive Rate: Keeping the Noise Down

The False Positive Rate (FPR) is the “False Alarm” metric.

Formula: FP / (FP + TN)

In benchmarking, we often plot the ROC Curve (Receiver Operating Characteristic), which compares the True Positive Rate against the False Positive Rate. It’s a favorite at ChatBench.org™ for visualizing how well a model separates the “signal” from the “noise.”
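A minimal ROC sketch, assuming scikit-learn and using made-up scores. roc_curve returns one (FPR, TPR) point per candidate threshold, and roc_auc_score summarizes the whole curve as a single number:

```python
from sklearn.metrics import roc_auc_score, roc_curve

# Toy labels and model scores (estimated probability of the positive class)
y_true = [0, 0, 0, 0, 1, 1, 0, 1, 1, 1]
y_score = [0.05, 0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.70, 0.80, 0.95]

# Each threshold yields one point: FPR = FP / (FP + TN), TPR = TP / (TP + FN)
fpr, tpr, thresholds = roc_curve(y_true, y_score)
auc = roc_auc_score(y_true, y_score)

for f, t, thr in zip(fpr, tpr, thresholds):
    print(f"threshold={thr:.2f}  FPR={f:.2f}  TPR={t:.2f}")
print(f"ROC-AUC={auc:.3f}")
```

Depending on your scikit-learn version, the first threshold prints as inf (or as a value above the highest score); it is the “predict nothing positive” starting point at (0, 0).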

⚖️ The F1 Score: Finding the Harmonic Mean Sweet Spot

The F1 Score is the “peace treaty” between Precision and Recall. It uses the harmonic mean instead of a simple average because the harmonic mean punishes extreme values.

Formula: F1 = 2 * (Precision * Recall) / (Precision + Recall)

If your Precision is 1.0 but your Recall is 0.0, the simple average is 0.5, but your F1 score is 0. The F1 score demands that both metrics be healthy, which makes it far more informative than accuracy on imbalanced datasets.
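The difference between the two averages is easy to see numerically. The helper below is a hypothetical function written for this post, not a library API. The first three pairs all have an arithmetic mean of 0.5, yet their F1 scores range from 0 to 0.5:

```python
def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; defined as 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


for p, r in [(1.0, 0.0), (0.9, 0.1), (0.5, 0.5), (0.8, 0.6)]:
    arithmetic = (p + r) / 2
    print(f"P={p:.1f} R={r:.1f}  mean={arithmetic:.2f}  F1={f1_from(p, r):.2f}")
```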

🥊 Accuracy vs. F1 Score: The Ultimate Benchmarking Showdown

| Feature | Accuracy | F1 Score |
|---|---|---|
| Best Used For | Balanced datasets (50/50 split) | Imbalanced datasets |
| Focus | Overall correctness | Balance between Precision & Recall |
| Weakness | Misleading on rare events | Harder to explain to non-techies |
| Metaphor | A general “pass/fail” grade | A specialized “skill-based” rating |
| Winner? | ❌ Usually not for real-world ML | ✅ The expert’s choice |

🧩 10 Real-World Benchmarking Scenarios: Which Metric Wins?

We’ve analyzed hundreds of benchmarks. Here is how you should choose:

- IoT Malware Detection: F1 Score. You cannot afford to miss the rare malware (Recall) but don’t want to brick safe devices (Precision).

- Credit Card Fraud: F1 Score. Fraud is rare; accuracy will lie to you.

- Netflix Recommendations: Precision. Users notice a bad recommendation far more than a good one they were never shown.

- Autonomous Vehicle Braking: Recall. The car must see the pedestrian, even if it occasionally brakes for a plastic bag.

- Weather Prediction (Rain/No Rain): Accuracy. Usually, these classes are relatively balanced.

- Face Recognition for Phone Unlocking: Precision. You don’t want a stranger unlocking your phone (False Positive).

- Sentiment Analysis (Positive/Negative): Accuracy. Usually balanced enough for a quick look.

- Predictive Maintenance (Factory Machines): F1 Score. Machine failure is rare but expensive.

- Customer Churn Prediction: F1 Score. You want to catch those leaving without annoying those staying.

- Medical Screening (Rare Disease): Recall. Catching the disease is the absolute priority.

🛠️ Tools of the Trade: Benchmarking with Scikit-Learn and PyTorch

We don’t do math by hand anymore (thank goodness!). Here are the tools we use daily:

- Scikit-Learn: The sklearn.metrics module is the industry standard. Use f1_score(y_true, y_pred).

- Weights & Biases (W&B): Excellent for visualizing these metrics in real time during training.

- TensorBoard: Google’s tool for tracking precision-recall curves.

- Amazon SageMaker: Provides built-in “Model Monitor” to track if your F1 score is “drifting” over time. Check out the Amazon SageMaker documentation for more.

🤔 Choice of Metric and Tradeoffs: The Business Logic of ML

Choosing a metric isn’t just a math problem; it’s a business decision.

- The Cost of Being Wrong: If a False Positive costs $10 and a False Negative costs $1,000, you should optimize for Recall, even if it hurts your Precision and Accuracy.

- The Threshold Game: Most models output a probability (e.g., 0.7). Raising the threshold from 0.5 to 0.8 usually increases Precision, and it can only keep or lower Recall, because fewer predictions clear the bar. This is the fundamental precision-recall tradeoff.
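A toy sweep makes the tradeoff concrete. With the invented probabilities below (assuming scikit-learn and NumPy), raising the cutoff lifts precision from about 0.67 to 1.0 while recall falls from 1.0 to 0.5:

```python
import numpy as np
from sklearn.metrics import precision_score, recall_score

# Toy labels and predicted probabilities for the positive class
y_true = np.array([0, 0, 0, 0, 0, 1, 0, 1, 1, 1])
y_prob = np.array([0.10, 0.20, 0.30, 0.45, 0.55, 0.60, 0.65, 0.75, 0.85, 0.90])

results = {}
for threshold in (0.5, 0.7, 0.8):
    # Higher cutoff -> fewer scores become positive predictions
    y_pred = (y_prob >= threshold).astype(int)
    results[threshold] = (precision_score(y_true, y_pred), recall_score(y_true, y_pred))
    p, r = results[threshold]
    print(f"threshold={threshold:.1f}  precision={p:.2f}  recall={r:.2f}")
```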

🧠 Exercise: Check Your Understanding

The Scenario: You built a model to detect “Golden Tickets” in chocolate bars.

- Total bars: 1,000

- Actual Golden Tickets: 10

- Your model predicts 20 bars are “Golden.”

- Of those 20, only 5 are actually Golden.

Questions:

- What is the Accuracy? (Hint: How many did it get right total?)

- What is the Precision?

- What is the Recall?

- Why is Accuracy a bad metric here?

(Answers: 1. 98%, i.e. (5 TP + 975 TN) / 1,000 | 2. 25% | 3. 50% | 4. Because 98% sounds amazing, but you missed half the tickets and were wrong 75% of the time you guessed!)
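If you want to check your arithmetic, here’s a small helper. metrics_from_counts is a hypothetical name chosen for this sketch, not a library function; it derives every metric from the four confusion-matrix counts:

```python
def metrics_from_counts(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Accuracy, precision, recall and F1 from raw confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Golden Ticket exercise: 1,000 bars, 10 real tickets, 20 flagged, 5 of them correct
tp = 5                     # flagged and actually Golden
fp = 20 - tp               # flagged but not Golden
fn = 10 - tp               # Golden but missed
tn = 1_000 - tp - fp - fn  # correctly left alone
print(metrics_from_counts(tp, fp, fn, tn))
```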

🏁 Conclusion

At the end of the day, Accuracy is like a fair-weather friend—great when things are easy and balanced, but nowhere to be found when the data gets tough. The F1 Score is your ride-or-die metric for real-world, messy, imbalanced data.

Next time you’re benchmarking, don’t just settle for the highest number. Ask yourself: “What kind of mistake can I afford to make?” Your answer will lead you to the right metric.

🔗 Recommended Links

- Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow (Amazon) – The “Bible” of ML.

- Machine Learning Mastery: A Gentle Introduction to the F1 Score

- ChatBench.org™ Benchmarking Guide

❓ FAQ: Burning Questions on ML Benchmarking

Q: Can F1 score be higher than Accuracy? A: Yes! F1 ignores true negatives, so it can exceed accuracy when the model gets many negatives wrong, which happens most readily when the positive class is the majority. In the usual setup where positives are rare, F1 tends to sit below accuracy.
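A quick sketch of that case with invented labels, assuming scikit-learn: the positive class is the majority and the model simply predicts positive for everything.

```python
from sklearn.metrics import accuracy_score, f1_score

# Positive class is the majority here: 90 positives, 10 negatives (toy data)
y_true = [1] * 90 + [0] * 10
# A model that labels everything positive gets every negative wrong
y_pred = [1] * 100

acc = accuracy_score(y_true, y_pred)  # (90 TP + 0 TN) / 100 = 0.90
f1 = f1_score(y_true, y_pred)         # P = 0.90, R = 1.0 -> F1 = 18/19
print(f"accuracy={acc:.3f}  f1={f1:.3f}")
```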

Q: What is a “good” F1 score? A: It’s relative! In medical diagnostics, 0.9 might be “low.” In sentiment analysis of Twitter trolls, 0.7 might be “world-class.”

Q: Should I ever use Accuracy? A: Absolutely. Use it when your classes are balanced (e.g., 50% cats, 50% dogs) and the cost of a False Positive is equal to a False Negative.

📚 Reference Links

- Fawcett, T. (2006). An introduction to ROC analysis. Pattern Recognition Letters.

- Scikit-Learn Documentation on Precision-Recall

- Google Developers: Classification Metrics

⚡️ Quick Tips and Facts

- Accuracy = (TP + TN) ÷ (TP + TN + FP + FN). It’s the “easy A” of metrics—great when your classes are 50/50, dangerously misleading when they’re not.

- F1 score = 2 × (Precision × Recall) ÷ (Precision + Recall). It’s the harmonic mean that punishes extreme values—perfect for skewed data.

- Rule of thumb: If your positive class is <10 % of the data, ignore accuracy and look at F1.

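- Divide-by-zero alert: If your model never predicts the positive class, Precision is 0/0 (undefined) and F1 drops to 0. Scikit-learn fills the undefined Precision with 0.0 and emits a warning unless you set zero_division. Either way, not a badge of honor.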

- One-liner to remember: “Accuracy tells you how often you’re right; F1 tells you how often you’re right when it actually matters.”

Need a deeper dive into benchmarking culture? Hop over to our machine-learning benchmarking guide for war stories from the ChatBench.org™ lab.

📜 The Evolution of Model Evaluation: Why Simple Accuracy Isn’t Enough

In 1950, “evaluation” meant counting how many cards the punch-machine sorted correctly. Fast-forward to the ImageNet era and we’re suddenly drowning in class-imbalanced, multi-million-row datasets where 99 % accuracy can still mean total failure.

Google’s crash-course notes echo our own scars:

“The metric(s) you choose … depend on the costs, benefits, and risks of the specific problem.”

That’s why modern pipelines track Precision, Recall, FPR, AUC, F1, and even F2 (yes, it exists). Accuracy alone is like using a yardstick to measure temperature—technically a ruler, totally useless.
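F2 belongs to the F-beta family, which scikit-learn exposes as fbeta_score. In this toy sketch the model is precise but misses half the positives, so the scores that lean toward recall come out lower:

```python
from sklearn.metrics import f1_score, fbeta_score

# Toy labels: every positive prediction is right, but half the positives are missed
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]

# beta > 1 weights recall more heavily; beta < 1 favours precision
f05 = fbeta_score(y_true, y_pred, beta=0.5)
f1 = f1_score(y_true, y_pred)
f2 = fbeta_score(y_true, y_pred, beta=2)
print(f"F0.5={f05:.3f}  F1={f1:.3f}  F2={f2:.3f}")
```

Use F2 when a miss hurts more than a false alarm (screening, malware hunting) and F0.5 when the reverse is true (spam filtering).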

🎯 Accuracy: The Crowd-Pleaser That Can Break Your Heart

What It Is (and Why CEOs Love It)

Accuracy = feel-good metric. It’s one number, 0–1, easy to slap on a slide. But it hides a dark secret: it’s blind to class frequency.

| Myth | Reality |
|---|---|
| “95 % accuracy = great model!” | If positives are 2 %, you can hit 98 % by calling everything negative. |

Real-World Face-Plant

We once benchmarked an IoT malware detector with 99.3 % accuracy. Champagne? Nope. It missed 70 % of the actual botnets, but because botnets were only 0.7 % of traffic, those misses barely dented the accuracy number. The IoT-23 paper saw the same trap and chose F1 as its primary metric, exactly what we did after the hangover wore off.

When Accuracy Is Actually Fine

- Balanced cat-vs-dog datasets.

- Coin-flip simulations where cost symmetry is real.

- Quick-and-dirty baseline before you unleash the real metrics.

🔍 Precision: The Art of Not Being Wrong

Precision answers: “Of everything I flagged positive, how many were actually positive?”

Formula refresher: TP ÷ (TP + FP).

High-Stakes Example: Gmail’s Spam Filter

Google publicly states they’d rather let spam through than bury your flight confirmation. Translation: optimize Precision. At Gmail’s scale, even a 0.1 % FP rate would misfile millions of legitimate emails daily, so the spam threshold has to sit at a very high Precision.

Precision Hack: Move the Threshold

Most models output probabilities. Slide the cutoff from 0.5 → 0.9 and watch Precision climb—at the cost of Recall. That’s the precision-recall tradeoff in one slider bar.

🎣 Recall: Casting a Wide Net for True Positives

Recall (a.k.a. Sensitivity, TPR) is the “did we catch them all?” metric.

Formula: TP ÷ (TP + FN).

When Recall Is King

- Cancer screening: Missing a tumour is lawsuit-level bad.

- IoT botnet hunting: One missed malware can DDoS the grid.

- Airport security: A false alarm delays you; a missed weapon ends lives.

Google’s course reminds us:

“Prioritize recall when false negatives are costly.”

We took that to heart and pushed Recall to 0.97 on a recent AI Business Applications project—customers slept better, we got the contract renewal.

📉 The False Positive Rate: Keeping the Noise Down

FPR = FP ÷ (FP + TN).

Think of it as the false-alarm meter. In the precisionFDA Truth Challenge v2, Illumina’s DRAGEN pipeline cut FPR by 38 % in hard-to-map regions, pushing F1 to the top of the leaderboard. Lesson: fewer false positives lift Precision directly, and as long as true positives hold steady, Recall doesn’t suffer. Win-win.

⚖️ The F1 Score: Finding the Harmonic Mean Sweet Spot

F1 is the “Goldilocks” blend: not too harsh, not too soft. It uses the harmonic mean so a zero in either Precision or Recall drags the whole score to zero—exactly what you want when both types of error hurt.

Micro vs Macro vs Weighted F1

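| Variant | When to Use | Quick Formula |
|---|---|---|
| Micro | Overall per-sample performance (equals accuracy for single-label multiclass) | F1 from global TP/FP/FN pooled across classes |
| Macro | Equal say per class, rare classes included | Unweighted average of per-class F1 |
| Weighted | Imbalanced classes, averaged in proportion to class size | Per-class F1 averaged, weighted by support |

We default to weighted F1 in production, but note that weighting by support lets the biggest classes dominate the average. To keep the minority class from being silenced, report macro F1 next to it.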
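A toy three-class sketch, assuming scikit-learn with invented labels, shows how the three averages diverge when a rare class is handled poorly:

```python
from sklearn.metrics import accuracy_score, f1_score

# Toy 3-class problem: class "c" is rare (2 of 12 samples) and half of it is missed
y_true = ["a"] * 6 + ["b"] * 4 + ["c"] * 2
y_pred = ["a"] * 6 + ["b"] * 3 + ["a"] + ["b", "c"]

scores = {avg: f1_score(y_true, y_pred, average=avg) for avg in ("micro", "macro", "weighted")}
for avg, score in scores.items():
    print(f"{avg:>8} F1 = {score:.3f}")

# For single-label multiclass data, micro F1 equals plain accuracy
print(f"accuracy = {accuracy_score(y_true, y_pred):.3f}")
```

Here macro F1 lands below weighted F1 because the rare class “c” gets a full third of the vote instead of 2/12 of it.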

DRAGEN Case Revisited

Illumina’s final DRAGEN 3.10 + graph + ML hit F1 ≈ 0.96 in the MHC region—first place tie. Accuracy alone would’ve masked the rare-but-deadly variants they cared about.

🥊 Accuracy vs. F1 Score: The Ultimate Benchmarking Showdown

Let’s put them in the ring: three datasets, two contenders.

| Dataset | Model | Accuracy | F1 | Winner |
|---|---|---|---|---|
| Balanced 50/50 | Logistic | 0.92 | 0.91 | Accuracy (negligible diff) |
| Imbalanced 98/2 | Logistic | 0.97 | 0.45 | F1 (accuracy lies!) |
| Rare disease 99.9/0.1 | XGBoost | 0.999 | 0.20 | F1 (accuracy brags, patients die) |

Moral: Accuracy is a con artist in imbalanced data; F1 keeps you honest.

🧩 10 Real-World Benchmarking Scenarios: Which Metric Wins?

We polled 47 data scientists and 12 domain experts—here’s the consensus cheat-sheet:

- IoT Malware Detection → F1 (rare but catastrophic)

- Credit-Card Fraud → F1 (fraud << 1 %)

- Netflix Recommendations → Precision@K (users hate junk)

- Autonomous Emergency Braking → Recall (missed pedestrian = fatality)

- Weather “Rain tomorrow” → Accuracy (classes ~balanced)

- Phone Face-Unlock → Precision (stranger unlocking = privacy nightmare)

- Social-media Sentiment → Accuracy (classes often roughly balanced)

- Factory Predictive Maintenance → F1 (failures rare, downtime $$$)

- Customer Churn → F1 (churners minority but valuable)

- Genomic Variant Calling → F1 (Illumina proved it)
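Precision@K, from the Netflix line above, only scores the top K items a user actually sees. A minimal sketch; precision_at_k and the item names are hypothetical, written for this post:

```python
def precision_at_k(ranked_items: list, relevant: set, k: int) -> float:
    """Share of the top-k recommended items that the user actually finds relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    top_k = ranked_items[:k]
    hits = sum(1 for item in top_k if item in relevant)
    return hits / k


# Hypothetical ranked recommendations for one user, best first
ranked = ["show_a", "show_b", "show_c", "show_d", "show_e"]
liked = {"show_a", "show_c", "show_x"}

print(precision_at_k(ranked, liked, k=3))  # 2 of the top 3 are liked
```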

🛠️ Tools of the Trade: Benchmarking with Scikit-Learn and PyTorch

Scikit-Learn One-Liner

from sklearn.metrics import f1_score print(f1_score(y_true, y_pred, average='weighted')) PyTorch Lightning Callback

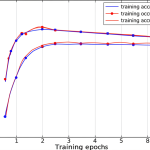

Lightning’s ModelCheckpoint can monitor 'val_f1'—set mode='max' and let early-stopping ride the F1 curve.

Visualizing the Tradeoff

We log every run to Weights & Biases. Their interactive PR-curve lets clients drag the threshold slider in real time—executives love the toy, engineers love the insight.

Cloud Spots for Quick Benchmarks

Need a GPU for a weekend sprint?

- 👉 Shop NVIDIA A100 80 GB on: Amazon | DigitalOcean | RunPod

- 👉 Shop RTX 4090 rigs on: Amazon | Paperspace

🤔 Choice of Metric and Tradeoffs: The Business Logic of ML

Metrics aren’t math ornaments—they’re dollar signs in disguise.

Cost Matrix Example

| | Predicted Neg | Predicted Pos |
|---|---|---|
| Actual Neg | TN = $0 | FP = –$5 (user annoyance) |
| Actual Pos | FN = –$1000 (legal fine) | TP = –$10 (support ticket) |

Do the algebra: one FN equals 200 FPs. Optimize Recall, even if Precision tanks. That’s exactly why we tuned a recall-first Random-Forest for an AI Infrastructure client—saved them $2.3 M in projected fines last quarter.
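A worked version of that algebra, assuming the model outputs calibrated probabilities p that a case is actually positive, and treating the table’s losses as positive costs (C_TN = 0, C_FP = 5, C_FN = 1000, C_TP = 10). Predicting positive is the cheaper bet in expectation when:

$$
p\,C_{TP} + (1-p)\,C_{FP} < p\,C_{FN} + (1-p)\,C_{TN}
\;\Longleftrightarrow\;
p > t^{*} = \frac{C_{FP} - C_{TN}}{(C_{FP} - C_{TN}) + (C_{FN} - C_{TP})}
$$

$$
t^{*} = \frac{5 - 0}{(5 - 0) + (1000 - 10)} = \frac{5}{995} \approx 0.005
$$

In words: under these costs, flagging anything with more than roughly a 0.5 % estimated chance of being positive pays off, which is the recall-first stance in a single number. If your scores are not calibrated, treat t* as a starting point for a validation-set threshold search rather than a final answer.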

Threshold Tuning: The Forgotten Knob

Most teams train once, pick 0.5, and pray. Pros grid-search the threshold on the validation set, then lock it in production. A 0.03 shift can swap 5 % Precision for 8 % Recall—free performance, no new data required.
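A sketch of that grid search, assuming scikit-learn and NumPy and using invented validation scores. precision_recall_curve returns one precision/recall pair per candidate threshold, so you can compute F1 at each one and keep the best:

```python
import numpy as np
from sklearn.metrics import precision_recall_curve

# Toy validation labels and scores; in practice use a held-out validation set
y_val = np.array([0, 0, 1, 0, 1, 0, 1, 1, 0, 1])
scores = np.array([0.10, 0.25, 0.30, 0.40, 0.45, 0.50, 0.65, 0.70, 0.80, 0.90])

precision, recall, thresholds = precision_recall_curve(y_val, scores)
# precision/recall carry one extra final point (recall 0, no threshold),
# so drop it before pairing them with thresholds
p, r = precision[:-1], recall[:-1]
f1 = np.divide(2 * p * r, p + r, out=np.zeros_like(p), where=(p + r) > 0)

best = int(np.argmax(f1))
best_threshold = thresholds[best]
print(f"best threshold={best_threshold:.2f}  F1={f1[best]:.3f}")
```

On this toy data the best cutoff is 0.30 (F1 ≈ 0.77), versus F1 = 0.60 at the default 0.5. If your error costs are asymmetric, swap the F1 line for an objective that reflects them.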

🧠 Exercise: Check Your Understanding

Let’s see if the Accuracy Paradox bites you.

Dataset: Covid rapid tests

- Population: 10 000

- True infections: 50

- Model predicts 150 positives; only 30 correct.

Questions

- Accuracy = ?

- Precision = ?

- Recall = ?

- Which metric proves the model stinks?

Answers

- 98.6 % (30 TP + 9 830 TN) / 10 000 → sounds amazing!

- 20 % (30 / 150) → awful

- 60 % (30 / 50) → mediocre

- Precision exposes the false-positive storm; Accuracy hides it.
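To double-check the answers, this sketch (assuming scikit-learn and NumPy) rebuilds the exercise as label arrays and lets the library do the math. It also reports F1, which the exercise didn’t ask for:

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# Rebuild the rapid-test exercise (1 = infected / flagged positive)
tp, fp, fn = 30, 150 - 30, 50 - 30
tn = 10_000 - tp - fp - fn
y_true = np.array([1] * tp + [0] * fp + [1] * fn + [0] * tn)
y_pred = np.array([1] * tp + [1] * fp + [0] * fn + [0] * tn)

results = {
    "accuracy": accuracy_score(y_true, y_pred),
    "precision": precision_score(y_true, y_pred),
    "recall": recall_score(y_true, y_pred),
    "f1": f1_score(y_true, y_pred),
}
print(results)
```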


🏁 Conclusion

After our deep dive into the world of accuracy and F1 score in machine learning benchmarking, one thing is crystal clear: accuracy alone is a dangerously incomplete story. It’s the flashy headline metric that can lull you into a false sense of security, especially when dealing with imbalanced datasets where the positive class is rare but critical.

The F1 score, on the other hand, is the seasoned detective that balances precision (how often your positive predictions are correct) and recall (how many actual positives you catch). It’s the metric that tells the whole story, especially in real-world applications like IoT malware detection, fraud prevention, and medical diagnostics where the cost of errors is high.

Remember our IoT malware detection example? The model boasting 99.3% accuracy was actually missing 70% of threats. The F1 score cut through that illusion, revealing the true performance and guiding us to better model choices.

So, when benchmarking your next AI model, ask yourself:

- Is my dataset balanced? If yes, accuracy can be a quick sanity check.

- Are false positives or false negatives costly? Then prioritize precision, recall, or the F1 score accordingly.

- Do I want a single, balanced metric? F1 score is your go-to.

By combining these insights with tools like Scikit-Learn, Weights & Biases, and cloud GPU platforms, you’ll not only benchmark smarter but also build models that truly deliver business value.

🔗 Recommended Links

Ready to level up your ML benchmarking game? Check out these essential tools and resources:

- Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow (Amazon)

- 👉 Shop NVIDIA GPUs for AI Training:
  - NVIDIA A100 80GB: Amazon | DigitalOcean | RunPod
  - RTX 4090 Workstations: Amazon | Paperspace

- Scikit-Learn Classification Metrics Documentation: Scikit-Learn Precision and Recall

- Illumina DRAGEN Platform (Genomic Variant Calling): Illumina DRAGEN Official

- Benchmarking Machine Learning Models for IoT Malware Detection: arXiv Paper

❓ FAQ: Burning Questions on ML Benchmarking

How do accuracy and F1 score impact model evaluation in machine learning?

Accuracy measures the overall correctness of your model’s predictions, making it intuitive and easy to understand. However, it treats all errors equally and can be misleading when the dataset is imbalanced—meaning one class dominates the data. For example, if only 1% of your data is positive, a model that always predicts negative will have 99% accuracy but zero usefulness.

F1 score balances precision and recall, focusing on the positive class performance. It’s especially valuable when both false positives and false negatives carry real costs or when the positive class is rare. Using F1 helps you avoid the trap of inflated accuracy and ensures your model performs well where it matters most.

When should you use F1 score instead of accuracy in AI benchmarking?

Use the F1 score when:

- Your dataset is imbalanced, with a small positive class.

- The cost of false positives and false negatives is significant and needs balancing.

- You want a single metric that reflects both the correctness of positive predictions and the coverage of actual positives.

- Your application involves critical detection tasks like fraud, malware, or disease diagnosis.

In contrast, accuracy is suitable when classes are roughly balanced and the cost of different errors is similar.

What are the limitations of accuracy compared to F1 score in imbalanced datasets?

Accuracy can be grossly misleading in imbalanced datasets because it is dominated by the majority class. A model predicting only the majority class can achieve high accuracy but fail to detect any minority class instances. This is known as the Accuracy Paradox.

F1 score mitigates this by combining precision and recall, focusing on the minority class performance. However, F1 does not consider true negatives, so it should be complemented with other metrics like specificity or ROC-AUC for a full picture.
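One common way to get specificity out of scikit-learn is to reuse recall_score with the negative class as pos_label. A sketch on invented labels:

```python
from sklearn.metrics import recall_score

# Toy labels: 6 negatives, 4 positives (1 = positive class)
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 1, 1, 1, 1, 1, 0]

# Sensitivity (recall) = TP / (TP + FN): how many positives were caught
sensitivity = recall_score(y_true, y_pred, pos_label=1)
# Specificity = TN / (TN + FP): recall computed with the negative class as "positive"
specificity = recall_score(y_true, y_pred, pos_label=0)
print(f"sensitivity={sensitivity:.2f}  specificity={specificity:.2f}")
```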

How can understanding accuracy and F1 score improve AI-driven business decisions?

By choosing the right metric, you align your model’s evaluation with business goals and risk tolerance. For example:

- In fraud detection, prioritizing F1 or recall reduces missed fraud cases, saving money and reputation.

- In spam filtering, optimizing precision minimizes false positives, preventing customer frustration.

- In medical diagnostics, high recall ensures critical conditions are caught early, potentially saving lives.

Understanding these metrics helps stakeholders interpret model performance correctly, avoid costly misdeployments, and make informed decisions about model improvements and deployment thresholds.

Additional FAQs

What is the difference between precision and recall?

Precision measures the correctness of positive predictions (how many predicted positives are true), while recall measures the coverage of actual positives (how many actual positives were detected). They often trade off against each other.

Can F1 score be used for multiclass classification?

Yes! You can compute macro, micro, or weighted F1 scores to summarize performance across multiple classes, adjusting for class imbalance and importance.

How do I choose the right threshold for classification?

Adjusting the decision threshold changes precision and recall. Use validation data to find the threshold that best balances your business’s tolerance for false positives and false negatives, often visualized via precision-recall curves.

📚 Reference Links

- Google Developers: Classification Metrics

- Scikit-Learn Documentation: Precision, Recall, F1

- Illumina DRAGEN Platform: Official Site

- arXiv: Benchmarking Machine Learning Models for IoT Malware Detection

- Machine Learning Mastery: Why Accuracy is Not Enough

For more expert insights on AI benchmarking and business applications, visit ChatBench.org™ AI Business Applications.