
12 Essential KPIs for Evaluating NLP Models in 2025 🚀

Natural Language Processing (NLP) models have become the backbone of countless AI applications—from chatbots that understand your queries to translation engines breaking language barriers. But how do you really know if your NLP model is performing well? Spoiler alert: it’s not as simple as checking accuracy. In fact, relying on a single metric can be downright misleading.

At ChatBench.org™, we’ve dissected every angle of NLP evaluation to bring you 12 essential Key Performance Indicators (KPIs) that reveal the true strengths and weaknesses of your models. From classic metrics like Precision and Recall to cutting-edge semantic measures like BERTScore, and even efficiency and fairness indicators, this guide covers it all. Curious about why perplexity is the “gold standard” for language models or how real-world conversational AI success is measured beyond numbers? Stick around—we’ll unpack these mysteries and more with expert insights and real-world case studies.

Key Takeaways

- No one-size-fits-all KPI: Choose metrics tailored to your NLP task—classification, generation, or dialogue—to get meaningful insights.

- Balance precision and recall: Use the F1-score to harmonize false positives and false negatives, especially in imbalanced datasets.

- Perplexity is king for language models: Lower perplexity means your model predicts text more confidently and accurately.

- Semantic metrics matter: Embedding-based scores like BERTScore capture meaning beyond exact word matches.

- Efficiency and fairness are critical: Real-world success depends on speed, scalability, and unbiased performance across user groups.

- High-quality datasets are the foundation: Reliable KPIs require carefully annotated, representative evaluation data with measured inter-annotator agreement.

Ready to master NLP model evaluation and turn AI insight into your competitive edge? Let’s dive in!

Table of Contents

- ⚡️ Quick Tips and Facts on NLP Model KPIs

- 🔍 Understanding the Evolution of NLP Model Evaluation

- 📊 1. Accuracy Metrics: Measuring Correctness in NLP

- 📈 2. Perplexity and Language Model Evaluation

- 📉 3. Loss Functions: Cross-Entropy and Beyond

- 🧠 4. Semantic and Contextual Understanding Metrics

- 💬 5. Dialogue and Conversational AI KPIs

- ⚙️ 6. Efficiency and Scalability KPIs for NLP Models

- 🧪 7. Robustness and Fairness Indicators in NLP

- 📚 8. Dataset Quality and Its Impact on KPI Evaluation

- 🛠️ Best Practices for Selecting and Combining NLP KPIs

- 📊 Visualization and Reporting of NLP Model Performance

- 🤖 Real-World Case Studies: KPI Applications in NLP Projects

- 🔮 Future Trends in NLP Model Evaluation Metrics

- 📝 Conclusion: Mastering NLP KPIs for Model Success

- 🔗 Recommended Links for Deepening Your NLP KPI Knowledge

- ❓ Frequently Asked Questions About NLP Model KPIs

- 📚 Reference Links and Further Reading


⚡️ Quick Tips and Facts on NLP Model KPIs

Welcome, fellow AI enthusiasts! Before we dive deep into the nitty-gritty of Natural Language Processing (NLP) model evaluation, let’s get you started with some quick, digestible takeaways. Here at ChatBench.org™, we live and breathe this stuff, so think of this as your expert cheat sheet.

- No Single Metric Rules Them All: The “best” Key Performance Indicator (KPI) depends entirely on your specific NLP task. A sentiment analysis model has different success criteria than a machine translation engine.

- Perplexity is King for Language Models: When you hear data scientists talking about how “perplexed” their model is, they’re referring to a core metric. Lower perplexity is better, as it indicates the model is more confident and accurate in its predictions. As explained in a helpful overview, a perplexity of 1 means the model is 100% confident, which is the dream!

- Accuracy Isn’t Always Accurate: Simple accuracy can be misleading, especially with imbalanced datasets. If you’re trying to detect a rare disease that appears in 1% of scans, a model that always says “no disease” is 99% accurate but completely useless!

- Precision vs. Recall: The Eternal Trade-off:

  - Precision: Of all the positive predictions you made, how many were actually correct? (Think: minimizing false positives).

  - Recall (Sensitivity): Of all the actual positives out there, how many did you find? (Think: minimizing false negatives). You’ll often have to decide which is more important for your application.

- F1-Score is Your Best Friend: The F1-score is the harmonic mean of precision and recall, giving you a single number that balances both. It’s a go-to metric for many classification tasks.

- Clinical NLP is a High-Stakes Game: In medical applications, metrics like Receiver Operating Characteristic-Area Under the Curve (ROC-AUC) and Sensitivity (Recall) are paramount. A systematic review found ROC-AUC was used in 78.3% of emergency department triage studies!

- Context is Everything: Modern metrics like BERTScore go beyond simple word matching. They use contextual embeddings to see if the meaning of the model’s output is close to the reference, even if the exact words are different.

- Don’t Forget Efficiency! A model can be incredibly accurate but useless if it’s too slow or big for your application. Inference time and model size are critical business-facing KPIs.

🔍 Understanding the Evolution of NLP Model Evaluation

Ah, the good old days. Remember when we could just count keywords? Evaluating language processing used to be a simpler affair. But just as we’ve evolved from clunky flip phones to the supercomputers in our pockets, NLP models have made a quantum leap. This journey has forced us, as researchers and engineers, to get a whole lot smarter about how we measure success.

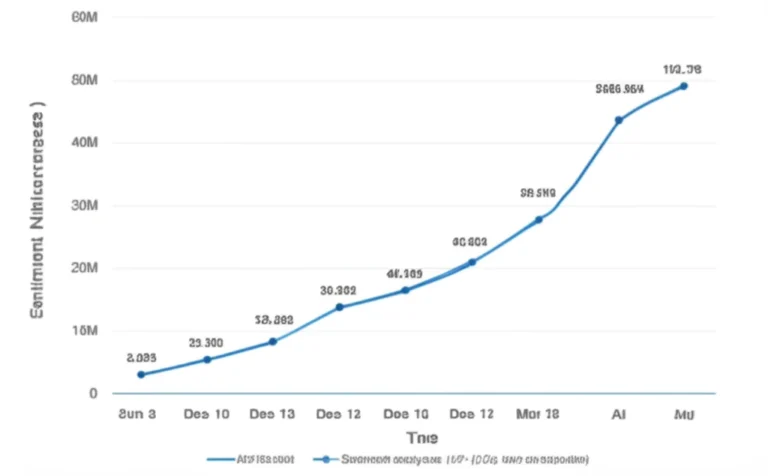

Initially, rule-based systems dominated. Evaluation was straightforward: did the system follow the rule? ✅ or ❌. Then came statistical models like n-grams. This is where metrics like Perplexity first rose to prominence, giving us a mathematical way to gauge how well a model could predict the next word.

The real revolution, however, came with deep learning and transformer architectures like the one used in Google’s BERT and OpenAI’s GPT series. These models don’t just see words; they understand context. This leap in capability demanded a new suite of evaluation tools. Simple word-overlap metrics like BLEU, while still useful, couldn’t capture the semantic richness of the text these models generated. This led to the development of more sophisticated, embedding-based metrics that can appreciate a good paraphrase when they see one.

Furthermore, as these models moved from the lab into real-world AI Business Applications, the definition of “performance” expanded. It’s no longer just about accuracy. Now we have to ask:

- Is the model fast enough for a real-time chatbot?

- Is it fair and unbiased across different user groups?

- Is it robust against weird, unexpected inputs?

This evolution brings us to the modern landscape of NLP evaluation—a rich, multi-faceted discipline that balances statistical rigor with real-world pragmatism. It’s a landscape we’ll explore in detail right now.

📊 1. Accuracy Metrics: Measuring Correctness in NLP

Let’s start with the classics. When you want to know if your model is getting things right, these are your first port of call. But beware—the devil is in the details!

Precision, Recall, and F1 Score Explained

For any classification task (like spam detection, sentiment analysis, or identifying medical conditions in text), you need to understand the four possible outcomes of a prediction. Let’s use a spam filter as an example:

| | Predicted: Spam | Predicted: Not Spam |
|---|---|---|
| Actual: Spam | True Positive (TP) | False Negative (FN) |
| Actual: Not Spam | False Positive (FP) | True Negative (TN) |

From this, we derive the holy trinity of classification metrics:

- Precision (Positive Predictive Value): Answers the question: “Of all the emails we flagged as spam, how many were actually spam?” It’s the measure of your model’s exactness.

  - Formula: TP / (TP + FP)

  - High Precision is crucial when: The cost of a false positive is high. You don’t want to send an important email (like a job offer) to the spam folder!

- Recall (Sensitivity or True Positive Rate): Answers the question: “Of all the actual spam emails that came in, how many did we catch?” It’s the measure of your model’s completeness.

  - Formula: TP / (TP + FN)

  - High Recall is crucial when: The cost of a false negative is high. In medical diagnoses, you’d rather have a false alarm (low precision) than miss a real case of disease (low recall).

- F1-Score: This is the hero that balances the two. It’s the harmonic mean of Precision and Recall, providing a single score that is only high when both Precision and Recall are high.

  - Formula: 2 * (Precision * Recall) / (Precision + Recall)

  - Use the F1-Score when: You need a balance between Precision and Recall, and the class distribution is uneven. A robust evaluation methodology will always report the F1-score with a 95% confidence interval to show the range of its true value.
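To make the three formulas concrete, here is a minimal sketch that computes them straight from confusion-matrix counts. The function name and the spam-filter counts are made up for illustration; they are not taken from any real system.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Compute precision, recall and F1 from confusion-matrix counts."""
    # Guard against division by zero when the model predicts no positives
    # or the data contains no positives.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical spam-filter counts, invented for this example:
# 80 spam emails caught, 20 good emails wrongly flagged, 40 spam emails missed.
precision, recall, f1 = precision_recall_f1(tp=80, fp=20, fn=40)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

Notice that the F1-score lands between the two values but closer to the lower one, which is exactly what the harmonic mean is meant to do.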

Accuracy vs. Balanced Accuracy: When to Use Which?

- Accuracy: This is the most intuitive metric. It’s simply the ratio of correct predictions to the total number of predictions.

  - Formula: (TP + TN) / (TP + TN + FP + FN)

  - The Trap: As we mentioned, if 99% of your emails are not spam, a lazy model can achieve 99% accuracy by never flagging anything. This is why relying solely on accuracy is a rookie mistake in our field.

- Balanced Accuracy: This metric is the average of recall obtained on each class. It’s a much better choice for imbalanced datasets because it gives equal weight to both the majority and minority classes.

  - Formula (two classes): (Recall_Class1 + Recall_Class2) / 2

  - Our Recommendation: When in doubt, start with Balanced Accuracy. It will save you from being fooled by a model that’s simply ignoring the rare, but often most important, events.
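The accuracy trap is easy to reproduce. The sketch below scores a hypothetical "lazy" spam filter that never flags anything, on an invented mailbox of 1,000 emails where 1% are spam. The counts are illustrative assumptions, not real data.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Share of all predictions that were correct."""
    return (tp + tn) / (tp + tn + fp + fn)


def balanced_accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Average of the recall on the positive class and on the negative class."""
    recall_pos = tp / (tp + fn) if (tp + fn) else 0.0
    recall_neg = tn / (tn + fp) if (tn + fp) else 0.0
    return (recall_pos + recall_neg) / 2


# Hypothetical mailbox: 10 spam, 990 not spam. The lazy model flags nothing,
# so every spam email is a false negative and every good email a true negative.
lazy_model = {"tp": 0, "tn": 990, "fp": 0, "fn": 10}

lazy_accuracy = accuracy(**lazy_model)
lazy_balanced = balanced_accuracy(**lazy_model)
print(f"accuracy={lazy_accuracy:.2f} balanced_accuracy={lazy_balanced:.2f}")
```

Plain accuracy comes out at 0.99 while balanced accuracy drops to 0.50, the same score a coin flip would earn on a two-class problem.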

📈 2. Perplexity and Language Model Evaluation

If you’re working with generative models like GPT-3 or Llama 2—models that need to predict the next word in a sequence—perplexity is your north star. It’s one of the most fundamental concepts covered in guides to AI benchmarks for natural language processing tasks.

So, what is it? In essence, perplexity measures how uncertain a model is when predicting the next word. Think of it as the model’s level of surprise. If it sees the phrase “The cat sat on the ___”, it should be pretty confident the next word is “mat” or “couch”. Its perplexity would be low. If the phrase was “The politician gave a ___”, the possibilities are much wider (“speech”, “statement”, “resignation”), so the perplexity would be higher.

The key takeaway is simple: a lower perplexity score indicates a better language model.

Perplexity gives a quantitative measure of the model’s prediction confidence. A model that has to choose between 10 equally likely words at each step has a perplexity of 10. A model that knows the exact right word every time has a perplexity of 1.

Mathematically, perplexity is the exponentiation of the cross-entropy loss. Don’t worry, we’ll break that down next. For now, just know that when you’re training a language model, that decreasing loss curve you’re watching is directly related to your perplexity getting better.
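As a rough sketch of that relationship, the snippet below computes perplexity as the exponential of the average negative log-probability the model gave to each correct token. It assumes natural logarithms and takes a plain list of probabilities as input; a real evaluation would pull those probabilities from the model's output.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """Exponential of the mean negative log-probability of the true tokens."""
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# A model that spreads its bets evenly over 10 words gives each true word p = 0.1.
uniform_over_ten = perplexity([0.1] * 5)

# A model that is always certain of the right word gives each true word p = 1.0.
always_certain = perplexity([1.0] * 5)

print(round(uniform_over_ten, 6), round(always_certain, 6))
```

The two cases reproduce the intuition from the text: ten equally likely choices give a perplexity of about 10, and perfect certainty gives 1.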

However, a word of caution from our team’s experience: you can’t always compare perplexity scores between different models directly. Why? Because perplexity is sensitive to things like:

- Vocabulary Size: A model with a larger vocabulary has more choices, which can naturally lead to higher perplexity.

- Preprocessing: Different ways of tokenizing text (e.g., word-level vs. subword-level) will result in different perplexity scores.

- Context Length: How many previous words the model considers can affect its predictions.

When comparing models, you have to ensure you’re using a standardized test set and evaluation framework, like the ones found in our LLM Benchmarks category.

📉 3. Loss Functions: Cross-Entropy and Beyond

Every time a machine learning model trains, it’s playing a game of “getting warmer.” The loss function is what tells the model if it’s getting warmer (closer to the right answer) or colder (further away). The goal of training is to minimize this loss.

For most NLP tasks, the go-to loss function is Cross-Entropy.

So, what is it? Cross-entropy measures the “distance” between two probability distributions: the model’s predicted distribution and the true distribution (the “right answer”).

Let’s imagine a simple task: predicting the next word after “the cat sat on the”.

- True Distribution: The word is “mat”. So, the probability for “mat” is 100%, and 0% for every other word in the vocabulary.

- Model’s Prediction: A good model might predict “mat” with 70% probability, “couch” with 20%, and “floor” with 10%.

Cross-entropy calculates a score that represents how different the model’s prediction is from the 100% certain truth. The more probability the model assigns to the correct word, the lower the cross-entropy loss.

“When we train a language model with the objective of minimizing the cross entropy loss, the true objective is to minimize the KL divergence of the distribution, which was learned by our language model from the empirical distribution of the language.”

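In simpler terms, you’re training the model to make its internal probability landscape match the reality of the language. And as we mentioned before, perplexity is just the exponentiated cross-entropy loss: 2 raised to the loss when it is measured in bits (log base 2), or e raised to the loss when it is measured in nats (natural log, which is what most deep learning frameworks report). They are two sides of the same coin for measuring model performance during the Fine-Tuning & Training process.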
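Here is a small worked version of the “the cat sat on the” example, using the probabilities quoted above. It is a sketch with a three-word toy vocabulary, and it shows that the perplexity comes out the same whether the loss is measured in nats or in bits, as long as the matching base is used for the exponent.

```python
import math


def cross_entropy(true_dist: dict[str, float], predicted_dist: dict[str, float]) -> float:
    """H(p, q) = -sum over words of p(w) * ln q(w), measured in nats."""
    # Words with zero true probability contribute nothing to the sum.
    return -sum(
        p * math.log(predicted_dist[word])
        for word, p in true_dist.items()
        if p > 0
    )


true_dist = {"mat": 1.0, "couch": 0.0, "floor": 0.0}   # the word really was "mat"
predicted = {"mat": 0.7, "couch": 0.2, "floor": 0.1}   # the model's guess

loss_nats = cross_entropy(true_dist, predicted)   # equals -ln(0.7)
loss_bits = loss_nats / math.log(2)               # same loss expressed in bits

perplexity_from_nats = math.exp(loss_nats)
perplexity_from_bits = 2 ** loss_bits

print(f"loss={loss_nats:.4f} nats, perplexity={perplexity_from_nats:.4f}")
```

With a one-hot target, the loss collapses to the negative log of the probability given to the correct word, so the perplexity here is simply 1 / 0.7.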

🧠 4. Semantic and Contextual Understanding Metrics

Okay, the classic metrics are great, but they have a weakness: they’re often too literal. If a human translator writes “The weather is lovely,” and your model writes “It’s a beautiful day,” traditional metrics might score it poorly because the words don’t match. But the meaning is identical!

This is where semantic metrics come in. They are designed to measure how well a model captures the underlying meaning, not just the surface-level words.

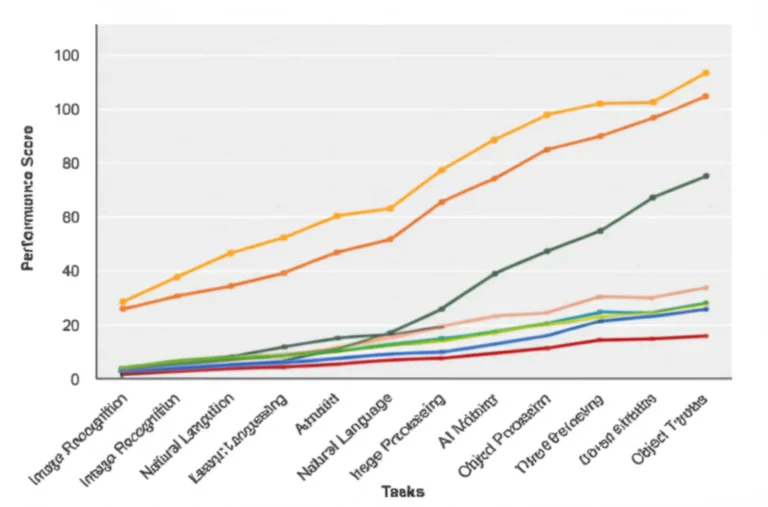

BLEU, ROUGE, and METEOR for Text Generation

These are the workhorses for evaluating machine translation and text summarization tasks.

- BLEU (Bilingual Evaluation Understudy): This is the granddaddy of translation metrics. It measures how many n-grams (sequences of 1, 2, 3, or 4 words) from the model’s generated translation overlap with a set of high-quality human reference translations. It’s precision-focused: did the words the model used appear in the reference?

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): As the name suggests, ROUGE is recall-focused. It’s the flip side of BLEU. It checks if the n-grams from the human reference text appear in the model’s generated summary. It’s great for summarization because you want to ensure the key points from the original text were recalled by the model.

- METEOR (Metric for Evaluation of Translation with Explicit ORdering): METEOR is the sophisticated cousin of BLEU and ROUGE. It goes a step further by considering synonyms and stemmed words (e.g., “running” and “run”). It tries to align words between the generated text and the reference and calculates a score based on precision, recall, and a fragmentation penalty to reward longer, contiguous matches.
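To see the precision-versus-recall distinction between BLEU and ROUGE, here is a deliberately simplified n-gram overlap sketch. It is not a full implementation of either metric: real BLEU combines several n-gram orders with a brevity penalty, and real ROUGE has several variants. The function names and example sentences are our own.

```python
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def ngram_overlap(candidate: str, reference: str, n: int = 1) -> tuple[float, float]:
    """Return (precision, recall) of candidate n-grams against one reference."""
    cand = ngram_counts(candidate.split(), n)
    ref = ngram_counts(reference.split(), n)
    # Counter intersection keeps the minimum count per n-gram ("clipped" matches),
    # so repeating a matching word does not inflate the score.
    matched = sum((cand & ref).values())
    precision = matched / max(sum(cand.values()), 1)   # BLEU-style view
    recall = matched / max(sum(ref.values()), 1)       # ROUGE-style view
    return precision, recall


reference = "the weather is lovely today"
candidate = "the weather is nice"

unigram_p, unigram_r = ngram_overlap(candidate, reference, n=1)
bigram_p, bigram_r = ngram_overlap(candidate, reference, n=2)
print(unigram_p, unigram_r, bigram_p, bigram_r)
```

The candidate scores higher on precision than on recall because it is short: most of what it says appears in the reference, but it leaves some of the reference out.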

Embedding-based Metrics: BERTScore and Beyond

The real cutting edge in semantic evaluation lies with embedding-based metrics. Instead of matching words, these metrics convert both the candidate text and the reference text into dense numerical vectors (embeddings) using a powerful pre-trained model like BERT.

BERTScore then computes the cosine similarity between the contextual embeddings of the tokens in the two sentences, greedily matching each token to its most similar counterpart and aggregating the matches into precision, recall, and F1 scores. This allows it to understand that “intelligent” is a better match for “smart” than it is for “hungry,” something BLEU could never do. It’s a game-changer for evaluating nuanced text generation and is a key part of many modern Model Comparisons.
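The matching idea can be sketched without loading a real model. In the toy example below, the two-dimensional vectors are invented stand-ins for BERT embeddings (real ones have hundreds of dimensions and depend on context), and the scoring function is a simplified illustration of greedy cosine matching rather than the official BERTScore implementation.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def greedy_match_scores(cand_vecs, ref_vecs):
    """Match each token to its most similar counterpart, then average."""
    precision = sum(max(cosine(c, r) for r in ref_vecs) for c in cand_vecs) / len(cand_vecs)
    recall = sum(max(cosine(r, c) for c in cand_vecs) for r in ref_vecs) / len(ref_vecs)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical toy embeddings, chosen by hand so that related words point
# in similar directions. They are NOT real BERT outputs.
toy_embedding = {
    "smart": [0.90, 0.10],
    "intelligent": [0.85, 0.20],
    "hungry": [0.10, 0.95],
}

sim_synonym = cosine(toy_embedding["smart"], toy_embedding["intelligent"])
sim_unrelated = cosine(toy_embedding["smart"], toy_embedding["hungry"])

p, r, f1 = greedy_match_scores(
    [toy_embedding["intelligent"]],   # candidate sentence: one token
    [toy_embedding["smart"]],         # reference sentence: one token
)
print(f"synonym={sim_synonym:.3f} unrelated={sim_unrelated:.3f} f1={f1:.3f}")
```

An exact-match metric would score “intelligent” versus “smart” as zero, while the embedding comparison gives it a similarity close to 1.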

💬 5. Dialogue and Conversational AI KPIs

When you’re building a chatbot or a voice assistant, academic metrics only tell part of the story. Your users don’t care about your bot’s perplexity score; they care if it solved their problem! For these applications, we need a blend of automated metrics and human-centric KPIs.

- Task Completion Rate: Did the user successfully achieve their goal (e.g., book a flight, check an order status)? This is often the most important business metric.

- Turn-level Success: In a multi-step conversation, is the bot correctly understanding and responding at each turn?

- User Satisfaction (CSAT): The classic “How would you rate your experience?” survey at the end of a chat. It’s a direct measure of user happiness.

- Containment Rate: How many user queries were resolved by the bot without needing to escalate to a human agent? This is a key KPI for call center automation.

- F-Score (for Intent Recognition): How well does the bot understand the user’s initial intent? This is a standard classification problem where Precision, Recall, and F1-score are perfect.

At ChatBench.org™, we always advise clients to build a comprehensive dashboard that tracks both the technical performance (like intent recognition F1-score) and the business outcomes (like task completion and containment rate).
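As a starting point for such a dashboard, here is a minimal sketch that derives three of the business KPIs from conversation logs. The `Conversation` record and its fields are hypothetical, and counting a rating of 4 or 5 as “satisfied” is our assumption; your logging schema and CSAT definition may differ.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Conversation:
    task_completed: bool          # did the user reach their goal?
    escalated_to_human: bool      # was the chat handed to a human agent?
    csat_rating: Optional[int]    # 1-5 survey answer, None if the user skipped it


def dialogue_kpis(conversations: list[Conversation]) -> dict[str, float]:
    """Compute task completion rate, containment rate and CSAT."""
    total = len(conversations)
    rated = [c.csat_rating for c in conversations if c.csat_rating is not None]
    return {
        "task_completion_rate": sum(c.task_completed for c in conversations) / total,
        "containment_rate": sum(not c.escalated_to_human for c in conversations) / total,
        # Assumption: a rating of 4 or 5 counts as a satisfied user.
        "csat": sum(r >= 4 for r in rated) / len(rated) if rated else 0.0,
    }


# Invented log entries for illustration only.
logs = [
    Conversation(True, False, 5),
    Conversation(True, False, 4),
    Conversation(False, True, 2),
    Conversation(True, True, None),
    Conversation(False, False, 3),
]

kpis = dialogue_kpis(logs)
print(kpis)
```

Keeping unanswered surveys out of the CSAT denominator, as done here, is one common choice; whichever rule you pick, apply it consistently over time.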

⚙️ 6. Efficiency and Scalability KPIs for NLP Models

An NLP model that gives brilliant answers but takes 30 seconds to do so is not a production-ready model. In the real world, speed and cost are features. For any serious developer, these efficiency KPIs are non-negotiable. Check out our Developer Guides for more on deploying models efficiently.

Inference Time and Latency

- Latency: How long does it take for the model to return a single prediction after receiving the input? This is critical for real-time applications like interactive chatbots. You’re typically aiming for sub-second latency.

- Throughput: How many predictions can the model handle per second? This is crucial for batch processing tasks, like analyzing millions of customer reviews overnight.

These metrics are heavily influenced by the hardware you use. Running inference on a powerful GPU from NVIDIA on a platform like Paperspace or RunPod will yield vastly different results than running on a CPU.
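A simple timing harness is often enough for a first measurement. In the sketch below, `fake_predict` is a placeholder for your model's inference call, and the warm-up count and percentile choice are assumptions you should adapt. Measured numbers will vary from machine to machine.

```python
import statistics
import time


def fake_predict(text: str) -> int:
    """Stand-in for a real model call; replace with your own inference function."""
    return len(text.split())


def benchmark(predict, inputs: list[str], warmup: int = 3) -> dict[str, float]:
    """Measure per-request latency and overall throughput for sequential calls."""
    for text in inputs[:warmup]:
        predict(text)  # warm-up calls are not timed (caches, lazy initialisation)

    latencies = []
    start = time.perf_counter()
    for text in inputs:
        t0 = time.perf_counter()
        predict(text)
        latencies.append(time.perf_counter() - t0)
    total_seconds = time.perf_counter() - start

    latencies.sort()
    p95_index = min(len(latencies) - 1, int(0.95 * len(latencies)))
    return {
        "p50_ms": statistics.median(latencies) * 1000,
        "p95_ms": latencies[p95_index] * 1000,
        "throughput_per_s": len(inputs) / total_seconds,
    }


report = benchmark(fake_predict, ["the cat sat on the mat"] * 200)
print(report)
```

Reporting a tail percentile such as p95 alongside the median matters for chatbots, because users notice the slow responses far more than the typical ones.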

Model Size and Memory Footprint

- Model Size (on disk): How much storage space does the model file take up? A multi-billion parameter model like GPT-3 (175 billion parameters) takes up hundreds of gigabytes, making it impossible to deploy on edge devices like smartphones.

- Memory Footprint (in RAM/VRAM): How much memory is required to load and run the model? This determines the kind of hardware you need and directly impacts operational costs.

Techniques like quantization (reducing the precision of the model’s weights) and distillation (training a smaller model to mimic a larger one) are essential for shrinking models to make them more efficient without sacrificing too much accuracy.
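A back-of-the-envelope estimate shows why quantization helps. The sketch below multiplies a parameter count by the bytes needed per weight. It covers the weights only (activations, caches and framework overhead come on top), and the 7-billion-parameter model is a hypothetical example.

```python
# Bytes needed to store one weight at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_size_gb(num_params: int, dtype: str) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical 7-billion-parameter model.
num_params = 7_000_000_000
sizes = {dtype: weight_size_gb(num_params, dtype) for dtype in BYTES_PER_PARAM}

for dtype, gb in sizes.items():
    print(f"{dtype}: {gb:.1f} GB")
```

Going from 32-bit floats to 8-bit integers cuts the weight storage to a quarter, which can be the difference between needing several GPUs and fitting on one.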

🧪 7. Robustness and Fairness Indicators in NLP

An NLP model is only as good as its worst day. How does it behave when it encounters typos, slang, or deliberately adversarial inputs? And more importantly, does it perform equally well for people from all backgrounds?

- Robustness: This is a measure of the model’s resilience to “distribution shift”—when the data it sees in the real world is different from its training data. We test this by feeding it perturbed inputs:

  - Adding typos or grammatical errors.

  - Paraphrasing sentences.

  - Using adversarial attacks designed to fool the model.

- Fairness and Bias: This is one of the most critical areas of modern AI research. A model trained on biased internet data can perpetuate harmful stereotypes. We evaluate fairness by measuring the model’s performance across different demographic groups (e.g., gender, race, dialect). A significant drop in performance for any one group is a major red flag.
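One simple way to run that check is to slice your evaluation results by group and look at the gap between the best and worst slice. The sketch below uses accuracy and two made-up dialect groups; the records, group names and the choice of accuracy as the metric are all illustrative assumptions.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, int, int]]) -> dict[str, float]:
    """records holds (group, true_label, predicted_label) triples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


# Invented evaluation records for two hypothetical user groups.
records = [
    ("dialect_a", 1, 1), ("dialect_a", 0, 0), ("dialect_a", 1, 1), ("dialect_a", 0, 1),
    ("dialect_b", 1, 0), ("dialect_b", 0, 0), ("dialect_b", 1, 0), ("dialect_b", 0, 1),
]

per_group = accuracy_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, f"gap={gap:.2f}")
```

A model can look fine on the overall average (0.50 here) while one group gets a far worse experience than another, which is why the per-group breakdown belongs on the scorecard.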

A recent systematic review of ML models in medicine found a high risk of bias in 73% of the models analyzed, highlighting the urgent need for better evaluation practices in this area. Adopting Explainable AI (XAI) techniques can help us understand why a model makes certain predictions, which is a crucial step in identifying and mitigating bias.

📚 8. Dataset Quality and Its Impact on KPI Evaluation

Here’s a truth every ML engineer knows deep in their bones: Garbage in, garbage out. Your KPIs are meaningless if your test data is flawed. The foundation of any robust evaluation is a high-quality, manually annotated gold standard dataset.

The process, as outlined in a comprehensive methodology for clinical NLP, involves several key steps:

- Define the Target Population: Be crystal clear about the data you want to evaluate on.

- Statistical Sampling: Use a tool like SLiCE (Sample Size Calculator for Evaluations) to determine the minimum number of documents you need to annotate to achieve statistically significant results.

- Clear Annotation Guidelines: Create a detailed rulebook for your human annotators.

- Measure Inter-annotator Agreement (IAA): Have at least two experts annotate the same data independently. The level of agreement between them (often measured using an F1-score) tells you how ambiguous the task is and sets a realistic upper bound for your model’s performance. If your human experts only agree 85% of the time, you can’t expect your AI to achieve 95% accuracy!
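Here is a minimal sketch of the agreement step for a span-annotation task, treating one annotator as the “reference” and the other as the “prediction”. The span format (document id, start, end, label), the exact-match rule and the example annotations are all hypothetical.

```python
def pairwise_f1(annotations_a: set, annotations_b: set) -> float:
    """F1 between two annotators' sets of exactly matching annotations."""
    if not annotations_a and not annotations_b:
        return 1.0  # both annotators agree that there is nothing to mark
    agreed = len(annotations_a & annotations_b)
    # With one annotator as reference, precision = agreed / |B| and
    # recall = agreed / |A|, which simplifies to the expression below.
    return 2 * agreed / (len(annotations_a) + len(annotations_b))


# Invented annotations: (document id, start offset, end offset, label).
annotator_a = {
    ("doc1", 0, 9, "DRUG"),
    ("doc1", 15, 22, "DOSE"),
    ("doc2", 3, 11, "DRUG"),
    ("doc2", 30, 41, "SYMPTOM"),
}
annotator_b = {
    ("doc1", 0, 9, "DRUG"),
    ("doc1", 15, 22, "DOSE"),
    ("doc2", 3, 11, "DRUG"),
    ("doc2", 28, 41, "SYMPTOM"),   # same label, but different span boundary
}

iaa_f1 = pairwise_f1(annotator_a, annotator_b)
print(f"inter-annotator F1 = {iaa_f1:.2f}")
```

The single boundary disagreement is enough to pull the score down to 0.75, a useful reminder that strict matching rules make both humans and models look worse.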

Only by investing in a high-quality evaluation set can you truly trust the numbers your KPIs are showing you.

🛠️ Best Practices for Selecting and Combining NLP KPIs

So, how do you pull all of this together into a coherent evaluation strategy? It’s about choosing a suite of metrics that gives you a holistic view of your model.

Here’s our recommended approach at ChatBench.org™:

- Start with Your Goal: What is the primary objective of your NLP application?

  - Classification? Start with F1-score, Precision, Recall, and Balanced Accuracy. Create a confusion matrix to see where the errors are.

  - Text Generation? Use Perplexity during training, and a combination of BLEU/ROUGE and a semantic metric like BERTScore for final evaluation.

  - Conversational AI? Combine automated metrics (like intent F1-score) with business KPIs (like Task Completion Rate and CSAT).

- Create a Balanced Scorecard: Don’t just track one number. Monitor a primary success metric alongside several secondary and “guardrail” metrics.

  - Primary Metric: e.g., F1-Score for a sentiment model.

  - Secondary Metrics: e.g., Precision and Recall broken down by sentiment class.

  - Guardrail Metrics: e.g., Latency, CPU/GPU usage, and fairness scores across demographic groups. These ensure that improving your primary metric doesn’t negatively impact other important aspects.

- Always Report Confidence Intervals: A single F1-score of 0.92 is less informative than “0.92 with a 95% confidence interval of [0.90, 0.94]”. This tells you the likely range of your model’s true performance and is a hallmark of rigorous scientific evaluation.

- Benchmark Against Baselines: How does your fancy new transformer model compare to a simpler, older model like Logistic Regression? Sometimes, a simpler model is “good enough” and much more efficient. In clinical settings, Logistic Regression is often used as the benchmark to beat.
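For the confidence-interval step, one common option is the percentile bootstrap: resample the test set with replacement many times and read the interval off the spread of scores. The sketch below is one way to do it, not the only one; the labels are synthetic, and the resample count and seed are arbitrary choices.

```python
import random


def f1_from_labels(y_true: list[int], y_pred: list[int]) -> float:
    """Binary F1 computed directly from label lists (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def bootstrap_ci(y_true, y_pred, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the F1-score."""
    rng = random.Random(seed)  # fixed seed so the interval is reproducible
    n = len(y_true)
    scores = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        scores.append(f1_from_labels([y_true[i] for i in idx],
                                     [y_pred[i] for i in idx]))
    scores.sort()
    lower = scores[int(alpha / 2 * n_resamples)]
    upper = scores[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Synthetic test set: 60 positives (50 found, 10 missed), 140 negatives (15 false alarms).
y_true = [1] * 60 + [0] * 140
y_pred = [1] * 50 + [0] * 10 + [1] * 15 + [0] * 125

point_estimate = f1_from_labels(y_true, y_pred)
ci_lower, ci_upper = bootstrap_ci(y_true, y_pred)
print(f"F1 = {point_estimate:.2f}, 95% CI = [{ci_lower:.2f}, {ci_upper:.2f}]")
```

With only 200 examples the interval is noticeably wide, which is a good argument for the sample-size planning discussed in the dataset section.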

📊 Visualization and Reporting of NLP Model Performance

Numbers in a spreadsheet are hard to interpret. To truly understand your model’s performance, you need to visualize it.

- Confusion Matrix: For any classification task, this is non-negotiable. It’s a simple grid that shows you exactly what kinds of mistakes your model is making (e.g., confusing “neutral” sentiment with “positive”).

- ROC Curve (Receiver Operating Characteristic): This plot shows the trade-off between the True Positive Rate (Recall) and the False Positive Rate at various threshold settings. The Area Under the Curve (AUC) gives you a single number to summarize performance—the closer to 1.0, the better. ROC-AUC is a staple in medical NLP evaluation.

- Precision-Recall Curve: Similar to the ROC curve, but it plots Precision vs. Recall. This is often more informative for tasks with large class imbalances.

- Performance Dashboards: Use tools like Tableau, Power BI, or open-source options like Grafana to create live dashboards that track your key KPIs over time. This is essential for monitoring models in production.
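If you want to sanity-check the AUC number on a chart, it has a handy interpretation: it is the probability that a randomly chosen positive example gets a higher score than a randomly chosen negative one. The sketch below computes it that way by brute force over all pairs. The labels and scores are invented, and the pairwise approach is only practical for small evaluation sets.

```python
def roc_auc(y_true: list[int], scores: list[float]) -> float:
    """ROC-AUC as the share of positive/negative pairs ranked correctly."""
    positives = [s for y, s in zip(y_true, scores) if y == 1]
    negatives = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0      # positive ranked above negative
            elif p == n:
                wins += 0.5      # ties count as half
    return wins / (len(positives) * len(negatives))


# Invented model scores for six examples (1 = positive class).
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

auc = roc_auc(y_true, scores)
print(f"ROC-AUC = {auc:.3f}")
```

Eight of the nine positive/negative pairs are ordered correctly here; the one miss is the positive scored 0.6 sitting below the negative scored 0.7.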

🤖 Real-World Case Studies: KPI Applications in NLP Projects

Let’s move from theory to practice. How are these KPIs used in the wild?

Case Study: Improving Emergency Department Triage

A fascinating systematic review analyzed 60 studies using ML and NLP to improve patient triage in emergency departments—a high-stakes environment where quick, accurate decisions are critical.

- The Problem: Human-led triage can be inconsistent and biased. The goal was to use ML to predict outcomes like hospital admission, ICU admission, or mortality.

- The Data: A mix of structured data (vital signs like heart rate, blood pressure) and unstructured data (the free-text triage notes written by nurses).

- Key Finding: Adding NLP to process the triage notes significantly improved the performance of the ML models in every single study that used them. This shows the immense value locked away in unstructured text.

- The KPIs Used: The most common metrics were ROC-AUC (78.3% of studies), Sensitivity/Recall (71.6%), and Precision (58.3%). This makes perfect sense: in a medical setting, you are highly concerned with catching all critical cases (high recall) and having a model that is generally trustworthy (high ROC-AUC).

- Top Performing Models: While many models were used, XGBoost and Deep Neural Networks (DNNs) were consistently the top performers, outperforming the baseline Logistic Regression models.

This case study is a perfect example of how a carefully chosen set of KPIs can be used to validate the effectiveness of NLP in a critical real-world application.

🔮 Future Trends in NLP Model Evaluation Metrics

The world of NLP is moving at lightning speed, and our evaluation methods are racing to keep up. So, what’s next on the horizon?

- Evaluating Generative AI: How do you “score” a poem written by an AI, or a multi-turn conversation that’s helpful and empathetic? This is the million-dollar question. The trend is moving towards using powerful LLMs as evaluators themselves (e.g., asking GPT-4 to rate a response from another model on a scale of 1-10 for helpfulness).

- The Rise of Explainable AI (XAI): As models become more complex, just knowing the prediction isn’t enough. We need to know why the model made that prediction. XAI methods, which provide insights into the model’s decision-making process, are becoming a crucial part of the evaluation toolkit, especially in regulated fields like healthcare and finance.

- Human-in-the-Loop Evaluation: We’re realizing that for many nuanced tasks, automated metrics will never be enough. We’ll see more sophisticated platforms and methodologies for collecting high-quality human feedback at scale and incorporating it directly into the model evaluation and fine-tuning process.

- Standardized Benchmarking for All: While benchmarks like GLUE and SuperGLUE have been invaluable for comparing models on downstream tasks, there’s a growing need for more comprehensive benchmarks that also evaluate for robustness, fairness, and efficiency out-of-the-box.

The future of evaluation is less about a single score and more about a holistic, multi-faceted understanding of a model’s capabilities and limitations. It’s an exciting time to be in the field, and we at ChatBench.org™ are thrilled to be a part of the conversation.

📝 Conclusion: Mastering NLP KPIs for Model Success

Phew! What a journey through the fascinating world of Key Performance Indicators (KPIs) for evaluating Natural Language Processing models. From the foundational metrics like Precision, Recall, and F1-score, to the nuanced semantic measures like BERTScore, and the efficiency and fairness indicators that keep models practical and ethical — we’ve covered the full spectrum.

Here’s the bottom line from the ChatBench.org™ AI research team:

- No single KPI tells the whole story. The best evaluation strategy combines multiple metrics tailored to your specific NLP task, whether it’s classification, generation, or dialogue.

- Context matters. Metrics like perplexity are indispensable for language modeling, but they must be interpreted carefully, considering vocabulary and preprocessing differences.

- Real-world impact is king. Beyond numbers, KPIs like task completion rate and user satisfaction define success in conversational AI applications.

- Robustness and fairness are non-negotiable. Models that perform well only on clean, balanced data but fail in the wild or show bias are liabilities, not assets.

- Quality data is your foundation. Without a carefully curated and annotated evaluation dataset, your KPIs are just numbers without meaning.

- Future-proof your evaluation. Embrace explainability, human-in-the-loop feedback, and evolving benchmarks to stay ahead in this fast-moving field.

If you’re building or evaluating NLP models, take these insights to heart. Use them to design comprehensive, transparent, and meaningful evaluation pipelines that not only measure performance but also build trust and deliver real value.

🔗 Recommended Links for Deepening Your NLP KPI Knowledge

Ready to dive deeper or start experimenting with top NLP models and tools? Check out these handpicked resources and products:

- NVIDIA GPUs for NLP Model Training and Inference

- Cloud Platforms for Scalable NLP Workloads

- Books on NLP and Model Evaluation

- Annotation Tools and Sample Size Calculators

❓ Frequently Asked Questions About NLP Model KPIs

What metrics best measure the accuracy of natural language processing models?

Answer:

The choice depends on the task type and dataset characteristics. For classification tasks, Precision, Recall, and F1-score are the gold standards, especially when dealing with imbalanced classes. Accuracy can be misleading if the dataset is skewed. For generative language models, Perplexity and Cross-Entropy Loss are key intrinsic metrics that evaluate how well the model predicts the next token. For text generation tasks like translation or summarization, metrics such as BLEU, ROUGE, and METEOR provide insight into word overlap, while embedding-based metrics like BERTScore assess semantic similarity. Always consider multiple metrics to get a holistic view.

How do precision and recall impact the evaluation of NLP systems?

Answer:

Precision and recall represent two sides of the error trade-off. Precision measures how many of the positive predictions are correct, minimizing false positives, which is crucial in applications where false alarms are costly (e.g., spam filters). Recall measures how many actual positives are detected, minimizing false negatives, which is vital in safety-critical domains like medical diagnosis. Balancing these metrics is essential because optimizing for one often harms the other. The F1-score harmonizes precision and recall into a single metric that balances both concerns.

What role does F1 score play in assessing natural language processing performance?

Answer:

The F1-score is the harmonic mean of precision and recall, providing a single, interpretable number that balances the trade-off between false positives and false negatives. It is especially useful when classes are imbalanced or when both types of errors carry significant consequences. Reporting the F1-score alongside confidence intervals gives a statistically robust picture of model performance. In many clinical NLP evaluations, the F1-score is used as a benchmark to compare models and human annotator agreement.

How can model interpretability improve competitive advantage in AI-driven NLP solutions?

Answer:

Interpretability, often achieved through Explainable AI (XAI) techniques, allows stakeholders to understand why a model makes certain predictions. This transparency builds trust, especially in regulated industries like healthcare and finance, where decisions must be auditable. Interpretability helps identify biases and errors, enabling developers to improve model fairness and robustness. From a business perspective, explainable models reduce risk, facilitate compliance, and improve user acceptance—key factors that translate into a competitive edge in deploying AI solutions.

How do dataset quality and annotation impact NLP model evaluation?

Answer:

High-quality, well-annotated datasets are the backbone of reliable NLP model evaluation. Poor annotations or unrepresentative samples can skew KPIs, leading to over- or under-estimation of model performance. Measuring Inter-Annotator Agreement (IAA) ensures that the task is well-defined and that human labels are consistent, setting an upper bound for model performance. Tools like SLiCE help determine statistically robust sample sizes for evaluation, ensuring confidence in reported metrics.

Why is efficiency important alongside accuracy in NLP model KPIs?

Answer:

In real-world applications, a model that is accurate but slow or resource-intensive can be impractical. KPIs like inference latency, throughput, and memory footprint directly affect user experience and operational costs. Efficient models enable deployment on edge devices, reduce cloud compute expenses, and support real-time applications. Balancing accuracy with efficiency is critical for scalable, cost-effective AI solutions.

📚 Reference Links and Further Reading

- Understanding Evaluation Metrics for Language Modeling — The Gradient

- Systematic Review on NLP and ML in Emergency Department Triage — BMC Emergency Medicine

- Robust and Representative Evaluations of Clinical NLP Systems — PMC

- Google BERT Research Team

- OpenAI GPT Models

- NVIDIA Data Center GPUs

- Inception Annotation Tool

- SLiCE Sample Size Calculator

- Amazon Books on NLP and Deep Learning

We hope this comprehensive guide empowers you to evaluate NLP models like a pro and turn AI insight into your competitive edge! 🚀