
How Often Are AI Benchmarks for NLP Updated? 🤖 (2025)

Ever wondered how often those AI benchmarks that measure natural language processing (NLP) models get updated? Spoiler alert: it’s not on a neat, predictable schedule. Instead, the update cycle is a dynamic dance driven by breakthroughs, community needs, and the relentless pace of AI innovation. From the early days when benchmarks lasted years, to today’s whirlwind where new challenges emerge almost overnight, understanding this rhythm is crucial for anyone working with or relying on NLP technologies.

In this article, we’ll unpack the fascinating lifecycle of NLP benchmarks—why they get retired, how new ones are born, and what it means for AI developers and businesses alike. Curious about how the rise of large language models like GPT-4 has shaken up the benchmarking world? Or how fairness, efficiency, and real-world relevance are reshaping evaluation standards? Stick around, because we’re diving deep into all that and more with insights from our expert team at ChatBench.org™.

Key Takeaways

- NLP benchmarks are updated organically, not on fixed schedules, driven by model saturation and new capabilities.

- Breakthroughs like Transformer architectures and LLMs accelerate the need for tougher, more comprehensive benchmarks.

- Modern benchmarks evaluate beyond accuracy, including fairness, robustness, interpretability, and efficiency.

- Community-driven platforms and industry giants both play vital roles in evolving benchmarks.

- Choosing the right benchmark strategically is essential for meaningful AI evaluation and deployment.

Table of Contents

- ⚡️ Quick Tips and Facts

- 🕰️ The Ever-Evolving Landscape of NLP: A Historical Perspective on Benchmarking

- 🤔 What Exactly Are NLP Benchmarks, and Why Do They Matter So Much?

- 🚀 The Relentless Pace of Progress: Why NLP Benchmarks Can’t Stand Still

- 🔄 Decoding the “Update Cycle”: How Frequently Do NLP Benchmarks Get a Refresh?

- 💥 The Great Benchmark Reset: When SOTA Models Break the Mold

- 🚧 Navigating the Labyrinth: Challenges in Maintaining Relevant NLP Benchmarks

- 🌐 Key Players and Platforms Driving NLP Benchmark Evolution

- Academic Institutions and Research Labs: The Foundation

- Industry Giants (Google, OpenAI, Meta, Microsoft): Pushing Boundaries

- Open-Source Communities and Platforms (Hugging Face, Papers With Code): Democratizing Benchmarking

- Dedicated Benchmark Initiatives (GLUE, SuperGLUE, MMLU): Standardizing Evaluation

- 🔬 Beyond Accuracy: Emerging Trends in NLP Evaluation

- 💡 Our ChatBench.org™ Perspective: Practical Advice for Researchers and Developers

- ✅ Conclusion: The Unending Quest for Better NLP Benchmarks

- 🔗 Recommended Links

- ❓ FAQ: Your Burning Questions About NLP Benchmarking Answered

- 📚 Reference Links

Hey there, fellow AI enthusiasts! It’s the team from ChatBench.org™, your friendly neighborhood AI researchers and machine-learning engineers. We live and breathe this stuff, turning complex AI insights into a competitive edge for you. Today, we’re tackling a question that’s buzzing in the NLP community: How often are AI benchmarks for natural language processing updated?

If you’re expecting a simple “every six months” or “annually,” you’re in for a wild ride! The real answer is far more chaotic, exciting, and frankly, more interesting. It’s less like a scheduled software update and more like an evolutionary arms race. Let’s dive in!

⚡️ Quick Tips and Facts

In a hurry? Here’s the lowdown on NLP benchmark updates:

- No Fixed Schedule: Benchmarks aren’t updated on a calendar. They evolve or are replaced when the AI community deems them “solved” or no longer challenging for the latest models.

- “Solved” is a Loaded Term: When a model surpasses the “human baseline” on a benchmark, it’s often called “saturated.” This doesn’t mean the AI has human-level understanding; it often means it has gotten really good at exploiting the specific patterns of that test.

- Driven by Breakthroughs: Major architectural shifts, like the invention of the Transformer model, are the biggest catalysts for creating new, harder benchmarks.

- Community is Key: The update cycle is largely driven by the collective efforts of academic institutions, major tech companies like Google and OpenAI, and open-source communities like Hugging Face.

- It’s Accelerating: Historically, it took decades for AI to reach human performance on benchmarks. Now, as seen with benchmarks like GLUE, it can happen in just a few years, or even months.

- Looking for the basics? Check out our comprehensive guide on What are the most widely used AI benchmarks for natural language processing tasks? to get up to speed.

🕰️ The Ever-Evolving Landscape of NLP: A Historical Perspective on Benchmarking

To understand how often benchmarks are updated, you first need to appreciate how ridiculously fast the field of NLP is moving. Think of it like this: in the early days, NLP progress was like a leisurely stroll in the park. Benchmarks were created and stood as the gold standard for years, sometimes decades.

Then, the game changed. As the video embedded in this article mentions, we’re in a golden age of rapid breakthroughs. We went from models struggling with basic grammar to AI assistants on our phones and AI champions on Jeopardy. This acceleration put immense pressure on our evaluation tools.

A prime example is the GLUE (General Language Understanding Evaluation) benchmark, introduced in 2018. It was a fantastic collection of nine different tasks designed to be a one-stop-shop for measuring a model’s general language smarts. For a moment, it was the Mount Everest of NLP. But then, models like Google’s BERT and its successors came along and, within a startlingly short time, began to outperform the non-expert human baseline.

This rapid saturation is a modern phenomenon. What used to take ages now happens in the blink of an eye. This forced the community’s hand, leading to the creation of SuperGLUE in 2019—a “stickier” benchmark with more difficult tasks designed to be a tougher challenge. And guess what? The top models have now surpassed the human baseline on SuperGLUE, too. The treadmill just keeps getting faster!

🤔 What Exactly Are NLP Benchmarks, and Why Do They Matter So Much?

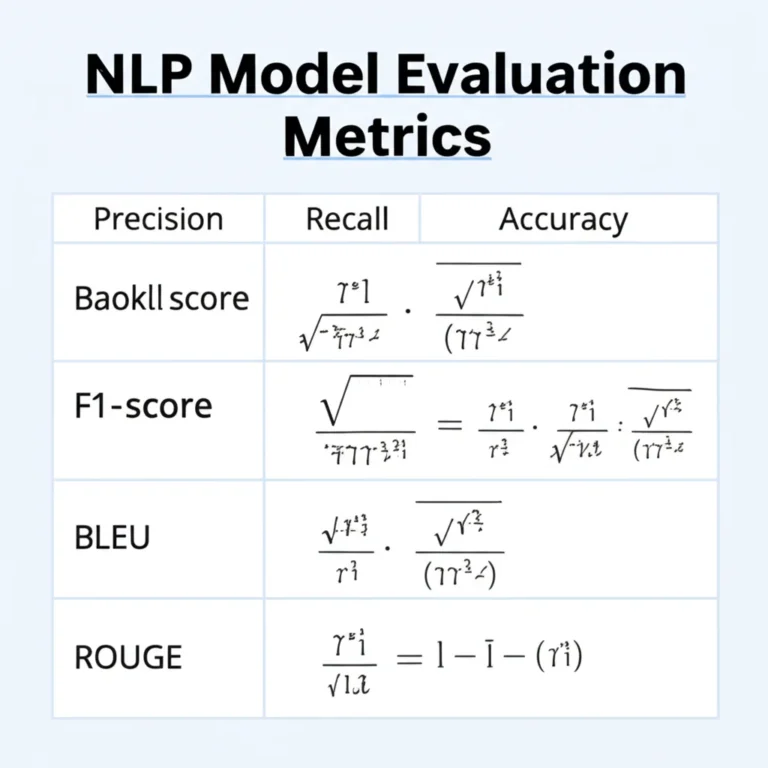

So, what are these “benchmarks” we keep talking about? At their core, benchmarks are standardized tests for AI models. They consist of a dataset (like a collection of questions, sentences, or documents) and a specific metric (like accuracy) to score a model’s performance. Think of them as the SATs for AI.
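To make the "dataset plus metric" idea concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the five-example dataset, the `keyword_model` stand-in, and the `run_benchmark` helper are our own hypothetical names, not part of any real benchmark.

```python
from typing import Callable, List, Sequence, Tuple

def accuracy(predictions: Sequence[str], references: Sequence[str]) -> float:
    """Fraction of predictions that exactly match the gold labels."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("cannot score an empty dataset")
    return sum(p == r for p, r in zip(predictions, references)) / len(references)

# Hypothetical toy dataset: (input text, gold label) pairs.
TOY_BENCHMARK: List[Tuple[str, str]] = [
    ("I loved this film", "positive"),
    ("Terrible service", "negative"),
    ("Absolutely wonderful", "positive"),
    ("I want a refund", "negative"),
    ("Not bad at all", "positive"),  # the keyword rule below misses this one
]

def keyword_model(text: str) -> str:
    """Stand-in 'model': a naive keyword rule, used only for illustration."""
    lowered = text.lower()
    return "positive" if ("loved" in lowered or "wonderful" in lowered) else "negative"

def run_benchmark(model: Callable[[str], str], dataset: List[Tuple[str, str]]) -> float:
    """Score a model on a dataset with the accuracy metric."""
    predictions = [model(text) for text, _ in dataset]
    references = [label for _, label in dataset]
    return accuracy(predictions, references)

score = run_benchmark(keyword_model, TOY_BENCHMARK)
print(f"accuracy = {score:.2f}")
```

Real benchmarks differ mainly in scale and in the metric they pick, but the shape is the same: fixed inputs, fixed gold answers, one scoring function.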

They are absolutely critical for a few reasons:

- Measuring Progress: They provide a standardized ruler to measure whether new models are actually better than the old ones. Without them, we’d be lost in a sea of marketing claims.

- Driving Competition: Public leaderboards, like those on Papers With Code or the Hugging Face Open LLM Leaderboard, create a competitive environment that spurs innovation.

- Identifying Weaknesses: When a model fails on a specific benchmark task, it gives us researchers valuable clues about where its understanding is brittle and what we need to work on next.

However, it’s not all roses. As one Harvard Medical School article points out, just “chasing leaderboards leads to the development of LLMs that are really good at answering questions found in the benchmark exams, but it’s not clear how this kind of performance relates to clinical use.” This is a crucial point: excelling at a benchmark is not the same as true understanding or real-world utility.

🚀 The Relentless Pace of Progress: Why NLP Benchmarks Can’t Stand Still

Why can’t we just create one perfect benchmark and stick with it? Because the technology it’s meant to measure is a moving target. The primary driver of benchmark updates is the relentless, jaw-dropping progress in AI model architecture.

The introduction of Transformer models, detailed in the seminal paper “Attention Is All You Need,” was a seismic shift. Models like BERT, GPT (from OpenAI), and T5 (from Google) didn’t just inch the needle forward; they broke the entire speedometer. These models could process language with a level of contextual awareness that was previously unimaginable.

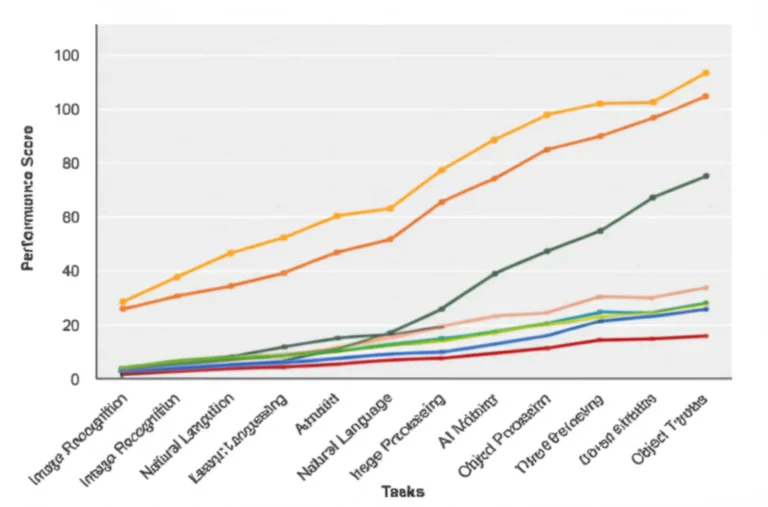

As a result, they started “saturating” existing benchmarks, meaning they achieved scores so high that the tests were no longer effective at distinguishing between good and great models. This is the core reason benchmarks must constantly be retired and replaced with more challenging ones. It’s a direct reflection of the incredible advances happening in the field.

🔄 Decoding the “Update Cycle”: How Frequently Do NLP Benchmarks Get a Refresh?

Alright, let’s get to the heart of the matter. There is no formal committee that decides, “It’s time to update our NLP benchmarks!” Instead, the “update cycle” is an organic, emergent property of the research community. It’s a dynamic process influenced by several key factors.

Factors Influencing Benchmark Update Frequency

- Model Saturation: This is the big one. When top models consistently hit near-perfect scores or surpass human performance, the benchmark loses its utility. It’s like giving a first-grade arithmetic quiz to a room of math majors: everyone aces it, so the scores don’t tell you anything useful.

- Discovery of “Shortcuts”: Researchers often find that models aren’t “understanding” the task but are instead using “superficial strategies” or exploiting biases in the dataset to get the right answer. The featured video above gives a great example of this, where models can be easily fooled by adversarial examples. This discovery often invalidates the benchmark’s claims of measuring true intelligence and prompts the creation of more robust tests.

- Emergence of New Capabilities: As models get more powerful, they can perform tasks that weren’t even conceivable a few years ago. This leads to the creation of entirely new benchmarks to measure these new skills, like complex, multi-step reasoning.

- Community Consensus: A new benchmark gains traction when a critical mass of researchers and developers in the community agrees that it’s a meaningful and challenging test. This is often solidified when major AI labs start reporting their results on it.
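The saturation factor above can be turned into a rough check. This is a sketch under our own assumptions: the `saturation_report` name, the "top three within one point" rule, and the leaderboard numbers are all invented for illustration, and no leaderboard we know of uses this exact test.

```python
from typing import Dict, Sequence

def saturation_report(
    scores: Sequence[float],
    human_baseline: float,
    top_k: int = 3,
    min_spread: float = 0.01,
) -> Dict[str, object]:
    """Flag a benchmark as 'looking saturated' when the best model beats the
    human baseline AND the top-k models are bunched within min_spread."""
    if not scores:
        raise ValueError("need at least one score")
    top = sorted(scores, reverse=True)[:top_k]
    best = top[0]
    spread = top[0] - top[-1]
    beats_human = best >= human_baseline
    bunched = len(top) >= 2 and spread < min_spread
    return {
        "best": best,
        "beats_human": beats_human,
        "top_spread": spread,
        "looks_saturated": beats_human and bunched,
    }

# Invented leaderboard numbers, for illustration only.
crowded = saturation_report([0.905, 0.903, 0.899, 0.850], human_baseline=0.871)
open_field = saturation_report([0.60, 0.50, 0.40], human_baseline=0.90)
print(crowded["looks_saturated"], open_field["looks_saturated"])
```

The thresholds are a judgment call. The point is only that "saturated" combines two signals: little headroom left, and little separation between the leaders.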

The Role of New Datasets and Evaluation Metrics

An “update” rarely means tweaking an old benchmark. More often, it means the introduction of a completely new one. The MMLU (Massive Multitask Language Understanding) benchmark is a perfect example. Released in 2020, it was specifically designed to be more challenging than predecessors like GLUE. It covers 57 diverse subjects, from law and history to computer science, testing a model’s broad knowledge and problem-solving skills. When it was released, most models scored near random chance (25%, because every question is multiple choice with four options), showing just how much harder it was.
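Where does a chance level like 25% come from, and how far above it does a score need to be before it means anything? A quick sketch, assuming four answer options per question and a normal approximation to the binomial (our simplification). The accuracies and question count below are illustrative, not reported results.

```python
import math

def chance_accuracy(num_options: int) -> float:
    """Expected accuracy of uniform random guessing on multiple-choice questions."""
    if num_options < 2:
        raise ValueError("need at least two answer options")
    return 1.0 / num_options

def z_score_vs_chance(accuracy: float, num_questions: int, num_options: int) -> float:
    """How many standard errors an observed accuracy sits above chance,
    using the normal approximation to the binomial distribution."""
    if num_questions <= 0:
        raise ValueError("need at least one question")
    p = chance_accuracy(num_options)
    standard_error = math.sqrt(p * (1 - p) / num_questions)
    return (accuracy - p) / standard_error

# Illustrative numbers: 1,000 four-option questions.
z_near_chance = z_score_vs_chance(0.26, num_questions=1000, num_options=4)
z_clearly_better = z_score_vs_chance(0.44, num_questions=1000, num_options=4)
print(f"{z_near_chance:.2f} vs {z_clearly_better:.2f}")
```

A z-score below roughly 2 means the model is statistically hard to tell apart from guessing, which is exactly the situation a freshly released, genuinely hard benchmark tends to produce.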

Community-Driven Updates vs. Centralized Initiatives

The evolution of benchmarks happens on two parallel tracks:

- ✅ Centralized Initiatives: These are formally proposed benchmarks like GLUE and SuperGLUE, often created by a consortium of academic and industry researchers. They come with a specific set of tasks, a leaderboard, and a paper explaining their methodology.

- ✅ Community-Driven Platforms: This is a more decentralized approach, best exemplified by the Hugging Face Hub. Here, anyone can upload a dataset or create a leaderboard for a new task. This allows for a more rapid and chaotic—but also more diverse and innovative—evolution of evaluation methods.

💥 The Great Benchmark Reset: When SOTA Models Break the Mold

Every so often, a new model or architecture comes along that is so powerful it doesn’t just saturate a benchmark; it fundamentally changes how we think about evaluation. This forces a “great reset” in the benchmarking world.

The Rise of Large Language Models (LLMs) and Their Benchmark Impact

The modern era of Large Language Models (LLMs) like OpenAI’s GPT-4, Google’s Gemini, and Meta’s Llama series has been the single biggest catalyst for this reset. These models, with their vast scale and emergent capabilities, blew past the challenges posed by benchmarks like SuperGLUE.

Their success highlighted that older benchmarks, while useful, were too narrow. They didn’t effectively test for things like:

- Long-form coherence and reasoning.

- Factual accuracy and the tendency to “hallucinate.”

- Safety, bias, and alignment with human values.

- Real-world, multi-step problem-solving.

This has led to a flurry of new, more holistic evaluation frameworks and benchmarks designed specifically for the LLM era, such as MMLU and BIG-bench. You can explore more of these on our LLM Benchmarks category page.

The Challenge of “Benchmark Saturation” and Its Implications

Benchmark saturation is the term for when AI models approach the maximum possible score, or match or exceed human-level performance, on a test. While it sounds like a victory, it’s actually a problem for researchers because the benchmark is no longer a useful tool for differentiating better models.

This doesn’t mean the AI has “solved” language. It often means the AI has become exceptionally good at test-taking for that specific test. As the featured video highlights, these models can still exhibit “catastrophic failure” on related tasks and are vulnerable to adversarial attacks, proving they lack deep, generalizable understanding. The illusion of understanding is a constant trap we must avoid.

🚧 Navigating the Labyrinth: Challenges in Maintaining Relevant NLP Benchmarks

Keeping benchmarks relevant is a Herculean task, fraught with challenges that go far beyond just making the questions harder.

Dataset Drift and Real-World Relevance: Keeping Benchmarks Fresh

Language is not static. It evolves with culture, current events, and new slang. A dataset created in 2018 might not reflect the language used on the internet today. This phenomenon is called dataset drift, where the statistical properties of the data a model sees in the real world change over time, diverging from the data it was trained on. A benchmark based on a static dataset will inevitably become less relevant as the world changes. This is a huge challenge for creating long-lasting, meaningful evaluations.
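One crude way to see drift is to measure how many tokens in fresh text never appeared in the benchmark's own text. The sketch below uses an out-of-vocabulary rate, which is our choice of proxy rather than a standard drift metric. The regex tokenizer and the example sentences are hypothetical.

```python
import re
from typing import Iterable, List

def tokenize(text: str) -> List[str]:
    """Very rough tokenizer: lowercase runs of letters, digits, and apostrophes."""
    return re.findall(r"[a-z0-9']+", text.lower())

def oov_rate(reference_texts: Iterable[str], new_texts: Iterable[str]) -> float:
    """Share of tokens in new_texts that never occur in reference_texts."""
    vocabulary = {tok for text in reference_texts for tok in tokenize(text)}
    new_tokens = [tok for text in new_texts for tok in tokenize(text)]
    if not new_tokens:
        raise ValueError("new_texts contains no tokens")
    unseen = sum(tok not in vocabulary for tok in new_tokens)
    return unseen / len(new_tokens)

# Hypothetical snippets: an older benchmark vs. text collected later.
benchmark_texts = ["the movie was great", "the phone is slow"]
recent_texts = ["the movie was mid", "this phone is slow fr"]

rate = oov_rate(benchmark_texts, recent_texts)
print(f"out-of-vocabulary rate: {rate:.2f}")
```

A rising rate over time is a hint, not proof, that the benchmark's language is aging. Comparing full token distributions would give a more sensitive signal.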

The Cost and Effort of Benchmark Creation and Maintenance

Let’s be real: creating a high-quality benchmark is incredibly expensive and time-consuming. It requires:

- Expert Curation: Designing tasks that are both challenging and meaningful.

- Data Collection: Gathering or creating massive amounts of high-quality data.

- Human Annotation: Paying human annotators, sometimes thousands of them, to create the “gold standard” labels, which is often the biggest bottleneck.

- Infrastructure: Hosting the data, running the leaderboard, and maintaining the submission system.

This is why many of the most influential benchmarks come from large, well-funded academic labs or major tech corporations.

Ensuring Fairness and Mitigating Bias in NLP Benchmarking

This is one of the most critical challenges we face. AI models are known to learn and even amplify the societal biases present in their training data. An article from the NCBI highlights the critical need to train AI on diverse datasets to ensure fair outcomes. If our benchmarks don’t explicitly test for fairness and bias across different demographic groups, we risk creating models that are harmful and inequitable. Modern benchmark design is increasingly focused on including metrics for fairness, toxicity, and stereotype detection.
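A simple starting point for testing fairness is to break a single accuracy number down by group and look at the gap. This sketch assumes each test example carries a group tag. The `accuracy_by_group` and `max_accuracy_gap` helpers and the records are hypothetical, and real fairness evaluations use richer metrics than this one.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def accuracy_by_group(records: Iterable[Tuple[str, str, str]]) -> Dict[str, float]:
    """records are (group, prediction, reference) triples."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, prediction, reference in records:
        total[group] += 1
        correct[group] += int(prediction == reference)
    if not total:
        raise ValueError("no records to score")
    return {group: correct[group] / total[group] for group in total}

def max_accuracy_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())

# Invented evaluation records, for illustration only.
RECORDS = [
    ("group_a", "yes", "yes"), ("group_a", "no", "no"),
    ("group_a", "yes", "yes"), ("group_a", "no", "no"),
    ("group_b", "yes", "yes"), ("group_b", "yes", "no"),
    ("group_b", "no", "no"), ("group_b", "no", "yes"),
]

per_group = accuracy_by_group(RECORDS)
gap = max_accuracy_gap(per_group)
print(per_group, gap)
```

Here the overall accuracy of 75% hides the fact that one group is served perfectly and the other only half the time, which is the kind of disparity an aggregate score can mask.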

The Reproducibility Crisis in AI Research: A Benchmark Perspective

There’s a growing concern in the AI community known as the “reproducibility crisis.” Researchers have found it surprisingly difficult to reproduce the results reported in many AI papers. Benchmarks are supposed to be the antidote to this, providing a standard, objective way to verify claims.

However, for this to work, everything must be transparent: the exact dataset version, the model code, the hyperparameters, and the evaluation script. Even small, undocumented details can lead to wildly different results. This is why there’s a huge push for open-sourcing code and models, a philosophy championed by platforms like Hugging Face and a key focus of our Developer Guides.
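One lightweight habit that helps here is saving a small "run manifest" next to every reported score. The sketch below is our own suggestion, not a standard format: the field names, the `build_manifest` helper, and the model name are hypothetical. It uses only the Python standard library.

```python
import hashlib
import json
import platform
from typing import Any, Dict, List

def dataset_fingerprint(examples: List[Dict[str, Any]]) -> str:
    """SHA-256 over a canonical JSON dump, so any edit to the data changes the hash."""
    payload = json.dumps(examples, sort_keys=True, ensure_ascii=False).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def build_manifest(
    examples: List[Dict[str, Any]],
    model_name: str,
    hyperparameters: Dict[str, Any],
    seed: int,
) -> Dict[str, Any]:
    """Collect the details someone else would need to rerun the evaluation."""
    return {
        "dataset_sha256": dataset_fingerprint(examples),
        "num_examples": len(examples),
        "model_name": model_name,
        "hyperparameters": hyperparameters,
        "seed": seed,
        "python_version": platform.python_version(),
    }

# Hypothetical evaluation set and settings.
EXAMPLES = [
    {"question": "2 + 2 = ?", "answer": "4"},
    {"question": "Capital of France?", "answer": "Paris"},
]
manifest = build_manifest(
    EXAMPLES,
    model_name="my-model-v1",  # placeholder name
    hyperparameters={"temperature": 0.0, "max_new_tokens": 16},
    seed=1234,
)
print(json.dumps(manifest, indent=2))
```

If two teams report different scores, comparing manifests is a fast way to spot whether they actually evaluated on the same data with the same settings.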

🌐 Key Players and Platforms Driving NLP Benchmark Evolution

The evolution of NLP benchmarks isn’t the work of a single entity. It’s a vibrant ecosystem of collaborating (and competing) players.

Academic Institutions and Research Labs: The Foundation

Universities and research institutes like NYU (where GLUE originated, in collaboration with the University of Washington and DeepMind), Stanford (home of SQuAD and HELM), Carnegie Mellon, and the Allen Institute for AI (AI2) have historically been the bedrock of benchmark development. Their research lays the theoretical groundwork and produces many of the foundational datasets.

Industry Giants (Google, OpenAI, Meta, Microsoft): Pushing Boundaries

The big tech labs have the massive computational resources and engineering talent to build and train the state-of-the-art models that break existing benchmarks.

- Google AI: Pioneers of the Transformer, BERT, and T5 models.

- OpenAI: Creators of the GPT series and major contributors to scaling laws research.

- Meta AI: Known for open-sourcing powerful models like Llama and contributing to reproducibility.

- Microsoft Research: A long-standing powerhouse in AI research, contributing across all areas of NLP.

Their work directly fuels the need for the “next generation” of harder benchmarks.

Open-Source Communities and Platforms (Hugging Face, Papers With Code): Democratizing Benchmarking

These platforms are the connective tissue of the modern AI ecosystem.

- Hugging Face: More than just a model repository, their Hub includes thousands of datasets and leaderboards, making it easy for anyone to participate in benchmarking. They provide tools like the evaluate library to standardize metric calculation.

- Papers With Code: A fantastic resource that links research papers to the code and datasets used, complete with leaderboards for hundreds of benchmarks. It’s an essential tool for tracking the state of the art.

Dedicated Benchmark Initiatives (GLUE, SuperGLUE, MMLU): Standardizing Evaluation

These are the “brand name” benchmarks that serve as focal points for the community.

- GLUE & SuperGLUE: The classics that defined an era of NLP evaluation, pushing for general-purpose models.

- MMLU: A more modern benchmark designed to test the broad, multi-task knowledge of today’s massive LLMs.

- HELM: Stanford’s Holistic Evaluation of Language Models is an ambitious effort to create a more comprehensive benchmark, evaluating models on many more dimensions than just accuracy, including fairness, robustness, and efficiency.

🔬 Beyond Accuracy: Emerging Trends in NLP Evaluation

The community is realizing that a single accuracy score is a woefully incomplete picture of a model’s capabilities. The future of benchmarking is about moving to a more holistic and nuanced evaluation.

Evaluating Robustness and Generalization: Beyond the Training Data

How does a model perform when the input is slightly different from what it’s seen before? Robustness evaluation tests a model’s stability against small perturbations or adversarial examples. Studies have shown that even top-performing LLMs can see their accuracy drop significantly when questions are simply paraphrased, raising concerns about their true generalization abilities. A model isn’t truly intelligent if it’s easily tricked.
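Here is a tiny sketch of what a paraphrase robustness check looks like in practice. The `brittle_model` below is a deliberately fragile stand-in we wrote for illustration, and both question sets are invented. A real study would use an actual model and a carefully built paraphrase set.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (question, gold answer)

def accuracy(model: Callable[[str], str], examples: List[Example]) -> float:
    """Exact-match accuracy of a model over (question, answer) pairs."""
    if not examples:
        raise ValueError("cannot score an empty dataset")
    return sum(model(question) == answer for question, answer in examples) / len(examples)

def brittle_model(question: str) -> str:
    """Hypothetical stand-in that keys on one surface phrasing."""
    lowered = question.lower()
    if lowered.startswith("what is the capital of france"):
        return "Paris"
    if lowered.startswith("what is the capital of japan"):
        return "Tokyo"
    return "unknown"

ORIGINAL: List[Example] = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Japan?", "Tokyo"),
]
PARAPHRASED: List[Example] = [
    ("France's capital city is called what?", "Paris"),
    ("What is the capital of Japan, please?", "Tokyo"),
]

original_accuracy = accuracy(brittle_model, ORIGINAL)
paraphrased_accuracy = accuracy(brittle_model, PARAPHRASED)
robustness_drop = original_accuracy - paraphrased_accuracy
print(original_accuracy, paraphrased_accuracy, robustness_drop)
```

The drop between the two numbers is the interesting quantity: a model that truly handles the task should score about the same on both sets.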

Assessing Interpretability and Explainability: Understanding the “Why”

As AI models are used in high-stakes domains like medicine and finance, we need to understand why they make the decisions they do. This is the field of Explainable AI (XAI). Techniques like LIME and SHAP help us peek inside the “black box” to see which words or phrases most influenced a model’s output. Future benchmarks will increasingly incorporate metrics that measure how interpretable a model’s reasoning is.

Measuring Efficiency and Resource Consumption: Green AI Benchmarking

Training massive LLMs consumes an enormous amount of energy, contributing to a significant carbon footprint. The “Green AI” movement advocates for a shift in focus, where efficiency is considered a primary evaluation metric alongside accuracy. This means rewarding models that achieve high performance with less computational cost. Benchmarking platforms are starting to track metrics like model size, training time, and energy consumption.
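Energy use is hard to measure without dedicated tooling, but you can at least report latency next to accuracy. The sketch below does that with wall-clock time as a rough efficiency proxy. That proxy is our assumption, and the `timed_accuracy` helper and toy model are hypothetical.

```python
import time
from typing import Callable, Dict, List, Tuple

def timed_accuracy(
    model: Callable[[str], str],
    examples: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Report accuracy together with wall-clock cost per example."""
    if not examples:
        raise ValueError("cannot score an empty dataset")
    start = time.perf_counter()
    predictions = [model(text) for text, _ in examples]
    elapsed = time.perf_counter() - start
    correct = sum(pred == label for pred, (_, label) in zip(predictions, examples))
    return {
        "accuracy": correct / len(examples),
        "total_seconds": elapsed,
        "seconds_per_example": elapsed / len(examples),
    }

def length_model(text: str) -> str:
    """Toy stand-in: labels text by character count only."""
    return "long" if len(text) > 10 else "short"

EXAMPLES = [
    ("hi", "short"),
    ("a much longer sentence", "long"),
    ("medium one!", "short"),  # 11 characters, so the toy model gets this wrong
]

result = timed_accuracy(length_model, EXAMPLES)
print(result)
```

Reporting both columns makes trade-offs visible: a model that is one point more accurate but ten times slower may not be the better pick.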

Human-in-the-Loop Evaluation: The Ultimate Reality Check

For many complex, subjective tasks (like evaluating the quality of a generated story or the helpfulness of a chatbot’s response), automated metrics just don’t cut it. Human-in-the-Loop (HITL) evaluation, where human experts rate model outputs, is becoming the gold standard for these scenarios. As the Harvard article powerfully argues, collaboration between clinicians and computer scientists is essential for creating benchmarks that reflect real clinical utility. Platforms like Label Studio are making it easier to manage these complex human evaluation workflows.
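Human ratings are only useful if the raters agree with each other more than chance would predict. A common way to check that is Cohen's kappa, which we are bringing in here as an illustration (the article's sources don't prescribe it). The ratings below are invented.

```python
from collections import Counter
from typing import Sequence

def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Agreement between two raters, corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement: probability both raters pick the same label independently.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Invented ratings of four chatbot responses by two human raters.
RATER_A = ["good", "good", "bad", "bad"]
RATER_B = ["good", "bad", "bad", "bad"]

kappa = cohens_kappa(RATER_A, RATER_B)
print(f"kappa = {kappa:.2f}")
```

The raters agree on three of four items, yet kappa is only 0.5 because half of that agreement would be expected by chance. Low kappa usually means the rating guidelines need work before the human scores can be trusted.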

💡 Our ChatBench.org™ Perspective: Practical Advice for Researchers and Developers

So, what does this all mean for you, the person building or using these models? Here’s our take from the trenches at ChatBench.org™.

Choosing the Right Benchmarks for Your Project: A Strategic Approach

Don’t just chase the top score on the most famous leaderboard.

- Define Your Goal: What is the specific task you need the model to do? Is it sentiment analysis, document summarization, or a complex chatbot for a specific industry? Your goal dictates the benchmark.

- Go Beyond Accuracy: Look at benchmarks that measure robustness, fairness, and efficiency. A slightly less accurate model that is more reliable and less biased might be a much better choice for your AI Business Applications.

- Use a Battery of Tests: Never rely on a single benchmark score. Test your chosen models on a suite of relevant benchmarks to get a more complete picture of their strengths and weaknesses. Our Model Comparisons section often explores this multi-faceted approach.

- Create Your Own “Private” Benchmark: The best benchmark is one that uses data from your own specific use case. Create a small, high-quality test set that reflects the real-world challenges your model will face.
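The "battery of tests" and "private benchmark" tips above can be combined in a few lines. This is a minimal sketch: the `evaluate_suite` helper, the `toy_model`, and both test sets are hypothetical placeholders for your own model and data.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]  # (input text, gold label)

def evaluate_suite(
    model: Callable[[str], str],
    suite: Dict[str, List[Example]],
) -> Dict[str, float]:
    """Run one model over several named test sets and return accuracy per set."""
    results: Dict[str, float] = {}
    for name, examples in suite.items():
        if not examples:
            raise ValueError(f"test set {name!r} is empty")
        correct = sum(model(text) == label for text, label in examples)
        results[name] = correct / len(examples)
    return results

def toy_model(text: str) -> str:
    """Stand-in sentiment rule that only knows two negative cues."""
    lowered = text.lower()
    return "negative" if ("not" in lowered.split() or "broken" in lowered) else "positive"

SUITE: Dict[str, List[Example]] = {
    "public_reviews": [
        ("great movie", "positive"),
        ("not good", "negative"),
    ],
    # A small private set drawn from (hypothetical) real support tickets.
    "private_support_tickets": [
        ("app keeps crashing", "negative"),
        ("screen is broken", "negative"),
        ("thanks, all fixed", "positive"),
        ("login fails again", "negative"),
    ],
}

results = evaluate_suite(toy_model, SUITE)
weakest = min(results, key=results.get)
print(results, "weakest:", weakest)
```

In this toy run the model looks perfect on the public set and mediocre on the private one, which is the gap a private benchmark is meant to expose before deployment.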

Contributing to Benchmark Development: Be Part of the Solution

The field needs more diverse and challenging benchmarks. If you’ve identified a weakness in current evaluation methods or have a unique dataset, consider turning it into a public benchmark!

- Publish Your Data: Use platforms like the Hugging Face Hub to share your dataset with the community.

- Write a “Datasheet for Datasets”: Document your data creation process, its limitations, and its intended use to promote responsible usage.

- Engage in Workshops: Conferences like NeurIPS, ICML, and ACL often have workshops dedicated to new datasets and benchmarks.

Staying Ahead of the Curve in NLP Evaluation: Continuous Learning

The state of the art in NLP evaluation is constantly changing. What’s considered a tough benchmark today might be obsolete tomorrow.

- Follow Key People and Labs: Keep an eye on the publications and blog posts from the major AI labs and academic institutions mentioned above.

- Read the Papers: When a new model tops a leaderboard, read the paper to understand the techniques they used. This is a core part of our process in Fine-Tuning & Training.

- Experiment: The best way to understand the limitations of a benchmark is to use it. Get your hands dirty, run the evaluations, and see where the models fail.

✅ Conclusion: The Unending Quest for Better NLP Benchmarks

Phew! What a journey through the fascinating, fast-paced world of NLP benchmarking. To wrap it up, AI benchmarks for natural language processing are updated not on a fixed schedule but in response to the rapid advances in model capabilities and the evolving needs of the community. When a benchmark becomes “too easy” or reveals exploitable shortcuts, researchers rally to design new, more challenging tests that better reflect real-world complexities.

This dynamic cycle is driven by breakthroughs like Transformer architectures and the rise of Large Language Models, which continually push the boundaries of what AI can do. But as we’ve seen, excelling at benchmarks is only part of the story—true progress requires benchmarks that measure robustness, fairness, interpretability, and efficiency, not just raw accuracy.

From our perspective at ChatBench.org™, the key takeaway is to choose benchmarks strategically, contribute to their evolution, and stay curious and critical about what those numbers really mean. Whether you’re a researcher, developer, or business leader, understanding the nuances behind benchmark updates will help you make smarter decisions and maintain a competitive edge in AI.

And remember: benchmarks are tools, not oracles. They guide us, but the real test is how well AI models perform in the messy, unpredictable real world.

🔗 Recommended Links

Ready to explore or shop some of the tools and resources we mentioned? Here are some handy links to get you started:

- Google AI Research: Google AI

- OpenAI: OpenAI Official

- Meta AI: Meta AI

- Microsoft Research: Microsoft Research

- Hugging Face Hub: Hugging Face

- Papers With Code: Papers With Code

- Label Studio (Human-in-the-Loop Annotation): Label Studio

Books to deepen your NLP and AI benchmarking knowledge:

- “Natural Language Processing with Transformers” by Lewis Tunstall, Leandro von Werra, and Thomas Wolf

- “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

- “Artificial Intelligence: A Modern Approach” by Stuart Russell and Peter Norvig

❓ FAQ: Your Burning Questions About NLP Benchmarking Answered

What are the most popular AI benchmarks used for natural language processing?

The most widely recognized NLP benchmarks include:

- GLUE and SuperGLUE: Designed to evaluate general language understanding across multiple tasks like sentiment analysis, question answering, and textual entailment.

- MMLU (Massive Multitask Language Understanding): Tests broad knowledge across 57 subjects, challenging modern LLMs.

- SQuAD (Stanford Question Answering Dataset): Focuses on reading comprehension and question answering.

- CoQA and QuAC: Conversational question answering benchmarks.

- HELM (Holistic Evaluation of Language Models): A newer benchmark that evaluates models on multiple dimensions beyond accuracy, including fairness and robustness.

These benchmarks have become standard tools for researchers and developers to compare model performance and track progress.

How do updates in NLP benchmarks impact AI model development?

Benchmark updates have a profound influence on AI development:

- Driving Innovation: When a benchmark is saturated, it signals researchers to develop new architectures or training methods to tackle more complex challenges.

- Shaping Research Focus: Benchmarks highlight specific tasks or capabilities, guiding where resources and attention are allocated.

- Avoiding Overfitting: Updated benchmarks help prevent models from overfitting to outdated datasets or exploiting dataset biases.

- Encouraging Robustness and Fairness: Newer benchmarks often include tests for bias, interpretability, and efficiency, pushing models to improve holistically.

In essence, benchmarks act as both a compass and a motivator for the AI community.

Who decides when and how NLP benchmarks are updated?

There is no single authority. Instead, benchmark updates emerge from:

- Academic and Industry Research Labs: Groups like Stanford, AI2, Google, OpenAI, and Meta often propose new benchmarks.

- Open-Source Communities: Platforms like Hugging Face and Papers With Code facilitate community-driven benchmark creation and adoption.

- Consensus in the Research Community: A benchmark gains traction when enough researchers adopt it and publish results on it.

- Practical Needs: Sometimes, new benchmarks arise to address gaps in existing evaluations or to test emerging model capabilities.

This decentralized, collaborative process ensures benchmarks remain relevant and challenging.

What role do AI benchmarks play in gaining a competitive edge in business?

Benchmarks are crucial for businesses leveraging AI because:

- Objective Evaluation: They provide a standardized way to assess and compare AI models, helping businesses pick the best fit for their needs.

- Risk Mitigation: By understanding a model’s strengths and weaknesses via benchmarks, companies can avoid deploying models that might fail in critical scenarios.

- Innovation Tracking: Staying informed about benchmark results helps businesses anticipate technological shifts and adapt strategies accordingly.

- Customer Trust: Demonstrating that AI solutions meet or exceed benchmark standards can build credibility with clients and stakeholders.

In short, benchmarks help businesses make informed, confident AI investments that translate into real-world value.

📚 Reference Links

- IBM: What is Natural Language Processing (NLP)?

- Harvard Magazine: What Will It Take to Translate AI Research into Clinical Advances?

- PMC: The Role of AI in Hospitals and Clinics: Transforming Healthcare

- Google AI Research

- OpenAI

- Meta AI

- Microsoft Research

- Hugging Face

- Papers With Code

- Label Studio

Thanks for sticking with us! If you want to keep leveling up your AI game, be sure to explore our LLM Benchmarks, Model Comparisons, and Developer Guides for the freshest insights and hands-on tips. 🚀