
How AI Benchmarks Unlock Model Efficiency in 2025 ⚡️

Ever wondered how AI experts separate the truly efficient models from the flashy but impractical ones? AI benchmarks are the secret sauce behind this magic. They don’t just measure accuracy—they reveal how fast, resource-friendly, and sustainable a model really is. In this article, we’ll unravel the full story of how AI benchmarks help evaluate efficiency, from the classic MLPerf tests to custom benchmarks tailored for your unique needs. Plus, we’ll share insider tips from our ChatBench.org™ team on interpreting results and avoiding common pitfalls.

Here’s a teaser: Did you know that a smaller, less glamorous AI model once outperformed a state-of-the-art giant on a real client’s data—simply because it was better suited and more efficient? Stick around to discover why context and custom benchmarks often trump leaderboard glory.

Key Takeaways

- AI benchmarks provide objective, standardized metrics that go beyond accuracy to include speed, memory use, and energy consumption.

- Efficiency is a multi-dimensional trade-off—balancing latency, throughput, computational cost, and sustainability.

- Popular benchmarks like MLPerf, GLUE, ImageNet, and Hugging Face leaderboards set industry standards but may not reflect your specific use case.

- Custom benchmarks tailored to your data and goals are critical for selecting the most efficient AI model in practice.

- The future of benchmarking includes ethical fairness audits and green AI metrics to promote trustworthy and sustainable AI development.

- Our ChatBench.org™ experts recommend a holistic, continuous benchmarking approach to maximize real-world AI efficiency and impact.

Ready to turn AI benchmarking insight into your competitive edge? Let’s dive in!

Table of Contents

- ⚡️ Quick Tips and Facts

- The Genesis of AI Evaluation: A Brief History of Benchmarking Artificial Intelligence Models

- Unpacking the “Why”: The Crucial Role of AI Benchmarks in Model Efficiency and Performance

- The Anatomy of a Benchmark: Key Components and Considerations for Robust AI Evaluation

- Navigating the Labyrinth: Popular AI Benchmarking Suites and Platforms for Machine Learning Evaluation

- 1. MLPerf: The Gold Standard for AI Performance and Speed Testing

- 2. GLUE & SuperGLUE: NLP’s Toughest Tests for Language Model Efficiency

- 3. ImageNet & COCO: Visionary Benchmarks for Computer Vision Model Evaluation

- 4. Hugging Face Benchmarks: Community-Driven Evaluation for Transformers and Beyond

- 5. Custom Benchmarks: When Off-the-Shelf Won’t Cut It for Your Specific AI Use Case

- The Art of Interpretation: Decoding Benchmark Results for Real-World AI Impact and Scalability

- Beyond the Hype: The Future of AI Benchmarking, Ethical Considerations, and Green AI

- Our ChatBench.org™ Perspective: Real-World Anecdotes and Expert Recommendations for AI Model Efficiency

- Conclusion: The Unsung Heroes of AI Progress and Innovation

- Recommended Links: Dive Deeper into AI Evaluation and Benchmarking

- FAQ: Your Burning Questions About AI Benchmarks Answered

- Reference Links: Our Sources and Further Reading on AI Model Efficiency

Hello from your friendly neighborhood AI experts at ChatBench.org™! We live and breathe machine learning, and if there’s one thing that gets our circuits buzzing, it’s talking about what separates a truly efficient AI model from a glorified calculator. You’ve probably heard the hype, seen the leaderboards, and wondered, “What do all these numbers really mean?”

Well, grab your favorite caffeinated beverage, because we’re about to pull back the curtain. How do AI benchmarks help in evaluating the efficiency of AI models? It’s not just about bragging rights; it’s the very bedrock of progress in the AI world. We’ll dive into how these standardized tests act as the ultimate proving ground, pushing models to be not just smarter, but faster, leaner, and more powerful. And yes, we’ll share some of our own war stories along the way!

⚡️ Quick Tips and Facts

Before we get into the nitty-gritty, here are some key takeaways to get you started. Think of this as your cheat sheet to understanding the world of AI model evaluation.

| Quick Fact 📝 | The Lowdown 👇 |
|---|---|
| What’s a Benchmark? | An AI benchmark is a standardized test to measure and compare the performance of AI models. It’s like an SAT for algorithms, providing a common dataset and metric to see who’s top of the class. |
| Efficiency Isn’t Just Speed | While speed (latency and throughput) is key, efficiency also covers computational cost, memory usage, and energy consumption. A “fast” model that requires a supercomputer isn’t efficient for your phone! |
| TOPS: A Key Metric | TOPS (Trillions of Operations Per Second) is a standard metric for measuring the raw computational power of AI hardware like NPUs (Neural Processing Units). It helps compare the potential performance of different chips. |
| Green AI is the Future ♻️ | The environmental impact of AI is a growing concern. Benchmarks like MLPerf Power now measure energy efficiency, pushing the industry towards more sustainable AI. |
| Context is Everything | A top score on a benchmark doesn’t automatically mean a model is best for your specific needs. Always consider your unique use case, data, and business goals when evaluating results. |

The Genesis of AI Evaluation: A Brief History of Benchmarking Artificial Intelligence Models 📜

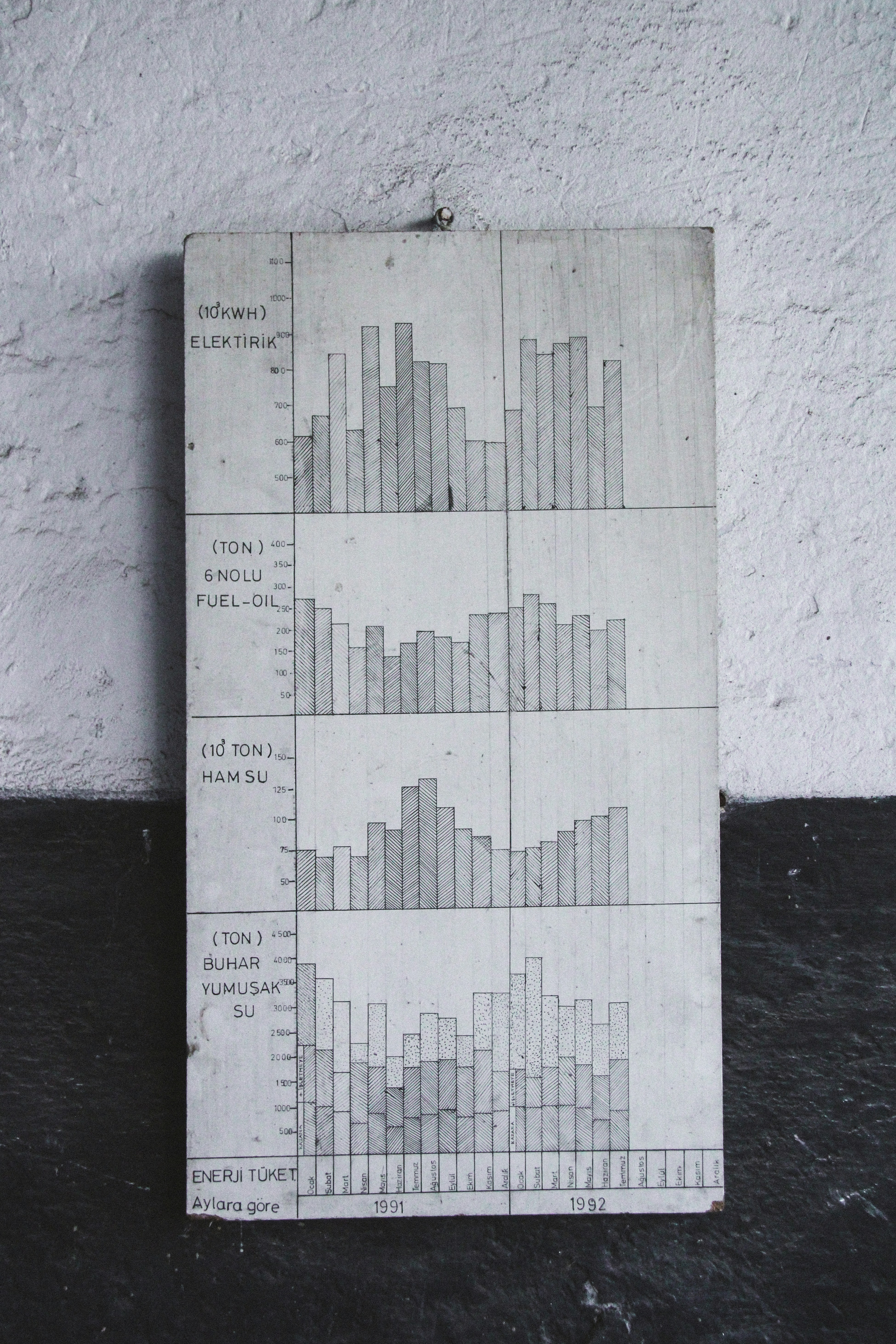

Believe it or not, the idea of benchmarking didn’t start with AI. The practice of comparing performance against a standard has roots in business management, popularized by companies like Xerox in the 1980s. But when it comes to artificial intelligence, the need for a common yardstick became obvious as the field grew.

In the early days, progress was often anecdotal. Researchers would present a new model that seemed “smarter,” but comparing it to others was like comparing apples and oranges. This led to the rise of standardized datasets and challenges.

- The Dawn of Standardized Tests: Early benchmarks like the MNIST database for handwritten digit recognition and ImageNet for object recognition were game-changers. For the first time, the entire research community could compete on a level playing field. The ImageNet Large Scale Visual Recognition Challenge (ILSVRC), launched in 2010, became the “Olympics of AI,” providing an objective measure of progress in computer vision.

- The Language Revolution: As AI tackled human language, benchmarks evolved. The General Language Understanding Evaluation (GLUE) and its tougher successor, SuperGLUE, became the standard for measuring a model’s grasp of language nuances. These tests pushed the limits of Natural Language Processing (NLP) and paved the way for the large language models (LLMs) we see today.

This evolution from simple accuracy tests to complex, multi-faceted evaluations has been crucial. It created a virtuous cycle: better benchmarks drove innovation, and more innovative models demanded even tougher benchmarks.

Unpacking the “Why”: The Crucial Role of AI Benchmarks in Model Efficiency and Performance 🤔

So, why all the fuss? Why can’t we just look at a model’s output and say, “Yep, that looks good”? Here at ChatBench.org™, we know that “good” is subjective. Benchmarks provide the objective, hard data needed to move beyond feelings and into facts. They are essential for informed decision-making, risk mitigation, and gaining a competitive edge.

Why We Can’t Just “Eyeball It”: The Need for Objective Metrics in AI Performance Testing

Imagine trying to build a race car without a speedometer or a stopwatch. You could take it for a spin and say it “feels fast,” but you’d have no real idea if your latest tweak actually improved performance. That’s what evaluating AI without benchmarks is like.

Subjectivity is the enemy of progress. A recent study by METR on developer productivity found a fascinating gap between perception and reality. Experienced developers using AI tools believed they were 20% faster, but in reality, their task completion time increased by 19%! This highlights a critical point: without objective measurement, our intuition can be wildly wrong. Benchmarks provide that objective truth, allowing for fair, consistent, and reproducible evaluations.

Beyond Accuracy: What “Efficiency” Really Means for AI Models and Resource Utilization

In the early days, accuracy was king. But as models have become incredibly accurate, the focus has shifted to a more holistic view of efficiency. After all, a model that’s 99.9% accurate but takes a week to answer a question and costs a fortune to run is practically useless.

Here’s what efficiency truly means in the AI world:

- ✅ Speed (Latency & Throughput): How quickly does the model return an answer to a single request (latency), and how many requests can it process per second (throughput)? For a real-time chatbot, low latency is non-negotiable.

- ✅ Computational Cost: How much raw processing power does the model need? A model’s demand is usually counted in operations (FLOPs or multiply-accumulates) per inference, while the hardware that runs it, such as a Neural Processing Unit (NPU), is rated in TOPS (Trillions of Operations Per Second). A higher TOPS number suggests a more powerful processor, but as Qualcomm notes, it doesn’t guarantee optimal real-world performance on its own.

- ✅ Memory Footprint: How much RAM does the model consume? This is critical for deploying models on edge devices like smartphones or IoT sensors.

- ✅ Energy Consumption: How much power does it take to train and run the model? This is not just about cost; it’s about sustainability. As one paper on MLPerf Power notes, the rapid adoption of ML has led to a surge in power consumption, making energy efficiency a critical area of research.
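To make the split between “model compute” and “hardware TOPS” concrete, here’s a back-of-the-envelope sketch. It counts the operations of a hypothetical stack of dense layers and divides by a chip’s rated TOPS to get an idealized lower bound on latency. The layer sizes, the 40 TOPS rating, and the 30% utilization are made-up assumptions for illustration, not measurements; real latency also depends on memory bandwidth, numeric precision, and the software stack.

```python
"""Back-of-the-envelope: model operations vs. hardware TOPS (illustrative only)."""


def dense_layer_ops(in_features: int, out_features: int) -> int:
    """Operations for one dense layer on a single input.

    Each output needs `in_features` multiply-accumulates (MACs); by a common
    convention one MAC counts as two operations (a multiply and an add).
    """
    macs = in_features * out_features
    return 2 * macs


def model_ops(layer_sizes: list[int]) -> int:
    """Total operations for a stack of dense layers, e.g. [784, 256, 10]."""
    return sum(dense_layer_ops(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


def latency_lower_bound_ms(ops: int, tops: float, utilization: float) -> float:
    """Idealized latency if the chip sustained `utilization` of its peak TOPS.

    Ignores memory bandwidth, data movement, and batching, so real latency
    will usually be higher than this number.
    """
    effective_ops_per_s = tops * 1e12 * utilization
    return ops / effective_ops_per_s * 1e3


if __name__ == "__main__":
    # Hypothetical model: a large MLP with made-up layer sizes.
    layers = [4096, 8192, 8192, 4096]
    ops = model_ops(layers)
    print(f"ops per inference: {ops:,}")
    # Hypothetical NPU rated at 40 TOPS, assumed to reach 30% of its peak.
    print(f"lower-bound latency: {latency_lower_bound_ms(ops, 40.0, 0.3):.4f} ms")
```

The gap between this idealized figure and a measured latency is exactly why TOPS alone can’t tell you how a model will behave on a given chip.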

Balancing these factors is the true art of building efficient AI. It’s a constant trade-off, and benchmarks are the tools that help us navigate it.

The Anatomy of a Benchmark: Key Components and Considerations for Robust AI Evaluation 🔬

Not all benchmarks are created equal. A truly robust evaluation framework is built on several key pillars that ensure fairness, consistency, and relevance. Think of it as a recipe: get one ingredient wrong, and the whole dish can be ruined.

Dataset Diversity: Fueling Fair Comparisons and Accuracy Assessment

The heart of any benchmark is its dataset—the questions and examples the AI is tested on. If the dataset is flawed, the results will be meaningless.

- Representativeness: The data must reflect the real-world scenarios the model will encounter. Testing a medical AI on data from only one demographic group is a recipe for biased and potentially harmful outcomes.

- Quality and Cleanliness: The data must be accurate and free from errors. Garbage in, garbage out.

- Avoiding Contamination: A huge challenge is ensuring the test data hasn’t been seen by the model during its training. If a model has already memorized the answers to the test, the benchmark score is useless. This is why many benchmark creators keep their test sets private.
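One rough but popular way to screen for contamination is to look for long word sequences (n-grams) that a test item shares with the training corpus. The sketch below is a simplified version of that heuristic; the 8-word window and 50% overlap threshold are assumptions you’d tune, and a real pipeline would also normalize punctuation and scale to corpora far too large to hold in a Python set.

```python
"""Rough contamination check: flag test items sharing long n-grams with training text."""


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lower-cased, whitespace-tokenized word n-grams of `text`."""
    tokens = text.lower().split()
    return {tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1)}


def build_index(training_docs: list[str], n: int) -> set[tuple[str, ...]]:
    """Union of every n-gram seen in the training corpus."""
    index: set[tuple[str, ...]] = set()
    for doc in training_docs:
        index |= ngrams(doc, n)
    return index


def flag_contaminated(
    test_items: list[str], training_docs: list[str], n: int = 8, threshold: float = 0.5
) -> list[int]:
    """Indices of test items whose n-grams overlap the training index by >= threshold."""
    index = build_index(training_docs, n)
    flagged = []
    for i, item in enumerate(test_items):
        grams = ngrams(item, n)
        if not grams:  # item shorter than n words: cannot judge, so skip it
            continue
        overlap = len(grams & index) / len(grams)
        if overlap >= threshold:
            flagged.append(i)
    return flagged
```

Flagged items aren’t proof of memorization, but they’re the first ones to drop or rewrite before trusting a score.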

Evaluation Metrics: More Than Just Numbers for AI Model Performance

Metrics are the criteria used to score a model’s performance. While accuracy is the most common, it’s often not enough.

| Metric | What It Measures |
|---|---|
| Accuracy, Precision, Recall, F1 Score | These are fundamental for classification tasks, giving a nuanced view of how many predictions were correct and how well the model identifies relevant results. |
| BLEU / ROUGE | Used in NLP for tasks like translation and summarization, these scores measure how similar the model’s generated text is to a human-written reference. |
| Latency / Throughput | These directly measure the model’s speed and capacity, crucial for real-world applications. |
| Energy Efficiency | Measured in metrics like (samples/s)/W, which works out to samples per joule, or as the inverse of total energy used (1/J), this quantifies the energy cost of a model’s operations, a key focus of benchmarks like MLPerf Power. |
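Here’s why accuracy alone can fool you, in a few lines. The sketch computes accuracy, precision, recall, and F1 from scratch for a binary classifier; in practice you’d likely reach for scikit-learn’s `sklearn.metrics` functions instead, but writing it out shows what each number counts.

```python
"""Accuracy, precision, recall, and F1 for a binary classifier, from scratch."""


def binary_metrics(y_true: list[int], y_pred: list[int], positive: int = 1) -> dict[str, float]:
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false alarms
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # misses
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Imbalanced toy data: only 2 of 10 cases are positive (e.g. fraud).
    y_true = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
    y_pred = [0] * 10  # a "model" that always predicts the majority class
    print(binary_metrics(y_true, y_pred))
```

The always-negative “model” scores 80% accuracy on this toy data while catching zero positives, so its recall and F1 are both 0.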

Standardized Protocols: Leveling the Playing Field for AI Benchmarking Tools

Finally, a good benchmark needs a clear and strict set of rules—an evaluation protocol. This ensures that everyone runs the test in the same way, making comparisons fair and meaningful. It specifies things like:

- How the model should be set up.

- How many solved examples the model may see in its prompt before answering (e.g., “zero-shot” with none, or “few-shot” with a handful).

- Specific hardware and software configurations.

Organizations like MLCommons, which manages the MLPerf benchmarks, play a crucial role in defining and enforcing these protocols, ensuring the integrity of the results.
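To see what “everyone runs the test the same way” means in code, here’s a minimal timing harness that pins its settings in a protocol object and reports them alongside the results. This is an illustrative sketch, not MLPerf’s own LoadGen tool; the field names and defaults are assumptions, and runs are sequential, so the throughput figure reflects a single stream of requests.

```python
"""Minimal latency/throughput measurement under a fixed, recorded protocol."""
import statistics
import time
from dataclasses import asdict, dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Protocol:
    """Settings every run must share so results stay comparable (illustrative fields)."""

    warmup_runs: int = 5
    timed_runs: int = 50
    batch_size: int = 1


def measure(predict: Callable[[list], object], inputs: Sequence, protocol: Protocol) -> dict:
    """Time `predict` on one fixed batch under `protocol`; runs are sequential."""
    if protocol.timed_runs < 2:
        raise ValueError("need at least 2 timed runs to estimate percentiles")
    batch = list(inputs[: protocol.batch_size])
    # Warm-up runs are discarded so caches and lazy initialization don't skew timing.
    for _ in range(protocol.warmup_runs):
        predict(batch)
    latencies = []
    for _ in range(protocol.timed_runs):
        start = time.perf_counter()
        predict(batch)
        latencies.append(time.perf_counter() - start)
    total = sum(latencies)
    return {
        "protocol": asdict(protocol),  # record the settings next to the numbers
        "p50_ms": statistics.median(latencies) * 1e3,
        # 99th percentile; with few runs this sits close to the worst case.
        "p99_ms": statistics.quantiles(latencies, n=100, method="inclusive")[98] * 1e3,
        "throughput_samples_per_s": (
            protocol.timed_runs * len(batch) / total if total > 0 else float("inf")
        ),
    }
```

Reporting the protocol with every result is the design choice that matters here: two numbers measured with different batch sizes or warm-up rules simply aren’t comparable.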

Navigating the Labyrinth: Popular AI Benchmarking Suites and Platforms for Machine Learning Evaluation 🗺️

The world of AI benchmarks is vast and ever-expanding. It can be tough to know where to start! Here’s our team’s rundown of some of the most influential and widely used benchmark suites that are shaping the industry.

1. MLPerf: The Gold Standard for AI Performance and Speed Testing

If there’s one name that dominates the conversation around AI performance, it’s MLPerf. Managed by the MLCommons consortium, it’s the industry-standard suite for measuring the performance of machine learning systems, from tiny microcontrollers to massive data centers.

- What it covers: MLPerf has different suites for various tasks, including Training, Inference, HPC (High-Performance Computing), and even Storage.

- Why it matters for efficiency: MLPerf is obsessed with apples-to-apples comparisons of speed and throughput. More recently, with MLPerf Power, it has become the leading benchmark for measuring energy efficiency, a critical step towards sustainable AI.

- Our take: At ChatBench.org™, we see MLPerf as the definitive benchmark for anyone serious about the hardware and systems aspect of AI. When a company like NVIDIA or Google releases MLPerf results, the whole industry pays attention.

2. GLUE & SuperGLUE: NLP’s Toughest Tests for Language Model Efficiency

For those of us in the world of language models, GLUE (General Language Understanding Evaluation) and its more challenging successor, SuperGLUE, were foundational.

- What it covers: These benchmarks consist of a collection of diverse and difficult language understanding tasks, from sentiment analysis to question answering.

- Why it matters for efficiency: While primarily focused on linguistic capability, topping the GLUE leaderboard required significant computational resources. The race to beat these benchmarks pushed researchers to scale up Transformer-based models such as BERT, and then to make them leaner: compact variants like DistilBERT and ALBERT reported their GLUE scores to show they kept most of the accuracy with far fewer parameters.

- Our take: Although many models have now surpassed human performance on these tests, their legacy is undeniable. They set the stage for the next generation of more complex language benchmarks.

3. ImageNet & COCO: Visionary Benchmarks for Computer Vision Model Evaluation

In the realm of computer vision, two names stand out: ImageNet and COCO (Common Objects in Context).

- What it covers: ImageNet is famous for its massive image classification task. COCO goes further, evaluating models on object detection, segmentation, and captioning within complex scenes.

- Why it matters for efficiency: The sheer scale of these datasets forced researchers to develop more efficient training methods and more streamlined model architectures to have any hope of competing.

- Our take: These benchmarks are the bedrock of modern computer vision. If you’re building a model that needs to “see,” you’ll almost certainly be testing it against one of these.

4. Hugging Face Benchmarks: Community-Driven Evaluation for Transformers and Beyond

Hugging Face has become the de facto hub for the NLP community, and their approach to benchmarking reflects that.

- What it covers: Hugging Face hosts a massive collection of datasets and models, allowing for easy benchmarking on a wide array of tasks. Their Open LLM Leaderboard is one of the most-watched rankings for open-source language models.

- Why it matters for efficiency: The leaderboard doesn’t just rank models on accuracy; it provides a space for the community to compare different model sizes and architectures, fostering a culture of efficiency.

- Our take: For anyone working with open-source models, the Hugging Face ecosystem is indispensable. It democratizes benchmarking, making it accessible to everyone.

5. Custom Benchmarks: When Off-the-Shelf Won’t Cut It for Your Specific AI Use Case

While public benchmarks are fantastic for general comparison, they often don’t reflect the unique challenges of a specific business problem. That’s where custom benchmarks come in.

- What it covers: A custom benchmark is a test you design yourself, using your own data and tailored to your specific business application.

- Why it matters for efficiency: It allows you to measure what truly matters for your ROI. For example, you can evaluate a model’s performance on your company’s internal documents or customer support tickets, providing a much more accurate picture of its real-world efficiency.

- Our take: We’re huge advocates for custom benchmarks. Public leaderboards are a great starting point, but for deployment, nothing beats testing a model on the actual data it will be working with.
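A custom benchmark doesn’t need to be elaborate to be useful. The sketch below runs a model over your own test cases and reports exact-match accuracy overall and per category, so a weakness on, say, product names can’t hide inside a good average. The `Case` fields, the tags, and the toy model are hypothetical placeholders; exact match is a deliberately simple scorer, and many real tasks need fuzzy matching, rubric grading, or human review.

```python
"""Sketch of a custom benchmark: your own test cases, scored per category."""
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass
class Case:
    prompt: str
    expected: str
    tag: str  # hypothetical categories, e.g. "product_name", "jargon", "edge_case"


def run_custom_benchmark(model: Callable[[str], str], cases: list[Case]) -> dict[str, float]:
    """Exact-match accuracy overall and per tag (case- and whitespace-insensitive)."""
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for case in cases:
        answer = model(case.prompt).strip().lower()
        correct = answer == case.expected.strip().lower()
        for key in ("overall", case.tag):
            totals[key] += 1
            hits[key] += int(correct)
    return {key: hits[key] / totals[key] for key in totals}


if __name__ == "__main__":
    # Hypothetical stand-in model: a lookup table instead of a real LLM call.
    answers = {"What is SKU-123?": "Blue Widget", "Reset password?": "Use the portal"}
    toy_model = lambda prompt: answers.get(prompt, "unknown")
    cases = [
        Case("What is SKU-123?", "blue widget", "product_name"),
        Case("Reset password?", "use the portal", "support"),
        Case("What is SKU-999?", "red widget", "product_name"),
    ]
    print(run_custom_benchmark(toy_model, cases))
```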

The Art of Interpretation: Decoding Benchmark Results for Real-World AI Impact and Scalability 🎨

So you’ve got the scores. Model A beat Model B by 3 points on some obscure benchmark. Time to pop the champagne, right? 🍾 Not so fast. The numbers are only half the story. Interpreting those results correctly is a skill—one that separates the amateur enthusiasts from the seasoned pros.

Understanding Trade-offs: Speed vs. Accuracy vs. Resource Use in AI Model Deployment

There’s no such thing as a free lunch in AI. Improving one metric often means sacrificing another. This is the fundamental trade-off in AI model deployment.

- Accuracy vs. Speed: A larger, more complex model might be more accurate, but it will almost certainly be slower. For an application like real-time fraud detection, a slightly less accurate model that delivers an answer in milliseconds is far more valuable than a perfect model that takes minutes.

- Performance vs. Cost: The highest-performing model might require cutting-edge, expensive hardware like the latest NVIDIA GPUs. You have to ask: is the marginal gain in accuracy worth the steep increase in compute cost?

- Accuracy vs. Energy Efficiency: Energy benchmarks like MLPerf Power make this trade-off visible. Squeezing out the last fraction of a percent of accuracy usually means a bigger model or more compute, and the extra energy can be far out of proportion to the gain. Is that a trade-off your organization is willing to make?

A benchmark score is a single point in a multi-dimensional space. Your job is to find the point that best aligns with your project’s unique constraints and goals.
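One practical way to work in that multi-dimensional space is to keep only the Pareto-optimal candidates: models that no other candidate beats on every axis at once. Here’s a small sketch over three axes; the candidate names and numbers are invented for illustration.

```python
"""Keep only Pareto-optimal candidates across accuracy, latency, and energy."""
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    name: str
    accuracy: float          # higher is better
    latency_ms: float        # lower is better
    joules_per_query: float  # lower is better


def dominates(a: Result, b: Result) -> bool:
    """True if `a` is at least as good as `b` on every axis and strictly better on one."""
    at_least_as_good = (
        a.accuracy >= b.accuracy
        and a.latency_ms <= b.latency_ms
        and a.joules_per_query <= b.joules_per_query
    )
    strictly_better = (
        a.accuracy > b.accuracy
        or a.latency_ms < b.latency_ms
        or a.joules_per_query < b.joules_per_query
    )
    return at_least_as_good and strictly_better


def pareto_front(results: list[Result]) -> list[Result]:
    """Candidates that no other candidate dominates."""
    return [r for r in results if not any(dominates(o, r) for o in results if o is not r)]


if __name__ == "__main__":
    # Hypothetical benchmark results, not real measurements.
    candidates = [
        Result("large", accuracy=0.92, latency_ms=120.0, joules_per_query=3.0),
        Result("small", accuracy=0.89, latency_ms=35.0, joules_per_query=0.8),
        Result("medium", accuracy=0.88, latency_ms=40.0, joules_per_query=1.0),
    ]
    print([r.name for r in pareto_front(candidates)])
```

“medium” drops out because “small” is more accurate, faster, and cheaper to run; choosing between “large” and “small” is then a business decision, not a benchmark one.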

Context is King: Applying Benchmarks to Your Specific AI Project and Business Goals

A model that aces a general knowledge benchmark like MMLU might be terrible at understanding your company’s specific jargon. This is why context is everything.

Before you even look at a leaderboard, you need to define your priorities.

- What is the core task? Is it classification, generation, or something else?

- What are the performance requirements? Does it need to be real-time? How many users will it serve?

- What are the resource constraints? Will it run on a massive cloud server or a low-power edge device?

- What does success look like for the business? Does it mean higher customer satisfaction, lower operational costs, or something else?

Only when you have answers to these questions can you begin to interpret benchmark results in a way that’s meaningful for your project.
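Once those answers exist, it helps to write them down as hard targets that every candidate is checked against. A minimal sketch, assuming three hypothetical targets (p99 latency, memory, and accuracy on your own task); the field names and thresholds are placeholders for whatever your project actually requires.

```python
"""Check measured benchmark results against explicit project requirements."""
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Hypothetical project targets; the field names are illustrative."""

    max_p99_latency_ms: float
    max_memory_gb: float
    min_task_accuracy: float


def violations(req: Requirements, measured: dict[str, float]) -> list[str]:
    """Names of the targets a candidate misses; an empty list means it passes."""
    failed = []
    if measured["p99_latency_ms"] > req.max_p99_latency_ms:
        failed.append("p99_latency_ms")
    if measured["memory_gb"] > req.max_memory_gb:
        failed.append("memory_gb")
    if measured["task_accuracy"] < req.min_task_accuracy:
        failed.append("task_accuracy")
    return failed


if __name__ == "__main__":
    # Example: an edge deployment with an 8 GB memory ceiling (made-up numbers).
    req = Requirements(max_p99_latency_ms=200.0, max_memory_gb=8.0, min_task_accuracy=0.9)
    print(violations(req, {"p99_latency_ms": 150.0, "memory_gb": 12.0, "task_accuracy": 0.93}))
```

A model that tops a leaderboard but fails one of these checks is simply not a candidate for this project.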

Avoiding Pitfalls: Common Misinterpretations and How to Sidestep Them in AI Performance Analysis

It’s easy to be led astray by impressive-looking numbers. Here are some common traps we see people fall into:

- ❌ Over-indexing on a Single Metric: Focusing only on accuracy while ignoring latency, cost, and energy use is a classic mistake.

- ❌ Ignoring the Dataset: A model might perform well because the benchmark’s dataset is very similar to its training data. Always question how well the test data represents your real-world use case.

- ❌ Vendor Bias: Be skeptical of benchmarks published by a vendor that only show their product in a favorable light. Always look for independent, third-party evaluations like those from MLCommons.

- ❌ Benchmark “Saturation”: Some older benchmarks, like GLUE, have been “solved,” with top models exceeding human performance. A high score here is less meaningful than it once was.

Our advice: Triangulate your data. Consult multiple benchmark sources, test with your own data, and always, always map the metrics back to your business goals.

Beyond the Hype: The Future of AI Benchmarking, Ethical Considerations, and Green AI 🚀

The world of AI is moving at lightning speed, and the way we measure it is evolving right alongside. The benchmarks of today are already giving way to the more holistic, responsible, and dynamic evaluation frameworks of tomorrow. Here’s what we at ChatBench.org™ see on the horizon.

Addressing Bias and Fairness in AI Evaluation: Building Trustworthy Models

For too long, performance was the only thing that mattered. But we’ve learned the hard way that a high-performing model can also be biased, unfair, and unsafe. The future of benchmarking lies in measuring these critical ethical dimensions.

- Fairness Audits: New benchmarks are emerging that explicitly test for bias across different demographic groups (e.g., race, gender, age). They analyze whether a model’s performance is equitable for everyone.

- Robustness and Adversarial Testing: How does a model behave when faced with unexpected or intentionally misleading inputs? Benchmarks are being designed to probe these weaknesses, ensuring models are reliable under pressure.

- Transparency and Explainability: Can the model explain its reasoning? Future benchmarks will likely score models on their ability to provide clear, understandable justifications for their decisions, a key component of building trust.
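The simplest fairness check is to break a score down by group and look at the spread. The sketch below computes per-group accuracy and the largest gap between groups; it’s one disparity measure among many (fairness audits also use criteria such as demographic parity or equalized odds), and the group labels here are placeholders.

```python
"""Per-group accuracy and the largest gap between groups (one simple fairness check)."""
from collections import defaultdict


def accuracy_by_group(y_true: list[int], y_pred: list[int], groups: list[str]) -> dict[str, float]:
    """Accuracy computed separately for each group label."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def max_accuracy_gap(per_group: dict[str, float]) -> float:
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Toy data with hypothetical group labels "a" and "b".
    per_group = accuracy_by_group(
        y_true=[1, 0, 1, 1, 0, 1],
        y_pred=[1, 0, 1, 0, 1, 1],
        groups=["a", "a", "a", "b", "b", "b"],
    )
    print(per_group, max_accuracy_gap(per_group))
```

A respectable overall accuracy can conceal a large gap like the one in this toy data, which is exactly what a fairness audit is meant to surface.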

The Rise of Green AI: Energy Efficiency Benchmarks and Computational Cost Optimization

The computational cost of training and running large-scale AI models has a very real environmental impact. The “Green AI” movement advocates for a shift in focus, prioritizing energy efficiency alongside traditional performance metrics.

This is where the MLPerf Power benchmark is leading the charge. As a groundbreaking paper from the MLCommons consortium explains, MLPerf Power provides a standardized methodology to evaluate the energy efficiency of ML systems “from microwatts to megawatts.” This allows researchers and companies to:

- ✅ Measure and Compare Energy Use: For the first time, we have a fair way to compare the power consumption of different hardware and software setups.

- ✅ Drive Innovation in Efficiency: By making energy a key metric, the benchmark incentivizes the development of more efficient algorithms, hardware, and cooling systems.

- ✅ Promote Sustainability: As the paper states, this work “lays the foundation for future research in this critical area” and helps in “developing sustainable AI solutions.”

We believe that in the near future, reporting energy efficiency scores will be just as important as reporting accuracy.
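The arithmetic behind these energy metrics is simple once you have an average power reading for a run (assumed here to come from an external power meter). Because a watt is one joule per second, throughput per watt, (samples/s)/W, is the same number as samples per joule. A quick sketch with made-up figures:

```python
"""Turn a run's sample count, duration, and average power into energy metrics."""


def energy_metrics(samples: int, seconds: float, avg_watts: float) -> dict[str, float]:
    """Energy figures for one run; `avg_watts` is assumed to come from a power meter."""
    joules = avg_watts * seconds  # energy = power x time
    throughput = samples / seconds
    return {
        "joules_total": joules,
        "joules_per_sample": joules / samples,
        "samples_per_joule": samples / joules,
        # (samples/s) / W equals samples/J, because 1 W = 1 J/s
        "samples_per_s_per_watt": throughput / avg_watts,
    }


if __name__ == "__main__":
    # Hypothetical run: 1,000 inferences in 10 s at an average draw of 50 W.
    print(energy_metrics(samples=1000, seconds=10.0, avg_watts=50.0))
```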

Dynamic Benchmarking: Adapting to Evolving AI Models and Research Frontiers

A static benchmark is a snapshot in time. But AI isn’t static—it’s constantly learning and evolving. The future requires benchmarks that can adapt.

- Living Benchmarks: Instead of a fixed set of questions, these benchmarks would continuously update with new data and more challenging tasks, preventing models from simply “memorizing” the test.

- Measuring Generalization: The ultimate goal is Artificial General Intelligence (AGI). Future benchmarks will focus less on narrow tasks and more on a model’s ability to reason, learn new skills, and generalize its knowledge to completely unseen problems.

- Human-in-the-Loop Evaluation: Some capabilities, like creativity or nuanced conversation, are hard to score with an algorithm. We expect to see more evaluation frameworks that incorporate real-time human feedback to provide a more holistic assessment.

Our ChatBench.org™ Perspective: Real-World Anecdotes and Expert Recommendations for AI Model Efficiency 💡

Alright, enough with the theory. Let’s talk about what this looks like in the trenches. Here at ChatBench.org™, we’re not just reporting on benchmarks; we’re using them every single day to push the boundaries of what’s possible.

Our Triumphs and Tribulations: Learning from Our Own Benchmarking Journeys

I remember a project a while back where we were developing a new chatbot for a large e-commerce client. We had two candidate models. Model A was a behemoth, topping all the public LLM benchmarks for conversational accuracy. Model B was smaller, leaner, and its scores were, frankly, just “good.”

On paper, Model A was the clear winner. But then we ran our custom benchmark. We fed both models 10,000 real (anonymized) customer support logs from our client. The results were shocking.

- Model A, despite its high general-knowledge score, struggled with the client’s product names and industry jargon. Its general answers were accurate, but it was slow, with high latency.

- Model B, the underdog, was much faster. And after a bit of fine-tuning on the client’s data, its accuracy on the specific task actually surpassed Model A’s.

The lesson was clear: public benchmarks get you in the ballpark, but custom benchmarks get you a home run. We deployed Model B, and the client saw a massive reduction in customer wait times and a huge boost in satisfaction. It was a win for efficiency, driven by a smart benchmarking strategy.

Top Tips from Our ML Engineers: Best Practices for Robust AI Model Evaluation

Over the years, we’ve developed a set of core principles for evaluating AI model efficiency. We’re happy to share our playbook with you:

- Define “Efficient” First: Before you look at a single model, define what efficiency means for your project. Write down your targets for latency, throughput, cost, and power consumption.

- Start Broad, Then Go Deep: Use broad, public benchmarks like MLPerf or the Open LLM Leaderboard to create a shortlist of promising models. This is your initial filter.

- Build a Relevant Gauntlet: Create a custom benchmark using your own data. This is the most important step. Make it tough! Include the tricky edge cases and weird queries that your model will inevitably face in the real world.

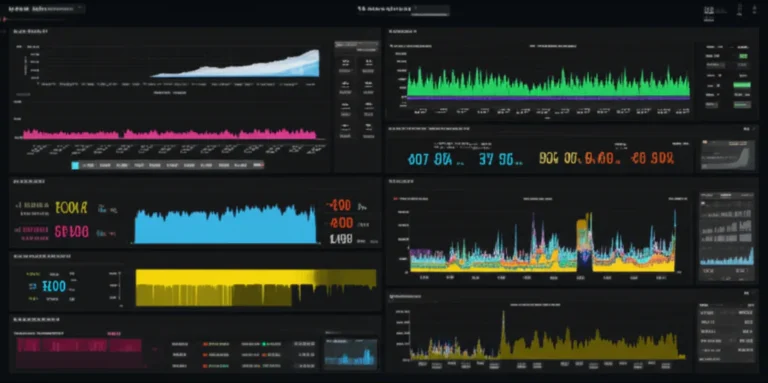

- Measure Holistically: Don’t just score for accuracy. Instrument your tests to measure everything: latency, memory usage, and if possible, power draw. You need the full picture to make an informed decision. Check out our Model Comparisons for examples of how we do this.

- Think About Scalability: Test how the model performs under load. A model that’s fast with one user might crumble when hit with a thousand concurrent requests.

- Benchmark Continuously: AI is not a “set it and forget it” technology. Models can drift over time as data changes. Re-run your benchmarks regularly to catch performance degradation before it impacts your users.
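For the scalability tip, a basic load test fires many requests at once and watches how latency and throughput respond as concurrency rises. The sketch uses a thread pool and a hypothetical `fake_model` that just sleeps for about 10 ms; swap in a real call to your model endpoint. Note that a sleeping stand-in scales almost perfectly with more threads, whereas a real model server eventually saturates, and finding that point is the whole purpose of the test.

```python
"""Simple concurrency load test against a stand-in model (illustrative only)."""
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in: replace with a real call to your model endpoint."""
    time.sleep(0.01)  # pretend inference takes about 10 ms
    return prompt.upper()


def load_test(model: Callable[[str], str], n_requests: int, concurrency: int) -> dict:
    """Send `n_requests` through `concurrency` worker threads and summarize timing."""

    def timed_call(prompt: str) -> float:
        start = time.perf_counter()
        model(prompt)
        return time.perf_counter() - start

    prompts = [f"request {i}" for i in range(n_requests)]
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, prompts))
    wall = time.perf_counter() - wall_start
    return {
        "concurrency": concurrency,
        "mean_latency_ms": 1e3 * sum(latencies) / len(latencies),
        "max_latency_ms": 1e3 * latencies[-1],
        "throughput_rps": n_requests / wall,  # completed requests per wall-clock second
    }


if __name__ == "__main__":
    for c in (1, 8, 32):
        print(load_test(fake_model, n_requests=32, concurrency=c))
```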

Conclusion: The Unsung Heroes of AI Progress and Innovation 🎯

After this deep dive, one thing is crystal clear: AI benchmarks are the unsung heroes that drive innovation, efficiency, and trust in artificial intelligence. They transform subjective hype into objective insight, enabling researchers, engineers, and businesses to make informed decisions that balance accuracy, speed, cost, and sustainability.

Remember our tale of the chatbot showdown? That story perfectly illustrates why context matters. Public benchmarks like MLPerf and GLUE are invaluable for initial comparisons, but custom benchmarks tailored to your unique data and use case are the ultimate litmus test for efficiency and real-world impact.

Benchmarks also shine a light on the complex trade-offs inherent in AI development. Whether it’s juggling latency against energy consumption or accuracy against cost, these standardized tests help you navigate the labyrinth with confidence.

Looking ahead, the future of benchmarking is bright and evolving. Ethical considerations, fairness audits, and green AI metrics are becoming front and center. The rise of dynamic, adaptive benchmarks will keep pace with the rapid evolution of AI models, ensuring that evaluation remains relevant and robust.

So, next time you see a flashy leaderboard or a jaw-dropping accuracy number, ask yourself: What’s behind the score? How does it align with my goals? That’s the secret sauce to turning AI insight into a competitive edge.

Recommended Links: Dive Deeper into AI Evaluation and Benchmarking 📚

Ready to explore more? Here are some curated resources and shopping links to fuel your AI benchmarking journey:

- MLPerf Official Website: mlcommons.org

- GLUE Benchmark: gluebenchmark.com

- SuperGLUE Benchmark: super.gluebenchmark.com

- ImageNet Challenge: image-net.org

- COCO Dataset: cocodataset.org

- Hugging Face Open LLM Leaderboard: huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

- NVIDIA MLPerf Results: nvidia.com/data-center/mlperf

- Google TPU MLPerf Benchmarks: cloud.google.com/tpu/docs/mlperf-benchmarks

👉 Shop AI Hardware and Tools on Amazon:

- NVIDIA GPUs

- Arduino UNO (for TinyML power measurement)

- Books on AI Benchmarking and Efficiency:

  - “Efficient Processing of Deep Neural Networks” by Vivienne Sze et al.

  - “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville (classic foundational text)

  - “Sustainable AI: AI for Climate and Environment” by various authors

FAQ: Your Burning Questions About AI Benchmarks Answered 🔍

What are the key metrics used in AI benchmarks to assess model performance?

AI benchmarks use a variety of metrics depending on the task:

- Accuracy, Precision, Recall, F1 Score: Common for classification tasks, these metrics evaluate how well a model predicts correct labels and balances false positives and negatives.

- BLEU and ROUGE: Used in natural language processing to assess the quality of generated text compared to human references.

- Latency and Throughput: Measure how fast a model responds and how many requests it can handle simultaneously, critical for real-time applications.

- Energy Efficiency: Metrics like samples per joule (equivalently, samples per second per watt) or joules per inference quantify the power consumption of running AI models, increasingly important for sustainable AI.

- TOPS (Trillions of Operations Per Second): A hardware-level metric indicating raw computational capacity, especially for NPUs.

These metrics together provide a multi-dimensional view of model performance, enabling nuanced evaluation beyond just “accuracy.”

How do AI benchmarks influence the development of more efficient AI algorithms?

Benchmarks act as a compass and catalyst for innovation:

- They set clear targets for researchers, pushing them to optimize models not just for accuracy but also for speed, memory, and power consumption.

- By providing standardized, comparable results, benchmarks encourage competition and collaboration across academia and industry.

- The rise of energy-focused benchmarks like MLPerf Power has accelerated research into quantization, pruning, and efficient architectures that reduce computational cost without sacrificing performance.

- Benchmarks also expose trade-offs and bottlenecks, guiding engineers to design algorithms that better balance competing demands.

In short, benchmarks help transform theoretical advances into practical, deployable AI solutions.
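Quantization is a good example of the efficiency techniques this pressure rewards. The sketch below shows symmetric per-tensor int8 quantization in plain Python: each weight is stored as a one-byte integer plus one shared scale, so weight memory shrinks roughly 4x versus 32-bit floats, at the cost of a rounding error of at most half the scale per weight. Production toolkits add refinements such as per-channel scales and calibration data; this is only the core idea.

```python
"""Symmetric per-tensor int8 quantization of a weight list (pure Python, illustrative)."""


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp defensively to the int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Approximate the original floats from the int8 codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]  # toy weights
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    print(codes, scale)
    print(max(abs(w - r) for w, r in zip(weights, restored)))  # worst rounding error
```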

Can AI benchmarks predict real-world performance of AI models?

Benchmarks provide valuable insights but are not perfect predictors of real-world performance:

- Public benchmarks often use curated datasets that may not fully represent the diversity and complexity of real-world data.

- Models might perform well on benchmarks due to overfitting or dataset leakage, but struggle with unseen or domain-specific inputs.

- Real-world deployment involves additional factors like network latency, hardware constraints, and user behavior that benchmarks may not capture.

- That’s why custom benchmarks using your own data and scenarios are essential for accurate evaluation.

Benchmarks are best viewed as guiding tools rather than absolute guarantees.

What role do AI benchmarks play in comparing different AI technologies?

AI benchmarks provide a common language for comparing diverse AI technologies:

- They enable apples-to-apples comparisons between different model architectures (e.g., Transformers vs. CNNs), hardware platforms (e.g., GPUs vs. NPUs), and software frameworks (e.g., TensorFlow vs. PyTorch).

- Benchmarks highlight strengths and weaknesses, helping stakeholders choose the right technology for their needs.

- They also foster transparency and accountability by exposing vendor claims to independent verification.

- For example, the MLPerf benchmark suite is widely respected for its rigorous, vendor-neutral evaluation of hardware and software stacks.

By standardizing evaluation, benchmarks accelerate the adoption of the most efficient and effective AI technologies.

How do energy efficiency benchmarks impact AI sustainability efforts?

Energy efficiency benchmarks like MLPerf Power are game-changers for sustainable AI:

- They quantify the environmental impact of AI workloads, making energy consumption a first-class metric alongside accuracy and speed.

- This transparency drives innovation in low-power hardware, efficient algorithms, and cooling technologies.

- Organizations can make informed decisions balancing performance with carbon footprint, aligning AI development with global sustainability goals.

- As AI scales, these benchmarks will be critical in regulating and incentivizing greener AI practices.

How can organizations create effective custom AI benchmarks?

Creating a custom benchmark involves:

- Defining clear objectives: What specific tasks and performance aspects matter most?

- Collecting representative data: Use real-world, high-quality data that reflects the target use case.

- Choosing relevant metrics: Include accuracy, latency, memory, and energy consumption as appropriate.

- Establishing standardized protocols: Fix hardware/software settings and evaluation procedures to ensure consistency.

- Running thorough tests: Include edge cases and stress tests to assess robustness.

- Iterating and refining: Update benchmarks as requirements evolve.

Custom benchmarks provide actionable insights that public benchmarks cannot, enabling tailored AI solutions.

Reference Links: Our Sources and Further Reading on AI Model Efficiency 🔗

- Qualcomm: A Guide to AI TOPS and NPU Performance Metrics — qualcomm.com

- MLCommons: MLPerf Power Benchmark — mlcommons.org

- METR Study: Measuring the Impact of Early-2025 AI on Experienced Open-Source Developers — metr.org/blog/2025-07-10-early-2025-ai-experienced-os-dev-study

- MLPerf Official Site — mlcommons.org

- GLUE Benchmark — gluebenchmark.com

- SuperGLUE Benchmark — super.gluebenchmark.com

- ImageNet Challenge — image-net.org

- COCO Dataset — cocodataset.org

- Hugging Face Open LLM Leaderboard — huggingface.co

- NVIDIA MLPerf Results — nvidia.com

- Google TPU MLPerf Benchmarks — cloud.google.com

Thanks for sticking with us! If you want to explore more about how AI benchmarks can be used to compare the performance of different AI frameworks, check out our detailed article here: Can AI benchmarks be used to compare the performance of different AI frameworks?