
How AI Benchmarks for NLP & Computer Vision Differ in 10 Key Ways 🚀

Have you ever wondered why an AI model that nails language tasks might stumble when analyzing images? Or why the metrics that crown a champion in natural language processing (NLP) seem worlds apart from those in computer vision (CV)? At ChatBench.org™, we’ve cracked the code on how AI benchmarks for these two powerhouse domains differ—and why understanding these differences can give you a serious edge in AI development and deployment.

In this article, we’ll unpack 10 major differences between NLP and CV benchmarks, from the very nature of tokens and parameters to the evaluation metrics and datasets that shape model performance. Along the way, we’ll share insider tips on choosing the right benchmarks for your projects, explore emerging multimodal benchmarks that blur the lines, and reveal why chasing leaderboard scores alone might lead you astray. Curious how your favorite AI models stack up or how to interpret those cryptic benchmark numbers? Stick around—we’ve got you covered!

Key Takeaways

- NLP and CV benchmarks differ fundamentally due to the nature of their data: sequential text vs. spatial images.

- Tokens in NLP are words or subwords, while in CV they are pixels or image patches, shaping model architectures and evaluation.

- Evaluation metrics vary widely: NLP uses BLEU, ROUGE, and perplexity; CV relies on mAP, IoU, and accuracy.

- Benchmarking tools and datasets are specialized, reflecting unique challenges like semantic ambiguity in NLP and visual occlusion in CV.

- Multimodal benchmarks are emerging, testing AI’s ability to integrate language and vision, but a unified metric remains elusive.

- Choosing the right benchmark requires aligning with your project’s goals and data domain, not just picking the highest score.

Ready to decode the AI benchmarking puzzle and turn those numbers into actionable insights? Let’s dive in!

Table of Contents

- ⚡️ Quick Tips and Facts About AI Benchmarks

- 📜 The Evolution of AI Benchmarks: From NLP to Computer Vision

- 🔍 Understanding AI Benchmarks: What Are They and Why Do They Matter?

- 🧠 NLP Benchmarks Demystified: Key Metrics and Popular Datasets

- 👁️ Computer Vision Benchmarks Explained: Metrics, Datasets, and Challenges

- ⚔️ 10 Major Differences Between NLP and Computer Vision Benchmarks

- 🛠️ How Benchmarking Tools and Frameworks Differ for NLP vs. Computer Vision

- 📊 Interpreting Benchmark Results: What to Look for in NLP and CV Models

- 🤖 Real-World Impact: How Benchmarks Influence AI Model Development and Deployment

- 🧩 Tokens, Parameters, and Their Role in NLP Benchmarking

- 🖼️ Pixels, Features, and Parameters: The Backbone of Computer Vision Benchmarks

- 🔄 Cross-Domain Benchmarking: Can NLP and CV Metrics Ever Align?

- 💡 Tips for Choosing the Right Benchmark for Your AI Project

- 🚀 Future Trends: Where Are AI Benchmarks Headed?

- 🎯 Conclusion: Mastering AI Benchmarks for NLP and Computer Vision

- 🔗 Recommended Links for Deep Dives on AI Benchmarks

- ❓ Frequently Asked Questions About AI Benchmarks

- 📚 Reference Links and Resources


⚡️ Quick Tips and Facts About AI Benchmarks

Welcome! You’ve landed at ChatBench.org™, where we turn AI insight into your competitive edge. Before we dive deep into the fascinating world of AI benchmarks, let’s get you up to speed with some quick-fire facts. Think of this as your cheat sheet!

- What’s a Benchmark? A benchmark is a standardized test for measuring the performance of AI models. It’s like an SAT for algorithms: it gives each one a score so we can compare them. As noted in a featured video on the topic, benchmarks are standardized frameworks for assessing LLM performance.

- NLP vs. CV: The Core Difference: The biggest distinction lies in the data. Natural Language Processing (NLP) benchmarks test a model’s ability to understand and generate text 🗣️. Computer Vision (CV) benchmarks, on the other hand, evaluate how well a model can interpret and analyze images and videos 🖼️.

- It’s All About the “Tokens”: In AI, a “token” is the basic unit of data. For NLP, a token might be a word, part of a word, or a character. For computer vision, a token could be a pixel or a small patch of an image. This fundamental difference shapes everything that follows.

- Different Tasks, Different Metrics: You can’t grade a poetry essay with a math formula, right? Similarly, NLP uses metrics like BLEU (for translation) and Perplexity (for language modeling), while CV relies on mAP (mean Average Precision for object detection) and Accuracy (for classification).

- Model Architectures Diverge: The tools for the job are different. NLP models often use Transformer architectures (like in GPT-4) to handle sequential data. CV has historically relied on Convolutional Neural Networks (CNNs) to process spatial, pixel-based data.

- Performance Isn’t Directly Comparable: A score of 90 on an NLP benchmark isn’t the same as a 90 on a CV benchmark. As the European Commission’s AI Watch notes, “performance reached in a specific task cannot be directly compared with that for other tasks, as they tend to have a very different nature.”

📜 The Evolution of AI Benchmarks: From NLP to Computer Vision

To truly grasp the differences, we need to take a quick trip down memory lane. Here at ChatBench.org™, we’ve seen this field explode, and it’s been a wild ride!

In the early days, AI evaluation was… let’s say, ad-hoc. Researchers would test their models on small, proprietary datasets. It was the wild west of AI! 🤠

The Rise of Standardized Testing

Then came the game-changers. For computer vision, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), launched in 2010, was a watershed moment. It provided a massive, standardized dataset of over a million labeled images, creating a common ground for competition and innovation. The success of deep neural networks like AlexNet on ImageNet in 2012 is what kicked off the modern AI revolution.

For NLP, the journey was more gradual. Early benchmarks focused on specific tasks like part-of-speech tagging or sentiment analysis. But the real shift came with multi-task benchmarks like the General Language Understanding Evaluation (GLUE) and its tougher successor, SuperGLUE. These benchmarks forced models to be good at a range of linguistic tasks, pushing the development of more versatile and powerful Large Language Models (LLMs).

This history matters because it shows how the benchmarks themselves drove progress. The challenges posed by ImageNet led to breakthroughs in CNNs, while GLUE became the proving ground where pre-trained Transformer models such as BERT showed what the architecture could do.

🔍 Understanding AI Benchmarks: What Are They and Why Do They Matter?

So, what exactly is a benchmark? At its core, an AI benchmark consists of three key components, a concept well explained in an overview video on the topic:

- A Dataset: A curated collection of data (text, images, etc.) that the model will be tested on.

- A Task: A specific problem to solve using the dataset (e.g., classify these images, answer these questions).

- A Metric: A quantitative way to measure the model’s performance on the task (e.g., accuracy, F1-score).
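To make those three pieces concrete, here’s a minimal Python sketch of a benchmark harness. The dataset, the keyword-based “model,” and the function names (`run_benchmark`, `keyword_model`) are hypothetical illustrations, not the API of any real benchmark.

```python
from typing import Callable, Sequence


def run_benchmark(
    dataset: Sequence[tuple[str, str]],
    model: Callable[[str], str],
    metric: Callable[[list[str], list[str]], float],
) -> float:
    """Apply the model to every input (the task) and score its predictions
    against the reference answers with a single metric."""
    inputs = [x for x, _ in dataset]
    references = [y for _, y in dataset]
    predictions = [model(x) for x in inputs]
    return metric(predictions, references)


def accuracy(predictions: list[str], references: list[str]) -> float:
    """Share of predictions that exactly match the reference label."""
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)


# Hypothetical three-item sentiment "benchmark".
DATASET = [
    ("I loved this film", "positive"),
    ("Terrible plot and worse acting", "negative"),
    ("Not bad at all", "positive"),
]


def keyword_model(text: str) -> str:
    """Toy classifier: any text containing 'bad' or 'terrible' is negative."""
    lowered = text.lower()
    return "negative" if "bad" in lowered or "terrible" in lowered else "positive"


print(run_benchmark(DATASET, keyword_model, accuracy))
```

The toy model trips on the negation in “Not bad at all” and scores 2/3: even a tiny fixed test set exposes exactly the kind of weakness a benchmark is meant to surface.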

Why are they so crucial?

- ✅ Objectivity: They provide a standardized way to compare different models, reducing subjective bias. It’s how we can tell whether, say, Google’s Gemini outperforms an earlier model on a specific reasoning task.

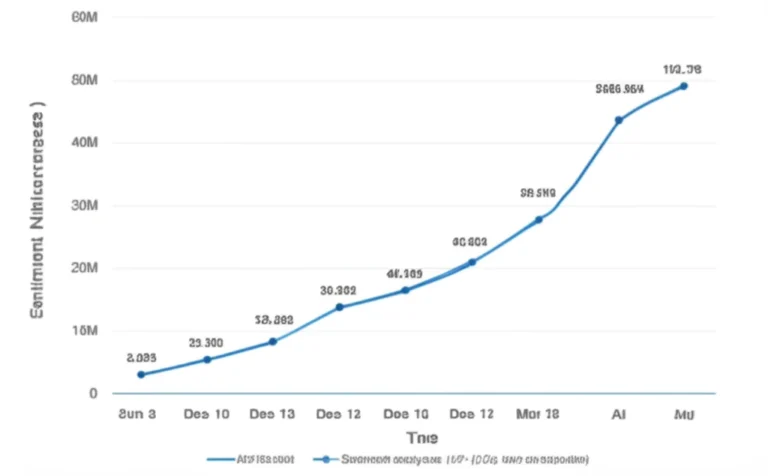

- ✅ Progress Tracking: They allow the entire AI community to measure progress over time. We can see clear trend lines showing how models have improved.

- ✅ Driving Innovation: By setting a high bar, benchmarks push researchers to develop new techniques and architectures.

- ✅ Informing Decisions: For businesses, benchmarks are vital for choosing the right model for their needs. Our work in AI Business Applications often starts with analyzing benchmark performance.

However, they’re not perfect. As the video points out, benchmarks can be too specific, leading to models that “teach to the test” (overfitting), and they have a finite lifespan as models eventually max them out.

🧠 NLP Benchmarks Demystified: Key Metrics and Popular Datasets

Alright, let’s talk language! NLP is all about teaching machines to understand the nuances, context, and structure of human language. It’s a messy, beautiful, and incredibly complex challenge. To learn more, check out our deep dive on the most widely used AI benchmarks for natural language processing tasks.

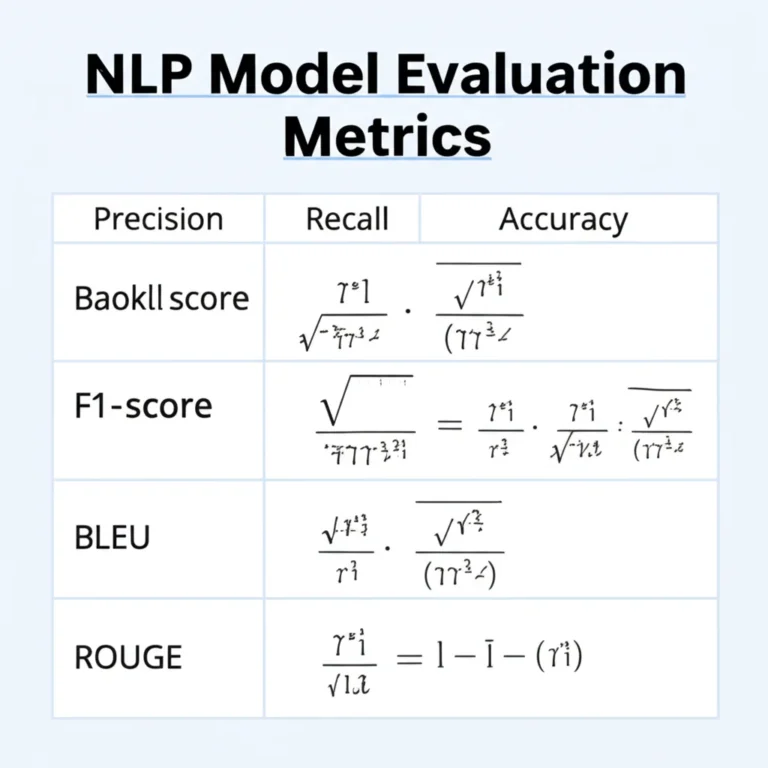

Key NLP Metrics

- Perplexity (PPL): Measures how “surprised” a language model is by a sequence of text. Lower is better. It’s a good indicator of a model’s fluency.

- BLEU Score (Bilingual Evaluation Understudy): Primarily used for machine translation. It compares the machine-generated translation to one or more human translations and measures the overlap. Higher is better.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation): Often used for summarization tasks. It measures the overlap between the model-generated summary and a human-created one. Higher is better.

- F1-Score: The harmonic mean of precision and recall. It’s a great all-around metric for classification tasks where you care about both false positives and false negatives (like sentiment analysis). Higher is better.

- Accuracy: The good old percentage of correct predictions. Simple and effective for tasks like text classification.
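Here’s a minimal Python sketch of three of these metrics, written from their textbook definitions. It assumes you already have per-token log-probabilities from some language model, and the ROUGE-1 function is deliberately simplified (whitespace tokens, no stemming), so its scores won’t exactly match the official ROUGE package.

```python
import math
from collections import Counter


def perplexity(token_log_probs):
    """Perplexity = exp(average negative log-likelihood per token).

    token_log_probs: natural-log probabilities the model assigned to each
    actual next token in a held-out text.
    """
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


def f1_score(y_true, y_pred, positive="pos"):
    """Binary F1: harmonic mean of precision and recall for one class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def rouge1_recall(candidate, reference):
    """Simplified ROUGE-1 recall: share of reference words found in the
    candidate, crediting each word at most min(reference, candidate) times."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum(min(count, cand[word]) for word, count in ref.items())
    return overlap / sum(ref.values())


# A model that gives probability 0.25 to every correct token is as
# "surprised" as a uniform guess among 4 options: perplexity of about 4.
print(perplexity([math.log(0.25)] * 8))

print(f1_score(["pos", "pos", "neg", "neg"], ["pos", "neg", "pos", "neg"]))
print(rouge1_recall("the cat sat", "the cat sat on the mat"))
```

The F1 example has one true positive, one false positive, and one false negative, so precision and recall are both 0.5; the ROUGE-1 example recovers 3 of the 6 reference words.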

Popular NLP Benchmark Suites

These aren’t just single datasets; they are collections of diverse tasks designed to test a model’s overall language prowess.

| Benchmark Suite | Key Focus | Example Tasks | Popular Models Tested |
|---|---|---|---|
| GLUE | General language understanding | Sentiment analysis, sentence similarity, inference | BERT, RoBERTa, T5 |
| SuperGLUE | More challenging language understanding | Reading comprehension, coreference resolution | DeBERTa, T5, GPT-3 |
| SQuAD | Question answering | Answering questions based on a given Wikipedia passage | BERT, XLNet, ALBERT |
| MMLU | Massive multitask language understanding | 57 tasks covering STEM, humanities, social sciences, etc. | GPT-4, Claude 3, Gemini |

👁️ Computer Vision Benchmarks Explained: Metrics, Datasets, and Challenges

Now, let’s switch from words to pictures. Computer vision is about giving machines the sense of sight. This field involves tasks that are trivial for humans (like recognizing a cat in a photo) but incredibly complex for a computer, which just sees a grid of numbers (pixels).

Key Computer Vision Metrics

- Top-1 & Top-5 Accuracy: Used in image classification. Top-1 accuracy means the model’s single best guess must be correct. Top-5 accuracy means the correct label must be within the model’s top five guesses.

- Intersection over Union (IoU): The go-to metric for object detection and segmentation. It measures the overlap between the model’s predicted bounding box and the ground-truth bounding box. A higher IoU means a more accurate prediction.

- mean Average Precision (mAP): The king of object detection metrics. It computes average precision (the area under the precision-recall curve) for each object category and averages the results; COCO’s version additionally averages over IoU thresholds from 0.50 to 0.95. The result is a comprehensive single-number score for a model’s performance.

- Fréchet Inception Distance (FID): Used to evaluate the quality of images generated by models like DALL-E or Midjourney. It measures the distance between the distribution of generated images and that of real images, using features extracted by a pretrained Inception network. Lower is better.
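IoU and Top-k accuracy are simple enough to write from scratch. The Python sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)` pixel coordinates. mAP builds on IoU by counting a detection as a true positive only when its IoU with a ground-truth box clears a threshold (0.5 is a common choice), then averaging precision across recall levels and classes; we leave that part out to keep things short.

```python
def iou(box_a, box_b):
    """Intersection over Union for axis-aligned boxes given as
    (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp to zero when the boxes do not overlap at all.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring
    classes. scores: one list of per-class scores per sample."""
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)


# Two 10x10 boxes offset by 5 pixels: intersection 50, union 150.
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))

scores = [[0.1, 0.7, 0.2], [0.5, 0.1, 0.4]]
labels = [1, 2]
print(top_k_accuracy(scores, labels, k=1))  # 0.5: second sample misses at top-1
print(top_k_accuracy(scores, labels, k=2))  # 1.0: its label is the runner-up
```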

Landmark Computer Vision Datasets

| Dataset | Primary Task | Description | Key Models Tested |
|---|---|---|---|
| ImageNet | Image Classification | Over 14 million images across 20,000+ categories; the classic ILSVRC benchmark uses a 1,000-class subset with about 1.2 million training images. | AlexNet, VGG, ResNet, ViT |
| COCO | Object Detection, Segmentation | “Common Objects in Context.” Features 330K images with complex scenes. | YOLO, Faster R-CNN, Mask R-CNN |
| PASCAL VOC | Object Classification, Detection | A smaller but highly influential dataset with 20 object classes. | R-CNN, SSD |
| CIFAR-10/100 | Image Classification | A set of 60,000 small 32×32 pixel images, often used for initial model testing. | Various CNN architectures |

⚔️ 10 Major Differences Between NLP and Computer Vision Benchmarks

Okay, this is the main event! You understand the basics of both, but where do they truly diverge? Let’s break it down, point by point.

1. Data Modality & Structure:

- NLP: Deals with discrete, symbolic data (words, characters). The data is sequential and has a grammatical structure.

- CV: Deals with continuous, numerical data (pixel values). The data is spatial (2D or 3D) and has a grid-like structure in which neighboring pixels are closely related.

2. The Nature of “Tokens”:

- NLP: Tokens are meaningful units like words or subwords (e.g., “un-happi-ly”). The process of tokenization is a critical first step.

- CV: “Tokens” are less abstract. They can be individual pixels or, more commonly in modern architectures like Vision Transformers (ViTs), patches of an image.

-

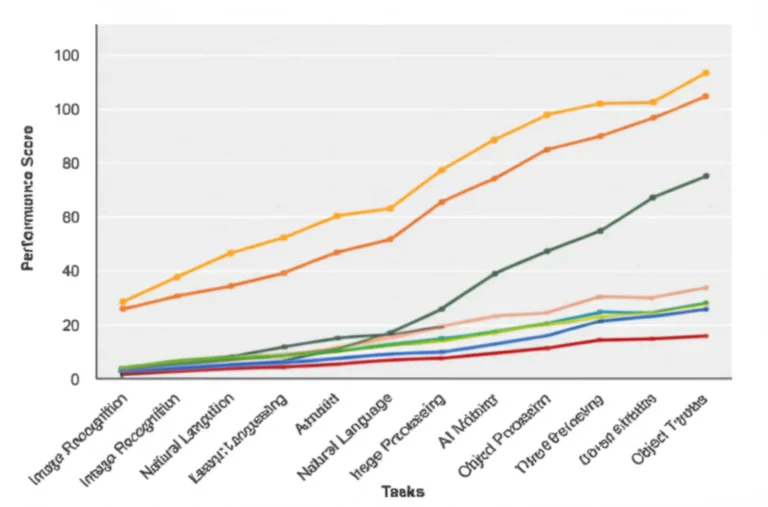

Core Tasks & Goals:

- NLP: Focuses on understanding meaning, context, and intent. Tasks include translation, summarization, and sentiment analysis.

- CV: Focuses on identifying and localizing objects, patterns, and features in visual space. Tasks include classification, detection, and segmentation.

4. Key Evaluation Metrics:

- NLP: Metrics often grapple with the subjectivity of language. BLEU and ROUGE, for instance, measure overlap with human references, which is an imperfect proxy for quality.

- CV: Metrics are often more objective. IoU and mAP are computed from the geometric overlap between predicted and ground-truth boxes or masks, which yields very concrete scores, although the IoU threshold that counts as a “match” is still a convention.

5. Dominant Model Architectures:

- NLP: The Transformer architecture reigns supreme due to its ability to handle long-range dependencies in sequential data via self-attention mechanisms.

- CV: Convolutional Neural Networks (CNNs) have been dominant for their efficiency in learning hierarchical features from spatial data. However, Transformers (ViTs) are making huge inroads here too.

6. Handling Ambiguity:

- NLP: Must resolve semantic ambiguity. Does “bank” mean a financial institution or a river’s edge? Context is everything.

- CV: Must resolve visual ambiguity. This includes challenges like object occlusion (something is blocking the view), varying lighting conditions, and different camera angles.

7. Human Annotation Complexity:

- NLP: Annotating data can be highly subjective. Is a movie review positive, negative, or neutral? Two humans might disagree.

- CV: Annotation is often more straightforward but can be incredibly tedious. Drawing precise bounding boxes or pixel-level masks for thousands of images is a monumental effort.

8. Computational Workloads:

- NLP: Large language models have enormous parameter counts, making training incredibly expensive. The memory footprint is often dominated by these parameters.

- CV: High-resolution images and videos create massive input data sizes, demanding high-speed data pipelines and significant VRAM for processing. As one guide on AI workloads notes, CV requires “powerful computational resources for real-time processing of image/video data.”

9. Role of Pre-training:

- NLP: Pre-training on vast amounts of unlabeled text (e.g., large swaths of the public web) is the standard. The model picks up grammar, facts, and some reasoning skills before being fine-tuned.

- CV: Pre-training on large datasets like ImageNet is also common, but the “knowledge” learned is about visual features (edges, textures, shapes) rather than abstract concepts.

10. Testing for “Common Sense”:

- NLP: Benchmarks increasingly test for common-sense reasoning. For example, a model should know that “if you drop a glass, it will likely break.”

- CV: Common sense is tested visually. A model should recognize that a car on a roof is an unusual scene or understand the spatial relationships between objects.
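To see the “Nature of Tokens” difference in action, here’s a Python sketch that turns a sentence into word tokens and a tiny image into ViT-style patches. The naive tokenizer and the 4×4 “image” are illustrative assumptions; real models use learned subword vocabularies and then project each flattened patch through a learned linear layer.

```python
def word_tokens(text):
    """Naive whitespace tokenizer with punctuation split off (illustration only)."""
    for mark in "!?.,":
        text = text.replace(mark, f" {mark}")
    return text.split()


def image_patches(image, patch):
    """Split an H x W grid (list of rows) into non-overlapping patch x patch
    tiles, read left-to-right, top-to-bottom, and flatten each tile."""
    height, width = len(image), len(image[0])
    assert height % patch == 0 and width % patch == 0, "size must divide evenly"
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tile = [row[left:left + patch] for row in image[top:top + patch]]
            patches.append([pixel for row in tile for pixel in row])
    return patches


print(word_tokens("AI benchmarking is fascinating!"))

# A 4x4 grayscale "image" with pixel values 0..15, cut into 2x2 patches.
tiny = [[r * 4 + c for c in range(4)] for r in range(4)]
print(image_patches(tiny, 2))

# Sequence length for a common ViT setup: 224x224 input, 16x16 patches.
print((224 // 16) ** 2)
```

The text becomes five discrete symbols, while the image becomes four vectors of raw pixel values; at the common 224×224 resolution with 16×16 patches, a ViT sees a sequence of 196 such “tokens.”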

🛠️ How Benchmarking Tools and Frameworks Differ for NLP vs. Computer Vision

The right tool for the right job makes all the difference. While foundational frameworks like Google’s TensorFlow and Meta’s PyTorch are used for both domains, the ecosystems built around them are specialized.

For the NLP Practitioner ✍️

If you’re working in NLP, your toolkit is likely dominated by Hugging Face. Their transformers library is the de facto standard for accessing pre-trained models. Their datasets and evaluate libraries make it incredibly simple to load benchmark datasets and compute standard metrics. It’s a one-stop shop for NLP benchmarking.

For the Computer Vision Engineer 📸

In the CV space, the landscape is a bit more fragmented. While Hugging Face also has excellent support for vision models, you’ll often see more direct use of framework-specific libraries:

- torchvision: A package from the PyTorch project that provides popular datasets, model architectures, and image transformations for computer vision.

- tf.keras.applications: Provides pre-trained models like VGG16, ResNet50, and MobileNet directly within TensorFlow.

- OpenCV: An essential library for real-time computer vision and image processing tasks that often precedes the deep learning step.

The key difference? NLP tooling is often centered around a unified model hub, while CV tooling is more distributed across foundational libraries and specialized tools for image manipulation.

📊 Interpreting Benchmark Results: What to Look for in NLP and CV Models

So you’re looking at a leaderboard. Model A has a 95.4 on SuperGLUE and Model B has a 92.1 mAP on COCO. What does it all mean?

First, context is king. A high score is impressive, but it’s not the whole story.

- Don’t Compare Apples and Oranges: As we’ve established, a score on an NLP benchmark is not comparable to a CV score. They measure fundamentally different abilities.

- Look at the Sub-tasks: A model might ace the overall benchmark but fail on a specific sub-task that’s critical for your application. Dig into the detailed results! For example, in our Model Comparisons, we always break down performance by category.

- Beware of Benchmark Overfitting: A model might be specifically tuned to excel on a popular benchmark but fail to generalize to real-world data that’s slightly different. This is a huge “gotcha” in the industry.

- Consider Efficiency: The top-performing model might require a supercomputer to run. A slightly lower-scoring but much more efficient model (like a distilled version) might be a better practical choice. The European Commission’s AI Watch rightly points out that a global evaluation must consider factors like energy consumption and algorithmic efficiency.

A personal anecdote: We were once evaluating a question-answering model for a client. It had a state-of-the-art score on the SQuAD benchmark. But when we deployed it on the client’s internal documents, its performance tanked! Why? The benchmark used Wikipedia articles with clean, well-structured text. The client’s data was messy, full of jargon and typos. The lesson? Always validate benchmark performance on your own data.

🤖 Real-World Impact: How Benchmarks Influence AI Model Development and Deployment

This isn’t just an academic exercise. Benchmarks have a massive, tangible impact on the AI products you use every day.

- Better Search Engines: Progress on NLP benchmarks like MMLU reflects the same language-understanding gains that help search engines like Google or Bing interpret your complex queries and provide relevant answers.

- Smarter Virtual Assistants: When Siri, Alexa, or Google Assistant get better at understanding your commands (a task measured by language understanding benchmarks), it’s a direct result of the research driven by these tests.

- Safer Autonomous Vehicles: Object detection, the capability measured by benchmarks like COCO, is critical for a self-driving car’s ability to identify pedestrians, other vehicles, and traffic signs. Better detection can literally save lives.

- More Powerful Creative Tools: Generative AI models for both text and images are evaluated with their own benchmarks. A lower (better) FID score for an image generator generally signals more realistic, higher-quality images for artists and designers.

The relentless pursuit of higher benchmark scores in the research community creates a ripple effect, leading to more capable and reliable AI applications for everyone.

🧩 Tokens, Parameters, and Their Role in NLP Benchmarking

Let’s zoom in on the building blocks. As one excellent overview explains, tokens are the inputs, and parameters are the learned “control settings” that interpret them. In NLP, this relationship is everything.

What are Tokens in NLP?

Imagine you have the sentence: “AI benchmarking is fascinating!”

- Word Tokens: ['AI', 'benchmarking', 'is', 'fascinating', '!']

- Subword Tokens (BPE-style, illustrative; the exact split depends on the tokenizer’s learned vocabulary): ['AI', 'benchmark', 'ing', 'is', 'fascinat', 'ing', '!']

The choice of tokenization strategy is a crucial step in any Fine-Tuning & Training process. Subword tokenization, used by models like BERT and GPT, is powerful because it can handle rare words and reduces the overall vocabulary size.
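Here’s a toy Python sketch of greedy longest-match-first subword segmentation, the style BERT’s WordPiece tokenizer uses at inference time (GPT-family models use byte-pair encoding, which builds its vocabulary from learned merge rules instead). The tiny vocabulary is hypothetical and hand-picked to reproduce the “un-happi-ly” style of split; `##` marks a piece that continues a word, and inputs are assumed to be lowercased.

```python
def greedy_subword_tokenize(word, vocab):
    """Greedy longest-match-first segmentation, WordPiece style.

    Non-initial pieces carry a '##' prefix. Returns ['[UNK]'] if the word
    cannot be fully covered by the vocabulary.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it is in the vocabulary.
        while end > start:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return ["[UNK]"]
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary, far smaller than any real model's.
VOCAB = {"ai", "benchmark", "##ing", "is", "fascinat", "un", "##happi", "##ly"}

for w in ["benchmarking", "fascinating", "unhappily", "ai", "xyz"]:
    print(w, "->", greedy_subword_tokenize(w, VOCAB))
```

Only eight vocabulary entries cover five words here, and an unseen word like “xyz” falls back to `[UNK]`; real vocabularies include single characters so that almost nothing is left uncovered.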

What are Parameters in NLP?

Parameters are the weights and biases within the neural network. A model like GPT-3 has 175 billion of them! During training, the model adjusts these billions of parameters to minimize its error on the training data. A well-trained set of parameters allows the model to understand that “king” – “man” + “woman” is close to “queen.”

When an NLP model is run on a benchmark, it’s the quality of these learned parameters, interpreting the input tokens, that determines the final score.
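Those parameter counts translate directly into memory. Here’s a back-of-the-envelope Python sketch, assuming 4 bytes per parameter in fp32, 2 in fp16/bf16, and 1 in int8, and counting only the weights themselves (activations, optimizer state, and caches come on top).

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to hold the weights, in decimal gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


GPT3_PARAMS = 175e9  # parameter count cited above

for label, nbytes in [("fp32", 4), ("fp16/bf16", 2), ("int8", 1)]:
    print(f"{label:>9}: {weight_memory_gb(GPT3_PARAMS, nbytes):,.0f} GB")
```

Even at 16-bit precision, the weights alone come to roughly 350 GB, far more than a single GPU holds, which is why the largest models are split across many accelerators.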

🖼️ Pixels, Features, and Parameters: The Backbone of Computer Vision Benchmarks

Now, let’s look at the same concepts through a computer vision lens.

From Pixels to Features

A computer vision model doesn’t “see” a cat. It sees a 2D array of pixel values. The magic of a CNN is how it uses parameters (in this case, the values in its convolutional filters or “kernels”) to learn to recognize hierarchical features.

- Layer 1: The first set of parameters might learn to detect simple edges and color gradients.

- Layer 2: The next layer combines these edges to recognize more complex shapes and textures (like fur or an eye).

- Deeper Layers: Subsequent layers combine these shapes to recognize object parts (a cat’s ear, a tail) and eventually, the entire object.
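Here’s what “Layer 1 detects edges” looks like numerically. This pure-Python sketch slides a hand-written 3×3 vertical-edge kernel (a Sobel-style filter, chosen here for illustration) over a tiny image; in a real CNN these kernel values are learned parameters rather than hard-coded.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation (what deep-learning libraries call
    convolution): slide the kernel over the image without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


# Hand-set vertical-edge kernel. A trained CNN learns filters like this
# in its first layer instead of having them written by hand.
VERTICAL_EDGE = [[-1, 0, 1],
                 [-2, 0, 2],
                 [-1, 0, 1]]

# 4x5 image: dark (0) on the left, bright (9) on the right.
image = [[0, 0, 9, 9, 9] for _ in range(4)]

for row in conv2d_valid(image, VERTICAL_EDGE):
    print(row)
```

Both output rows come out as `[36, 36, 0]`: the filter fires only where its window straddles the dark-to-bright boundary and stays silent over the flat bright region.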

The Role of Parameters in CV

The parameters in a CV model are the learned representation of the visual world. When a model like ResNet-50 is trained on ImageNet, its millions of parameters are adjusted to become expert feature detectors. The benchmark score (e.g., Top-1 Accuracy) is a direct measure of how well these parameters can generalize to recognize objects in new, unseen images.
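Where do those millions of parameters come from? A standard convolutional layer holds one `k × k × C_in` filter per output channel, plus an optional bias per output channel. A short Python sketch of the count (the helper name `conv2d_params` is ours):

```python
def conv2d_params(kernel_size, in_channels, out_channels, bias=True):
    """Learnable parameters in a standard 2D convolution layer: one
    kernel_size x kernel_size x in_channels filter per output channel,
    plus one bias per output channel if bias is used."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


# ResNet-style stem: 7x7 conv from RGB (3 channels) to 64 channels, no bias
# (ResNets follow their convolutions with batch normalization instead).
print(conv2d_params(7, 3, 64, bias=False))   # 9408

# A generic 3x3 conv, 64 -> 64 channels, with bias.
print(conv2d_params(3, 64, 64))              # 36928
```

Stack dozens of such layers, with channel counts growing into the thousands, plus a final fully connected classifier, and you arrive at ResNet-50’s roughly 25 million parameters.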

🔄 Cross-Domain Benchmarking: Can NLP and CV Metrics Ever Align?

This is the million-dollar question, isn’t it? For years, the two fields ran on parallel tracks. But now, with the rise of multimodal models like OpenAI’s GPT-4V and Google’s Gemini, the lines are blurring. These models can understand both text and images simultaneously.

So, how do we benchmark a model that can look at a picture of a refrigerator’s contents and write you a recipe?

This is a frontier in AI research. New benchmarks are emerging that specifically test these cross-domain capabilities:

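- Visual Question Answering (VQA): A model is given an image and a text-based question about it (e.g., “What color is the car?”). The standard VQA metric is a consensus-based accuracy: an answer counts as fully correct if at least three of the ten human annotators gave it.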

- Image Captioning: A model must generate a descriptive text caption for an image. This is often evaluated with text-overlap metrics like BLEU (borrowed from NLP) or CIDEr (designed specifically for captioning), which compare the generated caption to human references.

- Text-to-Image Generation: How do you score a model like Stable Diffusion? This is tough. It often involves a combination of automated metrics (like FID score) and human evaluation to judge creativity, relevance, and quality.
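For VQA specifically, the widely used VQA benchmark scores answers with a consensus-based accuracy rather than plain exact match: an answer earns full credit if at least three of the ten human annotators gave it, and partial credit otherwise. Here’s a simplified Python sketch with hypothetical annotator answers; the official evaluation also normalizes answer strings and averages over subsets of annotators, which we omit.

```python
def vqa_accuracy(predicted, human_answers):
    """Simplified VQA consensus accuracy: min(#matching human answers / 3, 1)."""
    matches = sum(answer == predicted for answer in human_answers)
    return min(matches / 3, 1.0)


# Ten hypothetical annotator answers to "What color is the car?"
answers = ["red"] * 7 + ["maroon"] * 2 + ["dark red"]

print(vqa_accuracy("red", answers))      # 1.0: well above three matches
print(vqa_accuracy("maroon", answers))   # about 0.67: only two annotators agreed
print(vqa_accuracy("blue", answers))     # 0.0
```

The design choice here is to tolerate legitimate disagreement: “maroon” is not wrong, so it earns partial credit instead of zero.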

While the underlying metrics might still be rooted in their original domains (e.g., using BLEU for captioning), the tasks themselves force a holistic evaluation. We don’t have a single, unified “intelligence score” yet, but benchmarks are evolving to measure this powerful new class of models.

💡 Tips for Choosing the Right Benchmark for Your AI Project

Feeling overwhelmed by the options? Don’t be. Choosing the right benchmark is about aligning the test with your goal. Here are some pro tips from our Developer Guides.

- Define Your Core Task: What is the single most important thing your AI needs to do? If it’s a customer service chatbot, prioritize question-answering and dialogue benchmarks. If it’s a medical imaging tool, focus on segmentation and classification benchmarks with relevant medical datasets.

- Consider Your Data Domain: A model that excels on news articles (a common benchmark dataset) might struggle with legal documents or social media slang. Look for benchmarks that use data similar to your own, or better yet, create a small evaluation set from your actual data.

- Balance Performance with Efficiency: Don’t just grab the model at the top of the leaderboard. Check its size, inference speed, and computational requirements. A smaller, faster model with 95% of the performance might be the smarter business decision.

- Look for Robustness and Fairness Benchmarks: Standard benchmarks test for accuracy, but what about bias or performance under adversarial conditions? Specialized benchmarks like HellaSwag (for common-sense NLP) or ImageNet-C (for corruption robustness in CV) can reveal weaknesses standard tests miss.

- Start Broad, Then Go Deep: Use a broad, multi-task benchmark like GLUE (for NLP) or a general classification benchmark like ImageNet (for CV) to get an initial feel for a model’s capabilities. Then, drill down with more specific benchmarks that match your exact use case.

🚀 Future Trends: Where Are AI Benchmarks Headed?

The world of AI benchmarking is constantly moving. Standing still means falling behind. Here’s what we at ChatBench.org™ see on the horizon:

- Dynamic and Adversarial Benchmarks: Static benchmarks get “solved.” The future is in dynamic benchmarks that constantly evolve. Platforms like Dynabench allow humans to try and trick models, creating a dataset that specifically targets their weaknesses.

- Real-World Interaction: We’re moving beyond static question-answering. The next wave of benchmarks will evaluate AI agents on their ability to perform multi-step tasks in simulated environments, like booking a flight or using software tools.

- More Holistic Evaluation: There’s a growing consensus that a single score isn’t enough. Future leaderboards will likely incorporate metrics for efficiency, robustness, fairness, and transparency alongside raw performance.

- The End of the “One True Benchmark”: As models become more general, the idea of a single benchmark to crown the “best” model is fading. The future is specialized, with a suite of benchmarks used to create a more nuanced performance profile. As the video we mentioned earlier astutely notes, benchmarks have a “finite lifespan,” and new ones must constantly be developed as AI advances.

The game is changing from a simple sprint to a complex, multi-event decathlon. And we’ll be here to help you make sense of the scores.

🎯 Conclusion: Mastering AI Benchmarks for NLP and Computer Vision

Phew! We’ve journeyed through the fascinating landscape of AI benchmarks, uncovering how those for natural language processing (NLP) differ fundamentally from those for computer vision (CV). From the very nature of tokens—words and subwords versus pixels and patches—to the distinct architectures, metrics, and challenges, the divide is clear and profound.

But here’s the kicker: these differences aren’t limitations—they’re strengths. They reflect the unique demands of language and vision, pushing AI researchers to innovate in tailored ways. As AI models grow more multimodal, blending text and images, benchmarks are evolving too, creating exciting new frontiers.

Remember our earlier question about whether NLP and CV metrics could ever align? The answer is a cautious “partly.” Emerging multimodal benchmarks like Visual Question Answering and Image Captioning hint at a future where AI’s understanding transcends single modalities, yet they still score models with metrics rooted in one domain or the other. For now, the best practice is to choose benchmarks that align closely with your project’s domain and goals.

For businesses and developers, the takeaway is clear: don’t just chase leaderboard scores blindly. Understand the nuances behind the numbers, validate models on your own data, and balance performance with efficiency and robustness. That’s how you turn AI benchmarks from mere numbers into a competitive edge.

At ChatBench.org™, we’re excited to continue guiding you through this evolving terrain, helping you pick the right tools, interpret the scores, and build AI solutions that truly deliver.

🔗 Recommended Links for Deep Dives on AI Benchmarks

Ready to explore further? Here are some curated resources and products to help you dive deeper or get started with your own AI benchmarking journey.

Benchmarking Frameworks and Libraries

- Hugging Face Transformers & Datasets: Amazon Search: Hugging Face Books | Hugging Face Official

- PyTorch & torchvision: Amazon Search: PyTorch Books | PyTorch Official

- TensorFlow & tf.keras: Amazon Search: TensorFlow Books | TensorFlow Official

Popular AI Hardware for Benchmarking

- NVIDIA GPUs (e.g., RTX 4090, A100): Amazon Search: NVIDIA GPUs | NVIDIA Official

- Cloud GPU Providers:

- Paperspace: Paperspace AI GPU Instances

- RunPod: RunPod GPU Cloud

- DigitalOcean: DigitalOcean GPU Droplets

Books on AI Benchmarks and Model Evaluation

- “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville: The classic deep dive into neural networks and their evaluation. Amazon Link

- “Natural Language Processing with Transformers” by Lewis Tunstall, Leandro von Werra, and Thomas Wolf: A practical guide to state-of-the-art NLP models and benchmarking. Amazon Link

- “Computer Vision: Algorithms and Applications” by Richard Szeliski: A comprehensive resource on CV techniques and evaluation. Amazon Link

❓ Frequently Asked Questions About AI Benchmarks

What are the key metrics used in AI benchmarks for natural language processing versus computer vision?

NLP Metrics:

NLP benchmarks rely heavily on metrics that evaluate linguistic quality and understanding. Common metrics include:

- Perplexity: Measures how well a language model predicts a sample. Lower perplexity indicates better fluency.

- BLEU and ROUGE: Evaluate the overlap between generated and reference texts, useful for translation and summarization.

- F1-Score and Accuracy: Used for classification tasks like sentiment analysis or named entity recognition.

CV Metrics:

Computer vision benchmarks focus on spatial and detection accuracy:

- Top-1 and Top-5 Accuracy: For classification tasks, measuring if the correct label is within the top predictions.

- Intersection over Union (IoU): Measures overlap between predicted and true bounding boxes in detection tasks.

- mean Average Precision (mAP): Aggregates precision across classes and IoU thresholds, standard for object detection.

- Fréchet Inception Distance (FID): Assesses quality of generated images.

These metrics reflect the fundamental differences in data type and task complexity between NLP and CV.

How do dataset requirements differ between NLP and computer vision AI benchmarks?

NLP Dataset Requirements:

- Must capture the diversity of language, including syntax, semantics, and pragmatics.

- Often requires large corpora of text with annotations for specific tasks (e.g., question-answer pairs, sentiment labels).

- Annotation can be subjective, requiring expert human judgment, especially for nuanced tasks like sarcasm detection.

Computer Vision Dataset Requirements:

- Need vast collections of labeled images or videos, often with pixel-level annotations for segmentation or bounding boxes for detection.

- Annotation is labor-intensive but more objective, involving drawing precise shapes or labeling objects.

- Must cover diverse conditions: lighting, occlusion, angles, and backgrounds to ensure model robustness.

The nature of the data and the complexity of annotation drive distinct dataset design and curation strategies.

Why is interpretability more challenging in NLP AI benchmarks compared to computer vision?

Interpretability in NLP:

Language models process sequential, symbolic data with complex dependencies and ambiguities. The meaning of a sentence can hinge on subtle context, idioms, or cultural references. This makes it difficult to trace exactly why a model made a particular prediction or generated a specific output. Additionally, tokenization strategies and embedding spaces add layers of abstraction.

Interpretability in CV:

While CV interpretability is still challenging, CV models often learn hierarchical visual features (edges, textures, shapes) that can be visualized as activation maps or saliency maps. Because these maps are aligned with the image grid, researchers can see which regions of an image influenced a decision. Tools like Grad-CAM provide intuitive visual explanations.

Thus, the abstract, high-dimensional nature of language makes NLP interpretability a tougher nut to crack.
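The Grad-CAM idea mentioned above can be sketched in a few lines. This minimal version assumes the feature maps of a CNN's last convolutional layer, and the gradients of the target class score with respect to them, have already been extracted (in practice with a framework's autograd); both are represented as nested Python lists, and the function name is hypothetical.

```python
def grad_cam(activations, gradients):
    """Combine K feature maps into one coarse class heatmap, Grad-CAM style.

    activations[k][i][j]: value of feature map k at spatial cell (i, j)
    gradients[k][i][j]:   d(class score) / d(activations[k][i][j])
    """
    num_maps = len(activations)
    h, w = len(activations[0]), len(activations[0][0])
    # 1. Each channel's weight is the global average of its gradients.
    weights = [sum(sum(row) for row in gradients[k]) / (h * w) for k in range(num_maps)]
    # 2. Weighted sum of the feature maps.
    cam = [[0.0] * w for _ in range(h)]
    for k in range(num_maps):
        for i in range(h):
            for j in range(w):
                cam[i][j] += weights[k] * activations[k][i][j]
    # 3. ReLU keeps only evidence that raises the class score.
    cam = [[max(0.0, v) for v in row] for row in cam]
    # 4. Scale to [0, 1] for display (a common visualization step).
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


acts = [[[1, 0], [0, 0]], [[0, 0], [0, 1]]]      # two toy 2x2 feature maps
grads = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]  # map 0 helps, map 1 hurts
print(grad_cam(acts, grads))  # [[1.0, 0.0], [0.0, 0.0]]
```

Real Grad-CAM implementations then upsample this coarse map to the input resolution and overlay it on the image as a heatmap, which is what makes CV explanations so easy to read compared with attributions over text tokens.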

How can businesses leverage differences in AI benchmarks to gain a competitive edge?

Businesses can use benchmark insights to:

- Select Models Tailored to Their Domain: Understanding which benchmarks align with their data and tasks helps avoid costly mismatches.

- Balance Performance and Efficiency: Choosing models that perform well on relevant benchmarks but also meet latency and resource constraints.

- Anticipate Model Limitations: Benchmarks reveal weaknesses (e.g., bias, robustness) that can inform risk management and compliance.

- Drive Innovation: Benchmarking internal models against public standards encourages continuous improvement and innovation.

- Communicate Value: Demonstrating benchmark performance can build customer trust and differentiate products in competitive markets.

At ChatBench.org™, we help businesses decode these nuances to turn AI benchmarking into strategic advantage.

What role do tokens and parameters play in differentiating NLP and CV benchmarks?

Tokens and parameters are foundational to AI models but differ in nature between NLP and CV:

- Tokens: In NLP, tokens are discrete language units (words or subwords) that carry syntax and semantics. In CV, the analogous units are pixels or, in Vision Transformers, fixed-size image patches that carry spatial information.

- Parameters: Both domains rely on learned parameters (weights and biases) that transform these token representations. Large NLP models typically spend their parameters on sequence modeling (e.g., Transformers), while classic CV models concentrate them in spatial feature extractors (e.g., CNN filters); Vision Transformers blur this line by applying Transformers to image patches.

These differences shape benchmark design, evaluation metrics, and model architectures.
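The token and parameter distinctions can be illustrated with a toy sketch. `greedy_subword_tokenize` uses a WordPiece-style greedy longest-match-first split over a tiny, hypothetical vocabulary; `image_to_patch_tokens` follows the Vision Transformer approach of cutting an image into non-overlapping P x P patches; `conv2d_param_count` shows where a convolutional layer's parameters come from. All names are illustrative, not a library API.

```python
def greedy_subword_tokenize(word, vocab):
    """Greedy longest-match-first split in the spirit of WordPiece.

    Non-initial pieces carry a '##' prefix; returns ['[UNK]'] when some
    part of the word cannot be matched against `vocab`.
    """
    pieces, start = [], 0
    while start < len(word):
        match, end = None, len(word)
        while end > start:
            piece = word[start:end] if start == 0 else "##" + word[start:end]
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return ["[UNK]"]
        pieces.append(match)
        start = end
    return pieces


def image_to_patch_tokens(image, patch):
    """Cut an H x W x C image (nested lists) into flattened P x P patches,
    read in row-major order, as a Vision Transformer does before projection."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tokens.append([
                value
                for row in image[top:top + patch]
                for pixel in row[left:left + patch]
                for value in pixel
            ])
    return tokens


def conv2d_param_count(kernel, c_in, c_out, bias=True):
    """Weights of a kernel x kernel convolution, plus one bias per output channel."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


vocab = {"bench", "##mark", "##ing"}  # toy vocabulary (hypothetical)
print(greedy_subword_tokenize("benchmarking", vocab))  # ['bench', '##mark', '##ing']

image = [[[0, 0, 0] for _ in range(224)] for _ in range(224)]  # blank RGB image
tokens = image_to_patch_tokens(image, 16)
print(len(tokens), len(tokens[0]))  # 196 768

print(conv2d_param_count(3, 64, 128))  # 73856
```

A 224 x 224 RGB image with 16 x 16 patches yields 196 patch tokens (the setup of ViT-B/16 at that resolution), each holding 16 x 16 x 3 = 768 raw values before the learned linear projection. A single 3 x 3 convolution from 64 to 128 channels already carries 73,856 parameters, all of them spatial filters.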

How do multimodal AI benchmarks challenge traditional NLP and CV evaluation methods?

Multimodal benchmarks test models that process and integrate multiple data types (e.g., text + images). This challenges traditional benchmarks because:

- Metrics must capture cross-domain understanding (e.g., accuracy in answering questions about images).

- Evaluation may need to combine NLP metrics (such as BLEU for image captions) with CV metrics (such as IoU for grounding objects or FID for generated images).

- Human evaluation often becomes necessary to assess creativity, coherence, and relevance.

This emerging area pushes the boundaries of AI benchmarking and demands new methodologies.
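As an example of a metric built for cross-domain understanding, here is a sketch of the accuracy rule popularized by the VQA (Visual Question Answering) benchmark, where each question has several human answers (ten in VQA) and a prediction counts as fully correct if at least three humans gave it. The version below averages over leave-one-annotator-out subsets, as the VQA evaluation does; its answer normalization (lowercasing and collapsing whitespace) is a deliberate simplification of the official string processing, and the function names are hypothetical.

```python
def normalize_answer(text):
    """Simplified normalization (the official VQA script does much more)."""
    return " ".join(text.lower().split())


def vqa_accuracy(predicted, human_answers):
    """VQA-style accuracy: min(#matching humans / 3, 1), averaged over every
    subset that leaves one human annotator out."""
    if len(human_answers) < 2:
        raise ValueError("need at least two human answers")
    pred = normalize_answer(predicted)
    answers = [normalize_answer(a) for a in human_answers]
    subset_scores = []
    for i in range(len(answers)):
        others = answers[:i] + answers[i + 1:]
        matches = sum(a == pred for a in others)
        subset_scores.append(min(matches / 3.0, 1.0))
    return sum(subset_scores) / len(subset_scores)


humans = ["cat", "cat"] + ["dog"] * 8  # ten hypothetical annotator answers
print(vqa_accuracy("Cat ", humans))  # about 0.6
print(vqa_accuracy("dog", humans))   # 1.0
```

The partial credit for "cat" reflects genuine disagreement among annotators, which is exactly the kind of ambiguity that pure image metrics or pure text metrics would miss.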

📚 Reference Links and Resources

- European Commission AI Watch: Performance of AI Research

- Functionize Blog: Understanding Tokens and Parameters in Model Training

- Cloudian Guide: 6 Types of AI Workloads, Challenges & Critical Best Practices

- Hugging Face Official: https://huggingface.co/

- PyTorch Official: https://pytorch.org/

- TensorFlow Official: https://www.tensorflow.org/

- NVIDIA Official: https://www.nvidia.com/en-us/

- Paperspace Cloud GPUs: https://www.paperspace.com/

- RunPod GPU Cloud: https://www.runpod.io/

- DigitalOcean GPU Droplets: https://www.digitalocean.com/products/gradient/gpu-droplets

We hope this comprehensive guide empowers you to navigate the complex but rewarding world of AI benchmarks with confidence and clarity. Stay tuned to ChatBench.org™ for more expert insights!